Lecture 2

This lecture can be seen as a summary of the Rust performance book. Although this lecture was created independently of that book, the Rust performance book is a good resource, and you will see many of the concepts described here come back in depth there. These lecture notes strictly follow the live lectures, so we may skip one or two things that may interest you in the Rust performance book. We highly recommend using it when doing Assignment 2.

Contents

- Benchmarking

- Profiling

- What does performance mean?

- Making code faster by being clever

- Calling it less

- Making existing programs faster

- Resources

Benchmarking

Rust is often regarded as quite a performant programming language. Just like C and C++, Rust code is compiled into native machine code, which your processor can directly execute. That gives Rust a leg up over languages like Java or Python, which require a costly runtime and virtual machine in which they execute. But having a fast language certainly doesn't say everything.

The reason is that the fastest infinite loop in the world is still slower than any program that terminates. That's a bit hyperbolic of course, but the fact that Rust is considered a fast language does not mean that any program you write in it is immediately as fast as it can be. In these notes, we will talk about some techniques to improve your Rust code and how to find what is slowing it down.

First, it is important to learn how to identify slow code. We can use benchmarks for this. A very useful tool for writing benchmarks for Rust code is called criterion.

Criterion itself has lots of documentation on how to use it. Let's look at a little example from the documentation.

Below, we benchmark a simple function that calculates Fibonacci numbers:

    use criterion::{black_box, criterion_group, criterion_main, Criterion};

    fn fibonacci(n: u64) -> u64 {
        match n {
            0 => 1,
            1 => 1,
            n => fibonacci(n-1) + fibonacci(n-2),
        }
    }

    pub fn fibonacci_benchmark(c: &mut Criterion) {
        c.bench_function("benchmarking fibonacci", |b| {
            // if you have setup code that shouldn't be benchmarked itself
            // (like constructing a large array, or initializing data),
            // you can put that here

            // this code, inside `iter` is actually measured
            b.iter(|| {
                // a black box hides the argument from the optimizer
                fibonacci(black_box(20))
            })
        });
    }

    criterion_group!(benches, fibonacci_benchmark);
    criterion_main!(benches);

With cargo bench you can run all benchmarks in your project. It will store some data in your target folder to record the history of the benchmarks you have run. This means that if you change your implementation (of the Fibonacci function in this case), criterion will tell you whether your code has become faster or slower.

It's important to briefly discuss black_box. The Rust compiler is quite smart, and sometimes optimizes away the code that you wanted to run!

For example, maybe the compiler notices that the fibonacci function is quite simple and that fibonacci(20) is simply 10946. And because the fibonacci function has no side effects (we call such a function "pure"), it may just insert the number 10946 at compile time everywhere you call fibonacci(20). That would mean that this benchmark will always tell you that your code runs in pretty much 0 seconds. That would not be ideal.

The black box simply returns the value you pass in. However, it makes sure the Rust compiler cannot follow what is going on inside. That means the compiler won't know in advance that you call the fibonacci function with the value 20, and also won't optimize away the entire function call. Using black boxes is pretty much free, so you can sprinkle them around without changing your performance measurements significantly, if at all.

Criterion tells me that the example above takes roughly 15µs on my computer.

It is important to benchmark your code after making a change to ensure you are actually improving the performance of your program.
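If you want a quick measurement without setting up criterion, the same black box idea can be sketched with the standard library alone. This is a rough illustration and not a replacement for criterion: it assumes `std::hint::black_box` (available in recent Rust versions), the helper `time_fibonacci` is a made-up name, and there is no warm-up or statistical analysis.

```rust
use std::hint::black_box;
use std::time::{Duration, Instant};

fn fibonacci(n: u64) -> u64 {
    match n {
        0 => 1,
        1 => 1,
        n => fibonacci(n - 1) + fibonacci(n - 2),
    }
}

/// Hypothetical helper: calls `fibonacci(n)` `runs` times (runs must be > 0)
/// and returns the last result together with the average time per call.
fn time_fibonacci(n: u64, runs: u32) -> (u64, Duration) {
    let start = Instant::now();
    let mut last = 0;
    for _ in 0..runs {
        // black_box on the input hides the constant from the optimizer,
        // black_box on the output keeps the call from being discarded
        last = black_box(fibonacci(black_box(n)));
    }
    (last, start.elapsed() / runs)
}

fn main() {
    let (value, per_call) = time_fibonacci(20, 1_000);
    println!("fibonacci(20) = {value}, about {per_call:?} per call");
}
```

Numbers from a loop like this are noisy, so treat them as a rough indication only.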

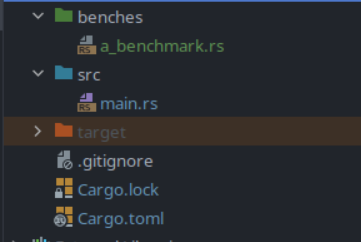

You usually put benchmarks in a folder called benches next to src, just like how you would put integration tests in a folder called tests. Cargo understands these folders, and when you put Rust files in those special folders, you can import your main library from there, so you can benchmark functions from it.
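As a sketch of what this looks like in practice: a criterion benchmark needs an entry in Cargo.toml that turns off Cargo's built-in benchmark harness. The file name `fibonacci_bench` and the version number below are assumptions for illustration; check the criterion documentation for the current version.

```toml
# Cargo.toml (fragment)
[dev-dependencies]
criterion = "0.5"          # assumed version, use the latest one

[[bench]]
name = "fibonacci_bench"   # hypothetical file: benches/fibonacci_bench.rs
harness = false            # let criterion provide its own main function
```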

Profiling

Profiling your code is, together with benchmarking, one of the most important tools for improving the performance of your code. In general, profiling will show you where in your program you spend the most time. However, there are also more specialized profiling tools like cachegrind, which can tell you about cache hits among other things. Some of these are part of the valgrind suite. You can find a plethora of profilers in the Rust perf book.

Profiling is usually a two-step process where you first collect data and analyse it afterwards. For the data collection it is beneficial to capture program debug information; however, this is disabled by default for Rust's release profile. To enable it, and thus capture more useful profiling data, add the following to your Cargo.toml:

[profile.release]

debug = true

Afterwards you can either use the command line perf tool or your IDE's integration. CLion in particular has great built-in support for profiling.

perf install guide

We would like to provide an install guide, but this is different for every Linux distro.

It's probably faster to Google "How to install perf for (your distro)" than to ask the TAs.

If you are using the command line, you can run the following commands to profile your application:

cargo build --release

perf record -F99 --call-graph dwarf -- ./target/release/binary

Afterwards you can view the generated report in a TUI (Text-based User Interface) using (press '?' for keybinds):

perf report --hierarchy

Using this data you can determine where your program spends most of its time. You can then look at those specific parts of your code and see what you can improve there.

You can also try to play around with this on your Grep implementation of assignment one to get a feel for how it works (e.g. try adding a sleep somewhere), before running it on bigger projects like that of assignment 2.

Important for users of WSL1: perf does not work properly on WSL1. You can check whether you run WSL1 by executing wsl --list --verbose. If this command fails, you are for sure running WSL1. Otherwise, check the version column: if it says 1 for the distro you are using, you can upgrade it using wsl --set-version (distro name) 2.

For some people it might be easier to use cargo flamegraph, which you can install using cargo install flamegraph. This will create an SVG file in the root folder which contains a nice timing graph of your executable.

What does performance mean?

So far, we've only talked about measuring the time performance of a program. This is of course not the only measure of performance. For example, you could also measure the performance of a program in memory usage. In these lecture notes we will mostly talk about performance related to execution speed, but this is always a tradeoff. Some techniques which increase execution speed also increase program size. Conversely, decreasing the memory usage of a program can sometimes yield speed increases. We will briefly talk about this throughout these notes; for example, in the section about optimization levels we will also mention optimizing for code size.

Making code faster by being clever

The first way to improve performance of code, which is also often the best way, is to be smarter (or rely on the smarts of others). For example, if you "simply" invent a new algorithm that scales linearly instead of exponentially, this will be a massive performance boon. In most cases, this is the best way to improve the speed of programs, especially when the program has to scale to very large input sizes. Some people have been clever and have invented all kinds of ways to reduce the complexity of certain pieces of code. However, we will not talk too much about this here. Algorithmics is a very interesting subject, and we have many dedicated courses on it here at the TU Delft.

In these notes, we will instead talk about more general techniques you can apply to improve the performance of programs. Techniques where you don't necessarily need to know all the fine details of the program. So, let us say we have a function that is very slow. What can we do to make it faster? We will talk about two broad categories of techniques. Either:

- You make the function itself faster

- You try to call the function (or nested functions) less often. If the program does less work, it will often also take less time.

Calling it less

Let's start with the second category, because that list is slightly shorter.

Memoization

Let's look at the Fibonacci example again. You may remember it from the exercises from Software Fundamentals. If we want to find the 100th Fibonacci number, it will probably take longer than your life to terminate. That's because to calculate fibonacci(20), we will first calculate fibonacci(19) and fibonacci(18). But calculating fibonacci(19) will again calculate fibonacci(18). Already, we're duplicating work. Our previous fibonacci(20) example will call fibonacci(0) a total of 4181 times.

Of course, we could rewrite the algorithm with a loop. But some people really like the recursive definition of the Fibonacci function because it's quite concise. In that case, memoization can help. Memoization is basically just a cache. Every time a function is called with a certain parameter, an entry is made in a hashmap. The key of the entry is the parameters of the function, and the value is the result of the function call. If the function is then again called with the same parameters, instead of running the function again, the memoized result is simply returned. For the fibonacci function that looks something like this:

    use std::collections::HashMap;

    fn fibonacci(n: u64, cache: &mut HashMap<u64, u128>) -> u128 {
        // let's see. Did we already calculate this value?
        if let Some(&result) = cache.get(&n) {
            // if so, return it
            return result;
        }

        // otherwise, let's calculate it now
        let result = match n {
            0 => 0,
            1 => 1,
            n => fibonacci(n-1, cache) + fibonacci(n-2, cache),
        };

        // and put it in the cache for later use
        cache.insert(n, result);
        result
    }

    fn main() {
        let mut cache = HashMap::new();
        println!("{}", fibonacci(100, &mut cache));
    }

(Run it! You'll see it calculates fibonacci(100) in no time. Note that I did change the result to a u128, because fibonacci(100) does not fit in 64 bits.)
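For comparison, the loop-based rewrite mentioned earlier could look roughly like this. It is a minimal sketch that uses the same convention as the memoized version (fibonacci(0) = 0); the name `fibonacci_loop` is made up for illustration, and the additions overflow a u128 for n above 185.

```rust
/// Computes the n-th Fibonacci number with a loop instead of recursion.
/// No cache is needed because every value is computed exactly once.
fn fibonacci_loop(n: u64) -> u128 {
    let (mut previous, mut current) = (0u128, 1u128);
    for _ in 0..n {
        let next = previous + current;
        previous = current;
        current = next;
    }
    previous
}

fn main() {
    println!("{}", fibonacci_loop(100));
}
```

The loop does n additions in total, whereas memoization keeps the recursive definition and pays for a hashmap lookup per call instead.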

Actually, this pattern is so common that there are standard tools to do it. For example, the memoize crate allows you to simply annotate a function. The code to do the caching is then automatically generated.

    use memoize::memoize;

    #[memoize]
    fn fibonacci(n: u64) -> u128 {
        match n {
            0 => 1,
            1 => 1,
            n => fibonacci(n-1) + fibonacci(n-2),
        }
    }

    fn main() {
        println!("{}", fibonacci(100));
    }

One thing you may have observed is that this cache should maybe have a limit. Otherwise, if you call the function often enough, the program may start to use absurd amounts of memory. To avoid this, you may choose to use an eviction strategy.

The memoize crate supports this out of the box: you can specify a maximum capacity or a time to live (for example, an entry is deleted after 2 seconds in the cache). Look at their documentation, it's very useful.

Lazy evaluation and iterators

Iterators in Rust are lazily evaluated. This means they don't perform calculations unless absolutely necessary. For example if we take the following code:

    fn main() {
        let result = || {
            vec![1, 2, 3, 4].into_iter().map(|i: i32| i.pow(2)).nth(2)
        };

        println!("{}", result().unwrap());
    }

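Rust only runs the expensive pow operation on the elements that are actually pulled through the iterator, and nothing is computed at all until .nth(_) asks for a value. Here .nth(2) consumes the first three elements, so pow runs for 1, 2 and 3 (the first two results are thrown away) and never for 4. If you chain many iterator calls after each other, the compiler can also fuse them into a single loop for better performance. You can find the available methods on iterators in the Rust documentation.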
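To see exactly how much work a lazy chain does, you can count how often the closure runs. The sketch below uses made-up function names and a `Cell` as a counter; it compares mapping before `nth(2)` with skipping before mapping, which avoids the work for the skipped elements entirely.

```rust
use std::cell::Cell;

/// Maps first, then takes the third element.
/// Returns the value and how often the closure ran.
fn map_then_nth() -> (Option<i32>, usize) {
    let calls = Cell::new(0);
    let value = vec![1, 2, 3, 4]
        .into_iter()
        .map(|i: i32| {
            calls.set(calls.get() + 1);
            i.pow(2)
        })
        .nth(2);
    (value, calls.get())
}

/// Skips two elements first, then maps only the element we want.
fn skip_then_map() -> (Option<i32>, usize) {
    let calls = Cell::new(0);
    let value = vec![1, 2, 3, 4]
        .into_iter()
        .skip(2)
        .map(|i: i32| {
            calls.set(calls.get() + 1);
            i.pow(2)
        })
        .next();
    (value, calls.get())
}

fn main() {
    println!("{:?}", map_then_nth());  // closure runs for 1, 2 and 3
    println!("{:?}", skip_then_map()); // closure runs for 3 only
}
```

In both cases the fourth element is never touched, which is the laziness at work.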

I/O buffering

Programs communicate with the kernel through system calls. They use them to read from and write to files, print to the screen, and send and receive messages over a network. However, each system call has considerable overhead. An important technique that can help reduce this overhead is called I/O buffering.

Actually, when you are printing, you are already using I/O buffering. When you print a string in Rust or C, the characters are not individually written to the screen. Instead, nothing is shown at all when you print. Only when you print a newline character, will your program actually output what you printed previously, all at once.

When you use files, you can make this explicit. For example, let's say we want to process a file 5 bytes at a time. We could write:

    use std::fs::File;
    use std::io::Read;

    fn main() {
        let mut file = File::open("very_large_file.txt").expect("the file to open correctly");
        let mut buf = [0; 5];

        while file.read_exact(&mut buf).is_ok() {
            // process the buffer
        }
    }

However, every time we run read_exact, we make (one or more) system calls. But with a very simple change, we can reduce that a lot:

    use std::fs::File;
    use std::io::{BufReader, Read};

    fn main() {
        let file = File::open("very_large_file.txt").expect("the file to open correctly");
        let mut reader = BufReader::new(file);
        let mut buf = [0; 5];

        while reader.read_exact(&mut buf).is_ok() {
            // process the buffer
        }
    }

Only one line was added, and yet this code makes a lot fewer system calls. When read_exact is run and the buffer is empty, a system call is made to read as much as fits into the buffer. Every following call to read_exact then uses up the buffer instead, until the buffer is empty again.
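You can make the difference visible without a real file by counting how often `read` reaches the underlying source. The sketch below is an illustration under assumptions: `CountingReader` and `count_reads` are made-up names, and an in-memory byte slice stands in for the file, so each counted call represents what would be a system call on a real file.

```rust
use std::io::{self, BufReader, Read};

/// Hypothetical wrapper that counts calls to `read` on the wrapped source.
struct CountingReader<R> {
    inner: R,
    calls: usize,
}

impl<R: Read> Read for CountingReader<R> {
    fn read(&mut self, buf: &mut [u8]) -> io::Result<usize> {
        self.calls += 1;
        self.inner.read(buf)
    }
}

/// Reads `data` five bytes at a time and returns how many `read` calls
/// reached the underlying source.
fn count_reads(data: &[u8], buffered: bool) -> usize {
    let source = CountingReader { inner: data, calls: 0 };
    let mut chunk = [0u8; 5];
    if buffered {
        let mut reader = BufReader::new(source);
        while reader.read_exact(&mut chunk).is_ok() {}
        reader.get_ref().calls
    } else {
        let mut reader = source;
        while reader.read_exact(&mut chunk).is_ok() {}
        reader.calls
    }
}

fn main() {
    let data = vec![0u8; 50_000];
    println!("unbuffered: {} reads", count_reads(&data, false));
    println!("buffered:   {} reads", count_reads(&data, true));
}
```

The unbuffered variant needs at least one read per five-byte chunk, while the buffered one only touches the source when its internal buffer runs empty.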

Lock contention

When lots of different threads are sharing a Mutex and locking it a lot, most threads spend most of their time waiting on the mutex lock rather than actually performing useful operations. This is called lock contention.

There are various ways to prevent or reduce lock contention.

The obvious, but still important, way is to really check if you need a Mutex. If you don't need it and can get away with, for example, just an Arc<T>, that immediately improves performance.

Another way to reduce lock contention is to ensure you hold on to a lock for the minimum amount of time required. Important to consider here is that Rust unlocks the Mutex as soon as its guard gets dropped, which is usually at the end of the scope where the guard is created. If you want to make really sure the Mutex gets unlocked, you can manually drop the guard using drop(guard).

Consider the following example:

    use std::thread::scope;
    use std::sync::{Arc, Mutex};
    use std::time::{Instant, Duration};

    fn expensive_operation(v: i32) -> i32 {
        std::thread::sleep(Duration::from_secs(1));
        v+1
    }

    fn main() {
        let now = Instant::now();
        let result = Arc::new(Mutex::new(0));

        scope(|s| {
            for i in 0..3 {
                let result = result.clone();
                s.spawn(move || {
                    let mut guard = result.lock().unwrap();
                    *guard += expensive_operation(i);
                });
            }
        });

        println!("Elapsed: {:.2?}", now.elapsed());
    }

Here we take the lock on result before we run expensive_operation, meaning that all other threads are waiting for the operation to finish rather than running their own operations. If we instead change the code to something like:

    use std::thread::scope;
    use std::sync::{Arc, Mutex};
    use std::time::{Instant, Duration};

    fn expensive_operation(v: i32) -> i32 {
        std::thread::sleep(Duration::from_secs(1));
        v+1
    }

    fn main() {
        let now = Instant::now();
        let result = Arc::new(Mutex::new(0));

        scope(|s| {
            for i in 0..3 {
                let result = result.clone();
                s.spawn(move || {
                    let temp_result = expensive_operation(i);
                    *result.lock().unwrap() += temp_result;
                });
            }
        });

        println!("Elapsed: {:.2?}", now.elapsed());
    }

The lock will only be held for the minimum time required, so the three expensive operations can run in parallel and the program finishes faster.

Moving operations outside a loop

It should be quite obvious that code inside a loop is run more often than code outside a loop. That means that any operation you move out of a loop saves you time. Let's look at an example:

    fn example(a: usize, b: u64) {
        for _ in 0..a {
            some_other_function(b + 3)
        }
    }

The parameter to the other function is the same every single repetition. Thus, we could do the following to make our code faster:

    fn example(a: usize, b: u64) {
        let c = b + 3;
        for _ in 0..a {
            some_other_function(c)
        }
    }

Actually, in a lot of cases, even non-trivial ones, the compiler is more than smart enough to do this for you. The assembly generated for the first example shows this:

    example::main:
            push    rax
            test    rdi, rdi
            je      .LBB0_3
            add     rsi, 3                  ; here we add 3
            mov     rax, rsp
    .LBB0_2:                                ; this is where the loop starts
            mov     qword ptr [rsp], rsi
            dec     rdi
            jne     .LBB0_2
    .LBB0_3:                                ; this is where the loop ends
            pop     rax
            ret

However, in very complex cases, the compiler cannot always figure this out.

To easily see what optimizations the Rust compiler makes, I highly recommend the Compiler Explorer. I used it to generate the examples above. Here's a link to my setup for that. Try to make some changes, and see if the compiler still figures it out!
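A case the compiler is less likely to handle for you is loop-invariant work that allocates. The following sketch uses made-up function names to show the same idea as the `b + 3` example on a slightly less trivial operation: lowercasing the search term once instead of once per line.

```rust
/// Counts the lines that contain `needle`, ignoring case.
/// The needle is lowercased again in every iteration.
fn count_matches_in_loop(lines: &[&str], needle: &str) -> usize {
    let mut count = 0;
    for line in lines {
        if line.to_lowercase().contains(&needle.to_lowercase()) {
            count += 1;
        }
    }
    count
}

/// Same result, but the loop-invariant work is moved outside the loop.
fn count_matches_hoisted(lines: &[&str], needle: &str) -> usize {
    let needle = needle.to_lowercase(); // computed (and allocated) once
    let mut count = 0;
    for line in lines {
        if line.to_lowercase().contains(&needle) {
            count += 1;
        }
    }
    count
}

fn main() {
    let lines = ["Hello World", "nothing here", "WORLD"];
    println!("{}", count_matches_in_loop(&lines, "world"));
    println!("{}", count_matches_hoisted(&lines, "world"));
}
```

Whether this matters in your program is something a benchmark should confirm.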

Static allocation / reducing allocation

Dynamic memory allocation takes time. Every time malloc is called, the program spends a few nanoseconds handling that request (this blog post has got some very cool benchmarks!).

In Rust, malloc can sometimes be a bit hidden. As a rule of thumb, malloc is used when performing any of the following operations:

- Vec::new(), HashMap::new(), String::new() (strictly speaking, the allocation happens on the first insertion rather than in new() itself), and sometimes when inserting into any of these

- Box::new()

- Cloning any of the above

- Arc::new() or Rc::new() (note: cloning does not perform malloc for these types, which makes them extra useful)

Using dynamic memory allocation is sometimes unavoidable, and often very convenient. But when you are trying to optimize code for performance, it is useful to look at what allocations can be avoided, and which can be moved out of loops (and maybe can be reused between loop iterations). The best-case scenario is of course when you can remove allocations altogether. For example, by only using fixed-size arrays, and references to values on the stack.
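As an illustration of reusing an allocation between loop iterations, the sketch below reads lines into a single `String` that is cleared instead of recreated. The function name is made up; the standard library calls (`BufRead::read_line`, `String::clear`) are real, and `clear` keeps the capacity that was already allocated.

```rust
use std::io::{BufRead, Cursor};

/// Sums the lengths of all lines (ignoring trailing whitespace),
/// reusing one String for every line.
fn total_line_length<R: BufRead>(mut reader: R) -> usize {
    let mut line = String::new(); // grows a few times at most, then is reused
    let mut total = 0;
    loop {
        line.clear(); // drops the contents but keeps the capacity
        match reader.read_line(&mut line) {
            // end of input, or an error (ignored in this sketch)
            Ok(0) | Err(_) => break,
            Ok(_) => total += line.trim_end().len(),
        }
    }
    total
}

fn main() {
    let input = Cursor::new("first line\nsecond\nthird\n");
    println!("{}", total_line_length(input));
}
```

By contrast, iterating with `reader.lines()` is more convenient but hands you a freshly allocated `String` for every line.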

Different allocations

Next week, when we talk about embedded Rust, you will see that we start using a custom memory allocator. The reason we will do that there, is that we will be programming for an environment where there is no Operating System providing an allocator. However, there are faster allocators out there than Rust's default. You can experiment with using a different one, and see how the performance changes.
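The hook for swapping allocators is the `#[global_allocator]` attribute. As a minimal sketch, the allocator below simply forwards to the system allocator and counts requests, which is also a handy way to find hidden allocations. `CountingAllocator` and `allocation_count` are made-up names; a faster third-party allocator would be plugged in at the same place.

```rust
use std::alloc::{GlobalAlloc, Layout, System};
use std::hint::black_box;
use std::sync::atomic::{AtomicUsize, Ordering};

static ALLOCATIONS: AtomicUsize = AtomicUsize::new(0);

/// Hypothetical allocator: forwards to the system allocator and counts
/// how many allocations were requested.
struct CountingAllocator;

unsafe impl GlobalAlloc for CountingAllocator {
    unsafe fn alloc(&self, layout: Layout) -> *mut u8 {
        ALLOCATIONS.fetch_add(1, Ordering::Relaxed);
        unsafe { System.alloc(layout) }
    }

    unsafe fn dealloc(&self, ptr: *mut u8, layout: Layout) {
        unsafe { System.dealloc(ptr, layout) }
    }
}

// Every heap allocation in the program now goes through CountingAllocator.
#[global_allocator]
static GLOBAL: CountingAllocator = CountingAllocator;

fn allocation_count() -> usize {
    ALLOCATIONS.load(Ordering::Relaxed)
}

fn main() {
    let before = allocation_count();
    let numbers: Vec<u64> = Vec::with_capacity(1024);
    black_box(&numbers); // keep the optimizer from removing the allocation
    let after = allocation_count();
    println!("allocations for the Vec: {}", after - before);
}
```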

Arena allocators

Another way to reduce allocations is using an arena allocator. An arena allocator is a very fast memory allocator which often does not support freeing individual allocations. You can only allocate more and more, until you delete the entire arena all at once. For example, when you are rendering a video, you could imagine having an arena for every frame that is rendered. All the memory is allocated at the start, and then that frame renders, storing intermediate results in the arena. After rendering, only the final frame/image is left, and the entire arena can be deallocated.

typed_arena is a crate which provides an arena allocator for homogeneous types, while bumpalo allows storage of differently typed values inside the arena.
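To make the idea concrete, here is a deliberately simplified arena for a single type, built on a `Vec` and handing out indices as handles. This is only a sketch of the concept under that simplification; it is not how typed_arena or bumpalo are implemented, and the names `Arena` and `Id` are made up.

```rust
/// A simplified arena: values can only be added, never freed one by one.
/// Dropping the arena releases everything at once.
struct Arena<T> {
    items: Vec<T>,
}

/// Handle to a value stored in an `Arena` (just an index).
#[derive(Clone, Copy, Debug, PartialEq)]
struct Id(usize);

impl<T> Arena<T> {
    /// Reserves room for `capacity` values up front.
    fn with_capacity(capacity: usize) -> Self {
        Arena { items: Vec::with_capacity(capacity) }
    }

    /// Stores a value and returns a handle to it.
    fn alloc(&mut self, value: T) -> Id {
        self.items.push(value);
        Id(self.items.len() - 1)
    }

    fn get(&self, id: Id) -> &T {
        &self.items[id.0]
    }
}

fn main() {
    // one arena per "frame": reserve up front, fill, drop as a whole
    let mut frame = Arena::with_capacity(1024);
    let a = frame.alloc(1.5_f32);
    let b = frame.alloc(2.5_f32);
    println!("{}", frame.get(a) + frame.get(b));
} // `frame` is dropped here, releasing all values together
```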

Making existing programs faster

Even when applying all the techniques to write faster code we discussed so far, there is a limit. That's because you are dependent on a compiler compiling your code into machine code, and your CPU executing that machine code quickly. Not all programs have the same needs, which means that you can gain a lot of performance by configuring the compiler correctly and making sure you take full advantage of the hardware the code is run on.

Inlining

Inlining is a compiler optimization which literally puts the code of a function at its call site. This eliminates function call overhead. On the flip side, it can make your program substantially larger, which may also cause more cache misses.

You can hint the Rust compiler to inline a function by adding the #[inline] attribute on top of a function.

Cachegrind can be a useful tool for determining what to inline.

The perf book also has a chapter on inlining with a more detailed explanation.
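A small sketch of the inlining attributes in use. The function names are made up; `#[inline]` and `#[inline(never)]` are standard attributes, and both are hints whose effect you should verify with a benchmark or by looking at the generated assembly.

```rust
/// Small and called often: a typical candidate for an inlining hint.
#[inline]
fn square(x: u64) -> u64 {
    x * x
}

/// Rarely used code can be kept out of line to limit code size.
#[inline(never)]
fn report_error(code: u32) -> String {
    format!("something went wrong (code {code})")
}

fn sum_of_squares(values: &[u64]) -> u64 {
    values.iter().map(|&v| square(v)).sum()
}

fn main() {
    println!("{}", sum_of_squares(&[1, 2, 3]));
    println!("{}", report_error(7));
}
```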

Optimization level

Most obvious of all, is the optimization level. By default, Rust compiles your code in optimization-level 0. That's the worst there is. For development, this is great! However, when actually running a program, using a higher optimization level is well worth the increased compilation time. There are 6 optimization settings:

- 0, 1, 2, 3: don't optimize, optimize a bit, optimize a bit more, and optimize a lot respectively

- "s", "z": optimize for size (a bit and a lot respectively)

To get the best performance, often, opt-level 3 is best. However, do try them all and see the difference for yourself.

To specify an optimization level, you have to add profiles to your Cargo.toml file. For example:

[profile.dev]

opt-level = 1

[profile.release]

opt-level = 3 # 3 is the default for release

This says that if the release profile is selected, the optimization level should be set to 3. The default build profile is "dev", which is what is used when you run cargo run. To select the release profile, you can run cargo run --release. A separate profile, bench, is used while benchmarking, which inherits from the release profile.

To see what the optimization level does, let's look at an example in godbolt. Here, I created a simple function which copies a slice, and show how it compiles with opt-level 0, opt-level 3 and opt-level "z". Of course, the one with "z" is by far the smallest. The opt-level 0 version still has a lot of functions in it from the standard library, which have all vanished in the opt-level 3 version. In fact, the opt-level 3 version does something very fancy: SIMD. Under .LBB0_4, you see that some very weird instructions are used that operate on very weird registers like xmm0. Those are the 128-bit registers.

Target CPU

Now that we're talking about compiler options, it's worth mentioning the target CPU option. Even opt-level 3 will generate code that is compatible with a lot of different CPUs. However, sometimes you know exactly on what CPU the code is going to run. With the target-cpu flag, you can specify exactly what model of CPU the code should be compiled for. You can set it to native to compile for the same CPU as the compiler currently runs on.

Concretely, not all x86 CPUs support the 256 or even 512 bit registers. However, especially for the code from the previous godbolt example, these registers can help a lot (since they can copy more data per instruction). Here you can clearly see the difference between the code generated with a target cpu set, and not set.
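One way to set the target CPU for a whole project is through Cargo's configuration file, as sketched below. Setting the `RUSTFLAGS` environment variable to `-C target-cpu=native` for a single build has the same effect. Keep in mind that a binary built this way may not run on older CPUs.

```toml
# .cargo/config.toml (in the project root)
[build]
rustflags = ["-C", "target-cpu=native"]
```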

Rust even supports running different functions depending on what hardware is available. To do this you can use the target_feature macros, as shown here.
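A minimal sketch of such runtime selection, assuming an x86 machine for the fast path: the `is_x86_feature_detected!` macro checks the CPU at runtime, and `#[target_feature(enable = "avx2")]` lets the compiler use AVX2 instructions inside one function only. The function names are made up, and both variants share the same body; the compiler may vectorize the AVX2 one differently.

```rust
/// Portable fallback that works on every CPU.
fn sum_scalar(data: &[u32]) -> u32 {
    data.iter().fold(0u32, |acc, &x| acc.wrapping_add(x))
}

/// Same computation, but compiled with AVX2 enabled for this function.
/// Unsafe to call unless the CPU really supports AVX2.
#[cfg(any(target_arch = "x86", target_arch = "x86_64"))]
#[target_feature(enable = "avx2")]
unsafe fn sum_avx2(data: &[u32]) -> u32 {
    data.iter().fold(0u32, |acc, &x| acc.wrapping_add(x))
}

/// Picks the best available implementation at runtime.
fn sum(data: &[u32]) -> u32 {
    #[cfg(any(target_arch = "x86", target_arch = "x86_64"))]
    {
        if is_x86_feature_detected!("avx2") {
            // SAFETY: we just checked that AVX2 is available
            return unsafe { sum_avx2(data) };
        }
    }
    sum_scalar(data)
}

fn main() {
    let data: Vec<u32> = (1..=100).collect();
    println!("{}", sum(&data));
}
```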

Link-time optimization

When the Rust compiler compiles your code, every crate forms its own compilation unit. That means that optimizations are usually performed only within a single crate. This also means that when you use many libraries in your program, some optimizations may not be applied. For example, functions from other crates usually cannot be inlined unless they are generic or marked #[inline]. You can change this with the "lto" flag in the compilation profile. With LTO, more optimizations are performed after compiling individual crates, when they are linked together into one binary.
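In Cargo.toml this could look like the fragment below. The values shown are one reasonable choice rather than a recommendation; expect noticeably longer compile times, and measure whether it pays off for your program.

```toml
[profile.release]
lto = "fat"         # or "thin" for a cheaper variant
codegen-units = 1   # optional: fewer units give the optimizer a wider view
```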

Branch prediction

CPUs use branch prediction to determine which branch in a conditional (if, match, etc.) statement is most likely to be taken. The CPU will usually start pre-emptively executing the branch it deems most likely. However, if the CPU guesses the wrong branch, this incurs a penalty or slowdown. In Rust, you can hint to the compiler which functions are unlikely to be executed (so that branches calling such a function are also marked as cold/unlikely) using the #[cold] attribute; also see the Rust docs.

    #[cold]
    fn rarely_executed_function() { }

PGO and Bolt

PGO or Profile Guided Optimization is a process where you generate an execution trace, and then feed that information to the compiler to inform it what code is actually being executed and how much. The compiler can then use this information to optimize the program for a specific workload.

For Rust we can use cargo-pgo to achieve this. Research shows that this can achieve performance improvements of up to 20.4% on top of already optimized programs.

The general workflow for building optimized binaries is something like this:

- Build binary with instrumentation
  - Add extra information to your program to facilitate profiling
- Gather performance profiles
  - Run a representative workload while gathering data
  - Run it for as long as possible, at least a minute
- Build an optimized binary using generated profiles
  - Use the data collected in the previous step to inform the compiler
  - The optimized binary is only optimized for the profiling data and thus specific workloads that are given. The program might perform worse on substantially different workloads.

Zero Cost Abstractions

Rust has many higher level features like closures, collections, iterators, traits, and generics. But these are made in such a way that they try to obey the following principles:

- What you don’t use, you don’t pay for

- What you do use, you couldn’t hand code any better.

Rust calls this a "Zero Cost Abstraction", meaning you should not need to worry too much about the performance penalty these abstractions incur.

Static and Dynamic Dispatch

When code is written to be abstracted over the kind of data it gets, there are two ways to deal with this in Rust.

The first way is to use generics, as was explained in Software Fundamentals. Rust internally does this using something called monomorphization, which effectively makes a different version of your function for every type it is actually called with. This does of course mean that your binary size will be larger, but you won't pay the overhead it costs to determine this at runtime.

The second way to do this is through something called trait objects. This defers the binding of methods until runtime, through something called virtual dispatch. This gives more freedom for writing your code, as the compiler does not need to know at compile time all the types you will call this method with, like it does with generics. However, you do then incur a runtime cost for using this.
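The two approaches side by side, as a small sketch with made-up types. The generic function is monomorphized per concrete type and requires all elements to have the same type, while the trait-object version looks up `area` through a vtable at runtime and can mix types in one collection.

```rust
trait Shape {
    fn area(&self) -> f64;
}

struct Square(f64);
struct Rectangle(f64, f64);

impl Shape for Square {
    fn area(&self) -> f64 {
        self.0 * self.0
    }
}

impl Shape for Rectangle {
    fn area(&self) -> f64 {
        self.0 * self.1
    }
}

/// Static dispatch: one copy of this function is generated per type `S`.
fn total_area_static<S: Shape>(shapes: &[S]) -> f64 {
    shapes.iter().map(|shape| shape.area()).sum()
}

/// Dynamic dispatch: a single function, `area` is resolved at runtime.
fn total_area_dynamic(shapes: &[Box<dyn Shape>]) -> f64 {
    shapes.iter().map(|shape| shape.area()).sum()
}

fn main() {
    let squares = [Square(2.0), Square(3.0)];
    println!("{}", total_area_static(&squares));

    let mixed: Vec<Box<dyn Shape>> =
        vec![Box::new(Square(2.0)), Box::new(Rectangle(2.0, 3.0))];
    println!("{}", total_area_dynamic(&mixed));
}
```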

Zero-Copy Design

Rust makes it really easy to design programs to be "Zero-Copy". This means that the program continually reinterprets a set of data instead of copying and moving it around. An example you could think of is network packets. Network packets have various headers, like size, which determine what comes afterwards. A naive solution is just to read the headers and then copy the data out of the packet into a struct you've made beforehand. However, this can be very slow if you are receiving lots of data.

This is where you would want to design your program so that, instead of copying the data into a new struct, it has fields which are slices (pointers with a length) into the original memory, and populates those accordingly. Many popular libraries in the Rust ecosystem like Serde (the serialization/deserialization library) do exactly this. You can also do it more manually yourself, with the help of crates like Google's zerocopy.
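A hand-written sketch of the idea, using an invented packet format (one byte for the kind, one byte for the payload length, then the payload). The struct and function names are made up. The important part is the lifetime `'a`: the parsed `Packet` borrows from the receive buffer instead of owning a copy.

```rust
/// A view into a received packet. Nothing is copied: `payload` points
/// into the original buffer.
#[derive(Debug, PartialEq)]
struct Packet<'a> {
    kind: u8,
    payload: &'a [u8],
}

/// Parses one packet from the front of `buffer`. Returns the packet and
/// the remaining bytes, or None if the buffer is too short.
fn parse_packet(buffer: &[u8]) -> Option<(Packet<'_>, &[u8])> {
    let (&kind, rest) = buffer.split_first()?;
    let (&length, rest) = rest.split_first()?;
    if rest.len() < length as usize {
        return None;
    }
    let (payload, rest) = rest.split_at(length as usize);
    Some((Packet { kind, payload }, rest))
}

fn main() {
    let buffer = [7u8, 3, b'a', b'b', b'c', 9];
    if let Some((packet, rest)) = parse_packet(&buffer) {
        println!("{packet:?}, {} byte(s) left", rest.len());
    }
}
```

Because the packet only borrows the buffer, the compiler makes sure the buffer is not modified or freed while the packet is still in use.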

Memory Management

Lastly, something to consider when optimizing programs is memory management. The various ways you use or interact with memory can also affect performance.

A simple and obvious case is that cloning (or operations that invoke memcpy in general) is usually considered quite slow. It can therefore often be beneficial to work with references rather than cloning a lot.

Another thing to take into account is that a lot of performance optimizations are bad for memory usage, think of things like unrolling loops or the aforementioned monomorphization. If you are working on a memory constrained system it might therefore be wise to tune down some optimizations in favour of memory.

Conversely, a bigger memory footprint can also have a negative performance impact in general. This can be due to caches or misaligned accesses. On a modern computer there exist many caches of various sizes, and if a single function can neatly fit into one of those caches it can actually speed up computation-heavy programs significantly. This means that sometimes "lower" opt levels can actually be faster. It is therefore yet again very important to note that there is no 'silver bullet' with regards to optimization, and you should always verify that you are actually increasing the speed of your program with the help of benchmarks.

There are also some ways within Rust to try and reduce or improve your memory footprint. One of those ways is packed or bit-aligned structs, as shown in the Rust book. Another way to improve your memory footprint is to use constructs such as bitflags or bitvec, which allow you to pack data even more closely together by using the minimum number of bits you need.

For example, eight bools in Rust use eight bytes, while eight flags can fit within one u8.

    extern crate bitflags;
    use std::mem::size_of;
    use bitflags::bitflags;

    struct Bools {
        a: bool,
        b: bool,
        c: bool,
        d: bool,
        e: bool,
        f: bool,
        g: bool,
        h: bool,
    }

    bitflags! {
        struct Flags: u8 {
            const A = 0b00000001;
            const B = 0b00000010;
            const C = 0b00000100;
            const D = 0b00001000;
            const E = 0b00010000;
            const F = 0b00100000;
            const G = 0b01000000;
            const H = 0b10000000;
        }
    }

    fn main() {
        println!("Size of Bools Struct: {} byte(s)", size_of::<Bools>()); // 8
        println!("Size of Flags Struct: {} byte(s)", size_of::<Flags>()); // 1
    }

Some of these optimizations Rust can do itself, however. For example, when using a NonZero integer, Rust can use the unused zero value to store the state of an Option (either Some or None).

    use std::num::NonZeroU8;
    use std::mem::size_of;

    fn main() {
        type MaybeU8 = Option::<NonZeroU8>;
        println!("Size of MaybeU8: {} byte(s)", size_of::<MaybeU8>()); // 1
    }

In conclusion, these are all trade-offs to be made, and no single solution will fit all. So consider carefully which techniques you apply, and never apply them without checking their effect.