Software Systems

This is the homepage for the software systems course. You can find most course information here.

This course is part of the CESE masters programme. This course is a continuation of the curriculum of Software Fundamentals and will help prepare you for the Embedded Systems Lab.

For students who did not follow Software Fundamentals

Software Fundamentals is marked as a prerequisite for Software Systems. On the course's website you can find the slides of the course, and read back from the provided lecture notes. The reason it's marked as a prerequisite, is that we expect that you know the basics of the Rust programming language before taking Software Systems.

Software fundamentals was a course exclusively for students that didn't already have a background in computer science. That's because we expect that if you did have a computer science background, you can pick up on Rust's properties pretty quickly from our notes and the rust book. We advise you to read through this, and invest a few hours to get acquainted with Rust before taking Software Systems. What may help is that the assignments of Software Fundamentals are still available on weblab. We won't manually grade any more solutions, but many assignments are fully automatically graded.

Part 1

This course consists of two parts over 8 weeks. The first 4 weeks will have weekly lectures and assignments. In part one, we will have 4 weeks, mainly about three subjects:

- Programming with concurrency

- Measuring and optimizing programs for performance

- Programming for embedded systems (without the standard library). We will spend 2 weeks on this.

This part will be taught by Vivian Roest.

For this part we recommend the book Rust Atomics and Locks by Mara Bos, as further reading material.

Part 2

In part two, which is the rest of the course, we will look at another 3 subjects related to model-based software development. We will cover the following main subjects:

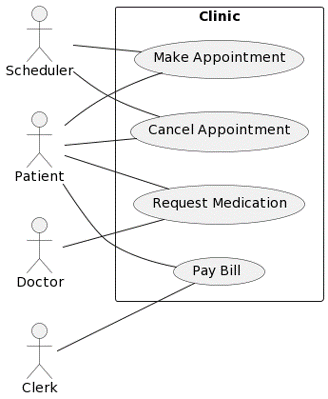

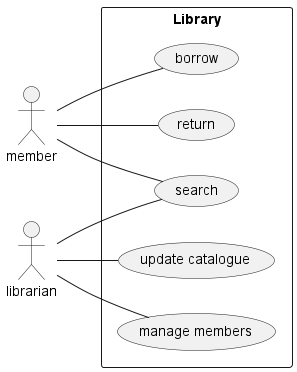

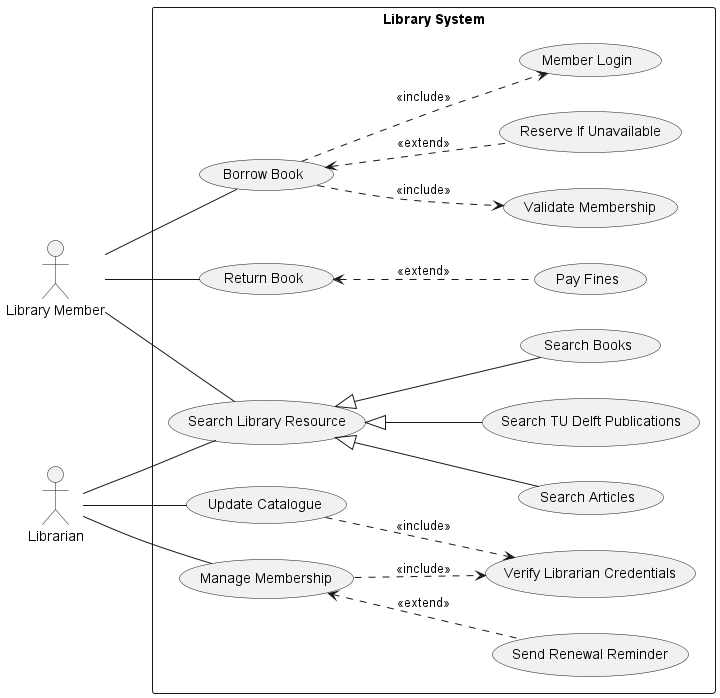

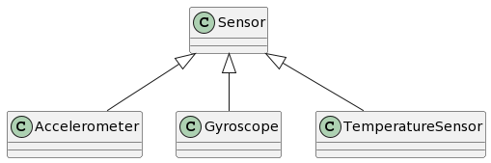

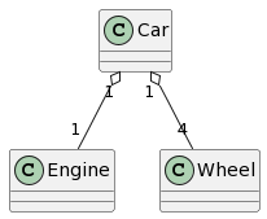

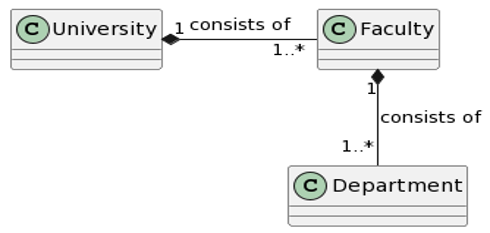

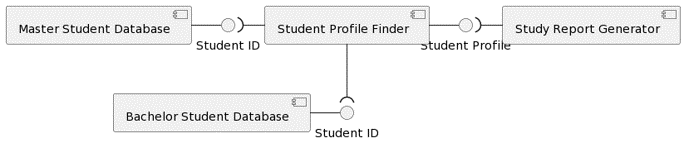

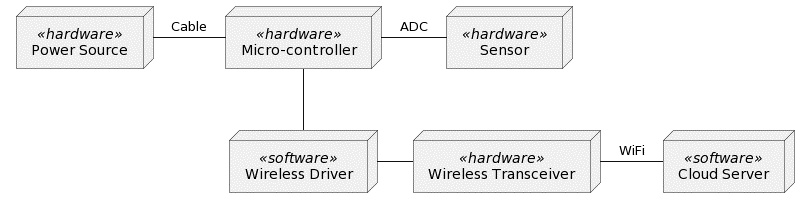

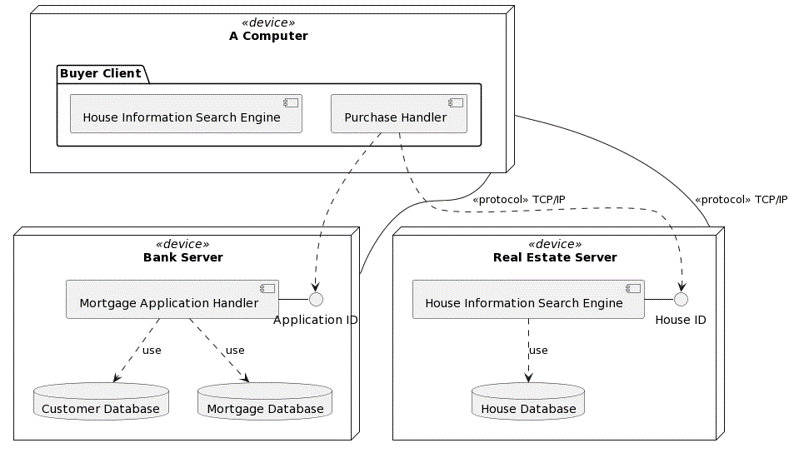

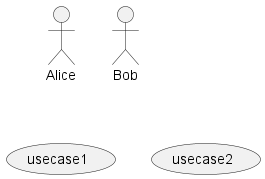

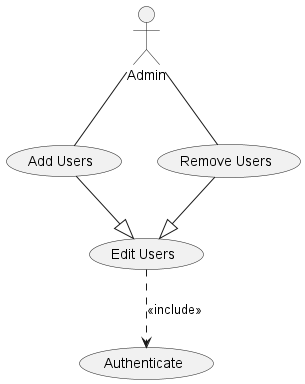

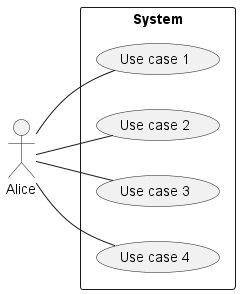

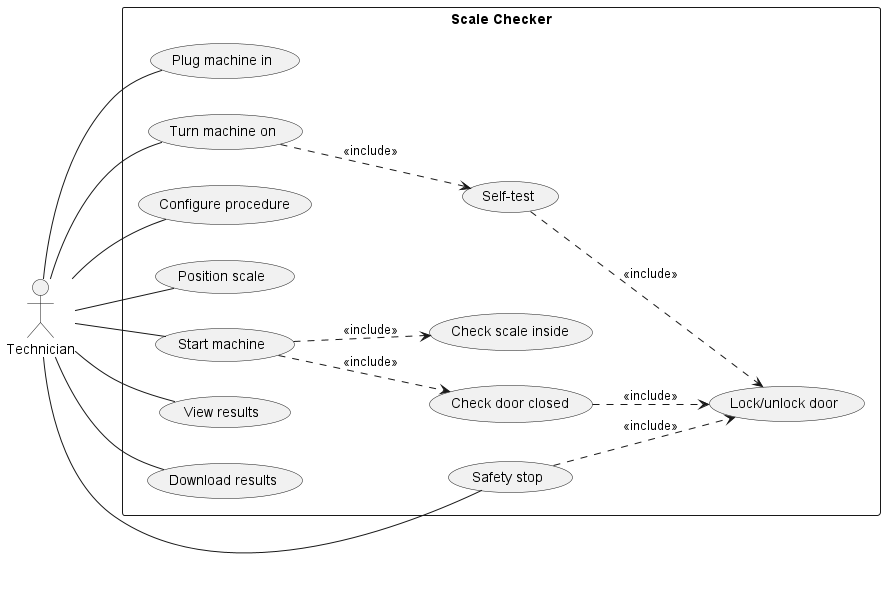

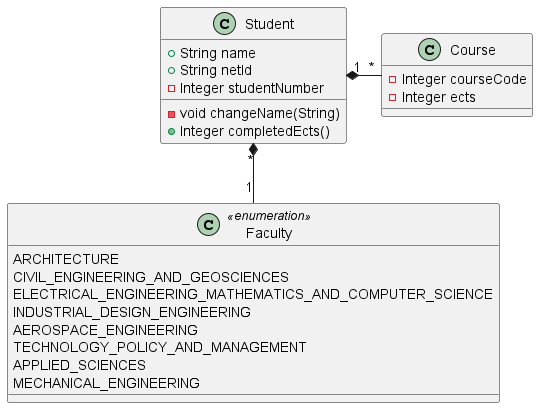

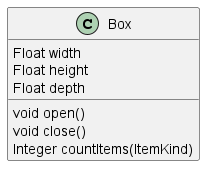

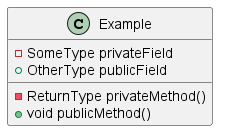

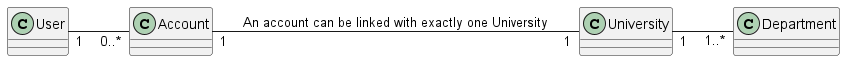

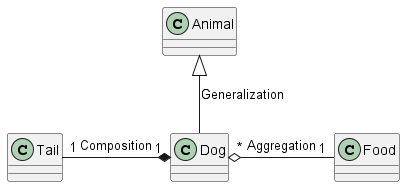

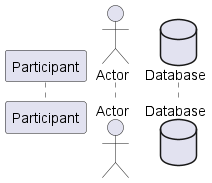

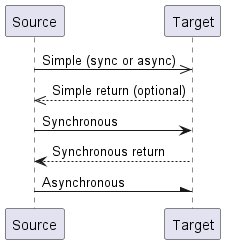

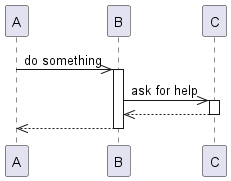

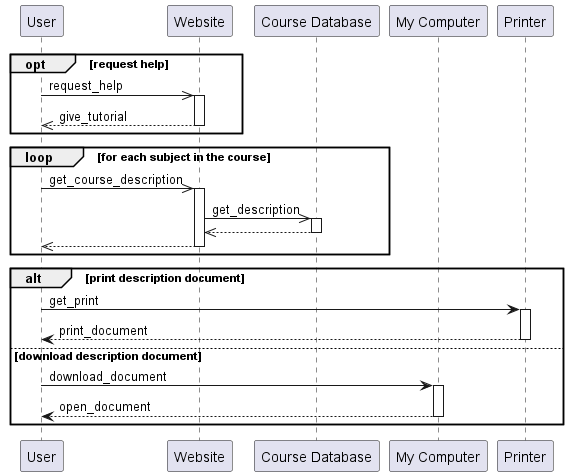

- UML, a standardized modelling language to visualize the design of software

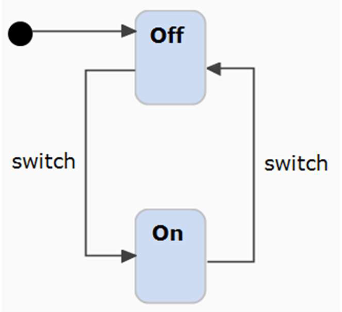

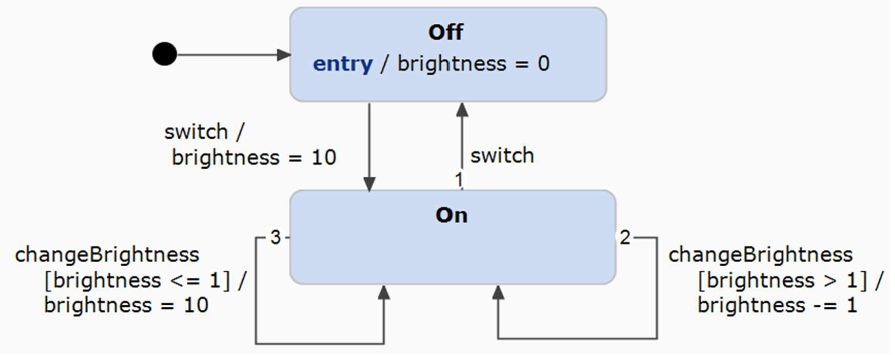

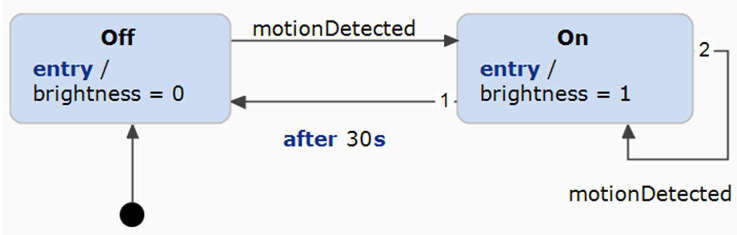

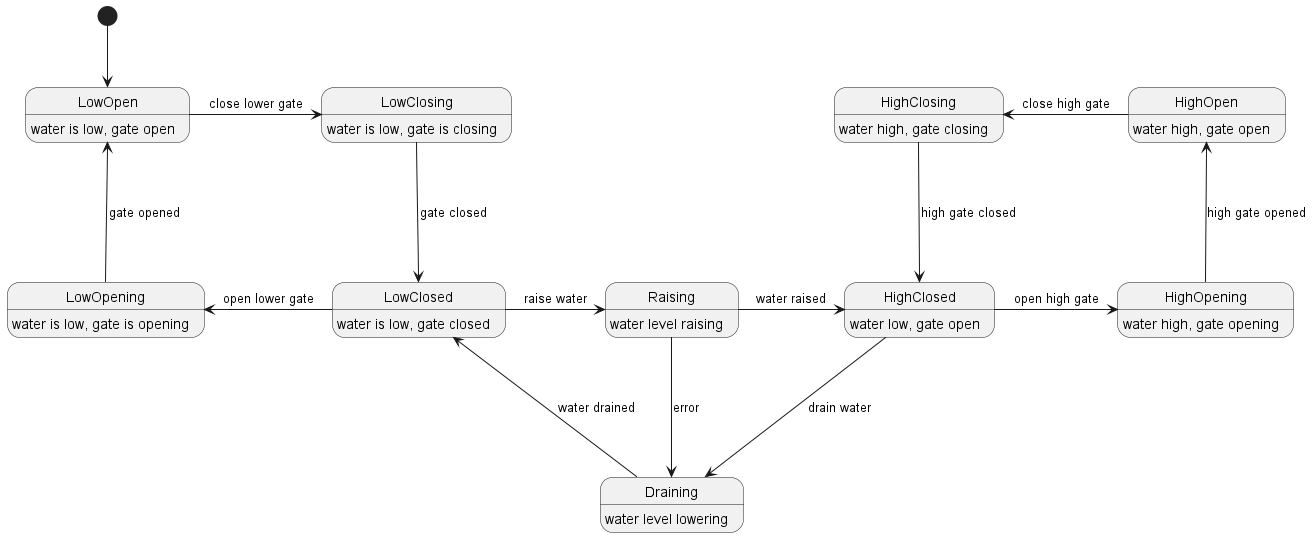

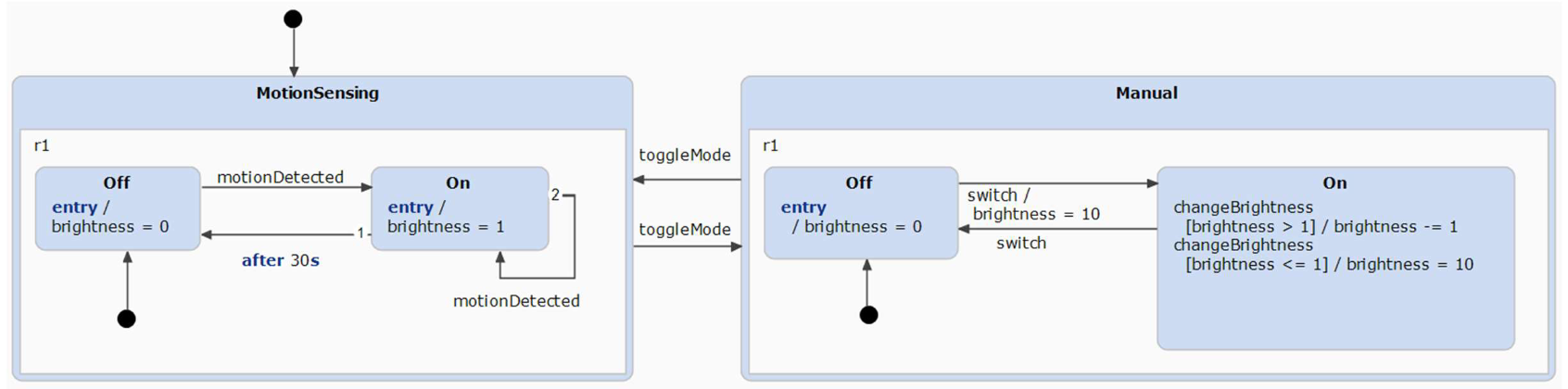

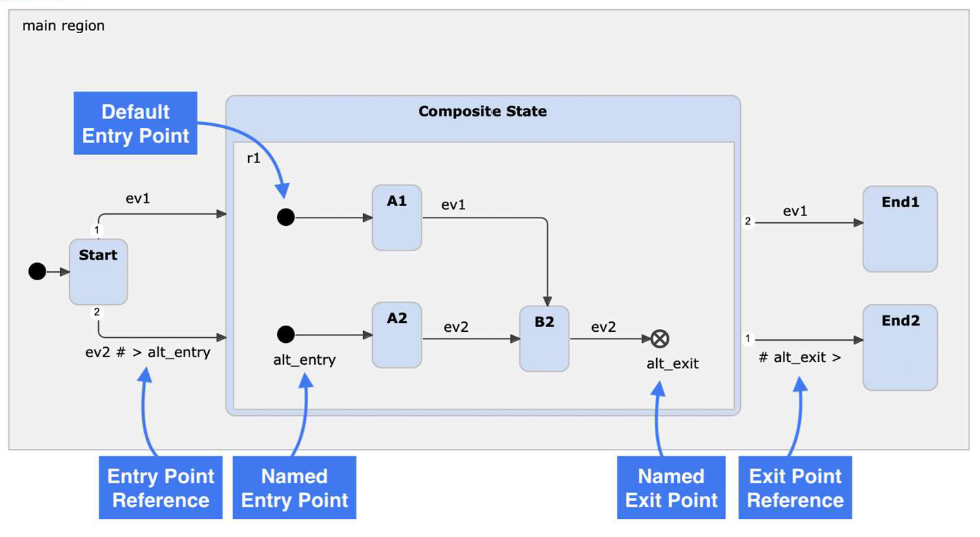

- Finite state machines.

- Domain specific languages and (rust) code generation based on them.

This second part will be taught by Rosilde Corvino and Guohao Lan

Deadlines

In both parts there will be exercises with roughly weekly deadlines. The first deadline for part 1 is already early, in the second week.

You do each assignment in a group of 2. This group will be the same for each assignment, and you can sign up for a group in Brightspace under the "collaboration" tab. After you do so, you get a gitlab repository with a template for the assignment within a day or so.

| Assignment | Graded | deadline always at 18:00 | Percentage of grade |

|---|---|---|---|

| PART ONE | |||

| 1 - Concurrency | ✅ | Wednesday, week 3 (27 nov) | 12.5% |

| 2 - Performance | ✅ | Wednesday, week 4 (4 dec) | 12.5% |

| 3 - Programming ES | ✅ | Wednesday, week 6 (18 dec) | 25.0% |

| PART TWO | |||

| 4 - UML | ✅ | Friday, week 7 (10 jan) | 10% |

| 5 - FSM | ✅ | Friday, week 8 (17 jan) | 10% |

| 6 - DSL | ✅ | Friday, week 10 (31 jan) | 10% |

| 7 - Reflection | ✅ | Friday, week 10 (31 jan) | 20% |

Note that you need a minimum grade of a 5.0 for each of the parts to pass this course.

All assignments (at least for part 1) are published at the start of the course. That means you could technically work ahead. However, note that we synchronized the assignments with the lectures. This means that if you do work ahead, you may be missing important context needed to do the assignments.

The time for the deadline for assignment 2 may look a lot shorter than the other deadlines. That's because we extended the deadline for assignment 1 to give you a little more time at the start of the course, not because the second deadline is actually much shorter.

Late Submissions

When you submit code after the deadline, we will grade you twice. Once on what work you did submit before the deadline, and once what you did after the deadline. However, we will cap the grade from after the deadline at a 6.0.

Labs

Every week (on Thursdays) there will be lab sessions with TAs. In these lab sessions you can ask questions and discuss problems you are having.

Lecture notes

Each lecture will have an associated document with an explanation of what is discussed in the slides. This document will be published under lecture notes. We call these the lecture notes. You can use these to study ahead (before the lecture) which we recommend, so you can ask more specific questions in the lectures. And we do invite you to ask lots of questions! However, in the case you could not make it to a lecture, it should also give you a lot of the information covered in the lectures in writing.

All lecture slides are also published here

Advent of Code

During this course, there will be the yearly advent of code. Every day, a new programming challenge is published, getting harder every day. It's quite fun, and great practice if you haven't been programming for that long yet! There is a course leaderboard with ID (

356604-2d88ced0).Note: this is just for fun. It's not mandatory at all.

Staff

The lectures of this course will be given by Vivian Roest during the first part of the course and by Rosilde Corvino and Guohao Lan during the second part.

During the labs you will mostly interact with the TA team

- George Hellouin de Menibus (Head TA)

- Pepijn Kremers

Contact

If you need to contact us, you can find us at the course email address: softw-syst-ewi@tudelft.nl.

For private matters related to the course, please direct your emails to: Vivian at v.c.roest@student.tudelft.nl, Guohao at G.Lan@tudelft.nl and Rosilde at rosilde.corvino@tno.nl

Note that we prefer that you use the course email address.

Lecture slides

Last Updated: 2024-12-19

Part 1

Part 2 (New)

- Lecture 5 (intro)

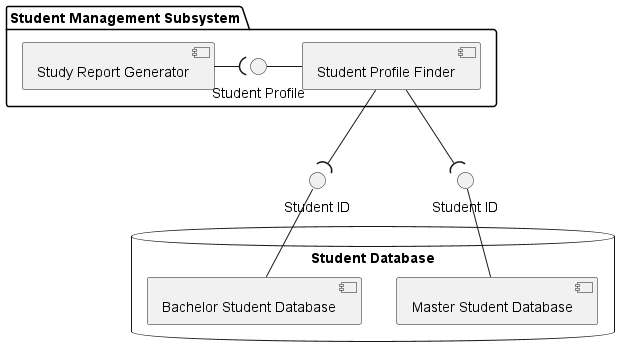

- Lecture 5 (UML, Use case, and component diagrams)

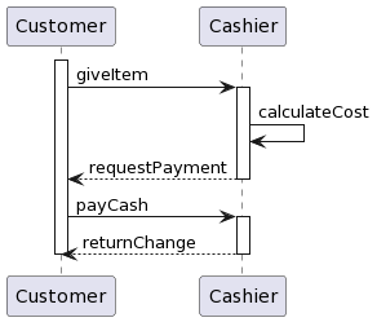

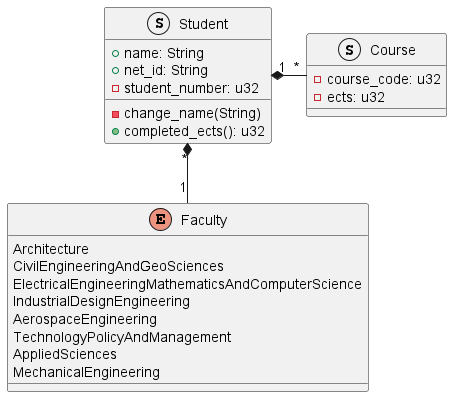

- Lecture 6 (Class and sequence diagrams)

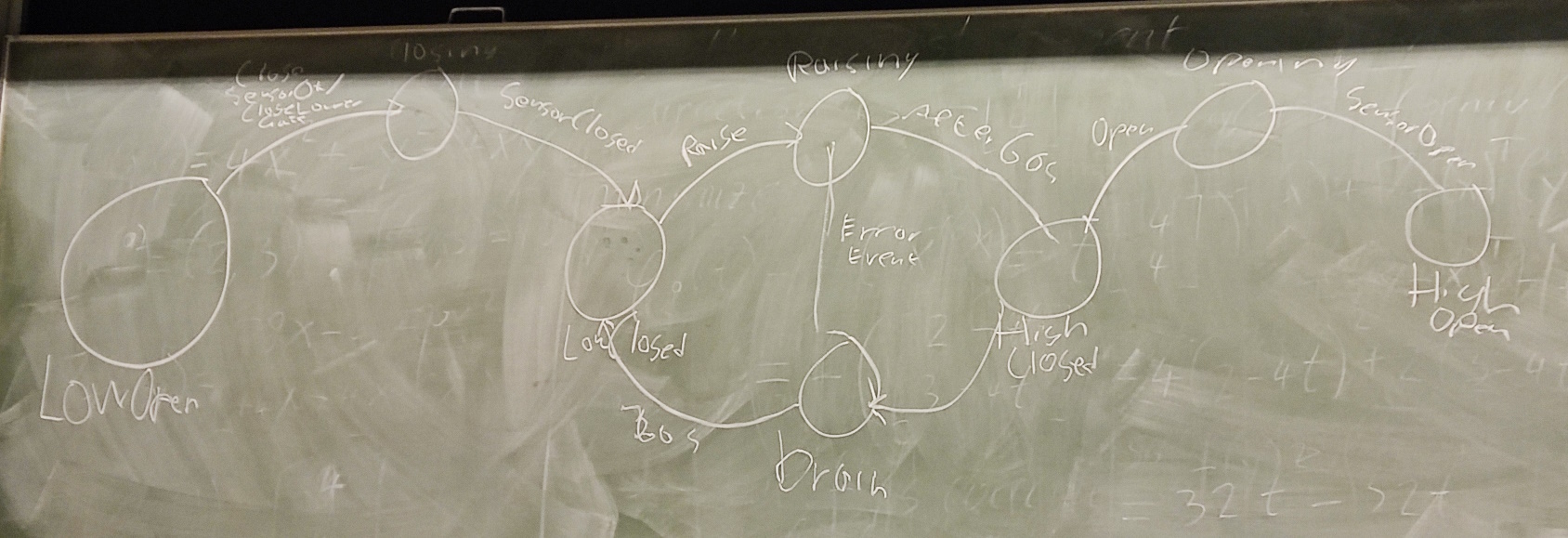

- Lecture 7 (FSM)

- Lecture 8 (DSL and conclusion)

- Jupyter Notebook for UML

Part 2 (last year)

Slides will have changes from last year, but here they are anyways

- Lecture 5 (intro)

- Lecture 5 (UML, Use case diagrams)

- Lecture 6 (Class, component, deployment, and sequence diagrams)

- Lecture 7 (FSM basics)

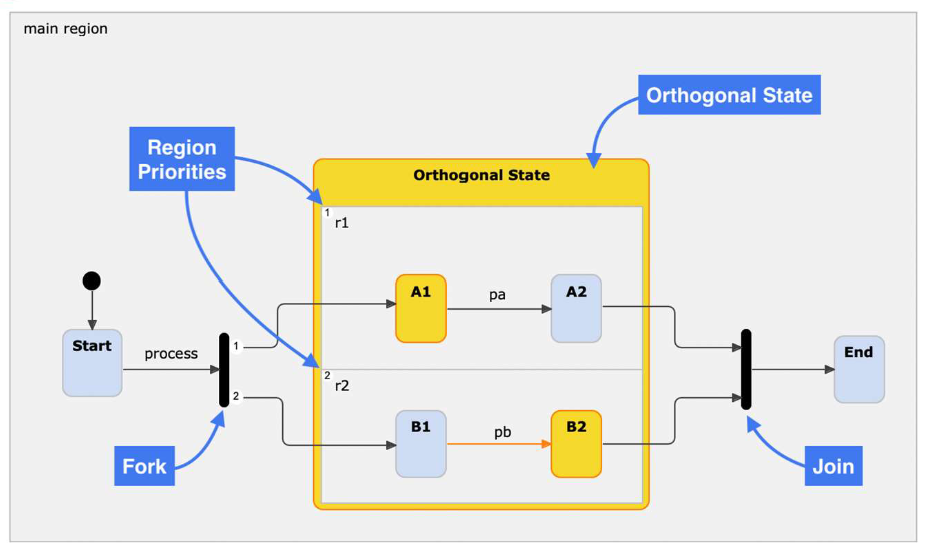

- Lecture 7 (Composite states, history nodes, orthogonal states)

- Lecture 8 (DSL and conclusion)

Software Setup

Linux

Note on Ubuntu

If you have Ubuntu:

- Be aware that Ubuntu is not recommended by this course

- Carefully read the text below!

- DO NOT INSTALL

rustcorcargoTHROUGHapt. Install using official installation instructions and userustup- before installation, make sure you have

build-essential,libfontconfigandlibfontconfig1-devinstalled- Be prepared for annoying problems

We recommend you to use Linux for this course. It is not at all impossible to run the software on Windows or OSX, in fact, it might work quite well. However, not all parts of for example the final project have been tested on these systems.

Since everything has been tested on Linux, this is what we can best give support on. On other platforms, we cannot always guarantee this, although we will try!

If you're new to Linux, we highly recommend Fedora Linux to get started.

You might find that many online resources will recommend Ubuntu to get started, but especially for Rust development this

may prove to be a pain. If you install Rust through the official installer (rustup), it can work just fine on Ubuntu.

However, if you installed rust through apt it will not work.

Fedora also provides a gentle introduction to Linux, but will likely provide a better experience. For this course and for pretty much all other situations as well.

See Toolchain how to get all required packages.

Windows

If you really want to try and use Windows, please do so through WSL (Windows Subsystem for Linux). To do this, install "Fedora Linux" through the windows store and follow the editor specific setup to use WSL.

Toolchain

To start using rust, you will have to install a rust toolchain. To do so, you can follow the instructions on https://www.rust-lang.org/tools/install. The instructions there should be pretty clear, but if you have questions about it we recommend you ask about it in the labs.

To install all required packages for Fedora run the following:

sudo dnf install code rust cargo clang cmake fontconfig-devel

Warning

Do not install

rustcorcargothrough your package manager on Ubuntu, Pop_os!, Mint, Debian or derived linux distributions. Read our notes about these distros here and then come back.Due to their packaging rules, your installation will be horribly out of date and will not work as expected. On Fedora we have verified that installing rust through

dnfdoes work as expected. For other distributions follow the installation instructions above and do not use your package manager. See our notes on Linux.

Editor setup

To use any programming language, you need an editor to type text (programs) into. In theory, this can be any (plain-text) editor.

However, we have some recommendations for you.

CLion/Rust Rover (Jetbrains IDE)

In class, we will most likely be giving examples using Rust Rover. It is the editor some TAs for the course use. To use it you can get a free student-license at https://www.jetbrains.com/community/education/#students

You will have to download the rust plugin for CLion too. This is easy enough (through the settings menu), and CLion might even prompt you for it on installation. If you can't figure it out, feel free to ask a question in the labs.

If using Windows make sure to also follow the wsl specific documentation.

Visual Studio Code

Visual Studio Code (not to be confused with "visual studio") is an admittedly more light-weight editor that has a lot of the functionality CLion has too. In any case, the teachers and TAs have some experience using it so we can support you if things go wrong. You can easily find it online, you will need to install the "rust analyzer" plugin, which is actually written in Rust and is quite nice.

If using Windows make sure to also follow the wsl specific documentation.

Other

Any other editor (vim, emacs, ed) you like can be used, but note that the support you will get from teachers and TAs will be limited if at all. Do this on your own risk. If you're unfamiliar with programming tools or editors, we strongly recommend you use either CLion or VSCode.

Lecture Notes

Week 1

Week 2

Week 3

Week 4

Lecture 1.1

Contents

- Course Information

- Threads

- Data races

- Global variables and Data Race prevention in Rust

- Mutexes

- Mutexes in Rust

- Lock Poisoning

- Sharing heap-allocated values between threads

- Read-Write locks

Course Information

In this lecture we discussed the setup of the course and deadlines too. However, you can already find those on the homepage of this website

Threads

Most modern CPUs have more than one core. Which means that, in theory, a program can do more than a single thing at the same time. In practice this can be done by creating multiple processes or multiple threads within one process. How this works will be explained in lecture 1.2.

For now, you can think of threads as a method with which your program can execute multiple parts of your program at the same time. Even though the program is being executed in parallel, all threads still share the same memory * except* for their stacks1. That means that, for example, a global variable can be accessed by multiple threads.

Data races

This parallelism can cause a phenomenon called a data race. Let's look at the following C program:

static int a = 3;

int main() {

a += 1;

}

And let's now also look at the associated assembly:

main:

push rbp

mov rbp,rsp

mov eax,DWORD PTR [rip+0x2f18] # 404028 <a>

add eax,0x1

mov DWORD PTR [rip+0x2f0f],eax # 404028 <a>

mov eax,0x0

pop rbp

ret

Notice, that after some fiddling with the stack pointer, the += operation consists of three instructions:

- loading the value of

ainto a register (eax) - adding

1to that register - storing the result back into memory

However, what happens if more than one thread were to execute this program at the same time? The following could happen:

| thread 1 | thread 2 |

|---|---|

load the value of a into eax | load the value of a into eax |

add 1 to thread 1's eax | add 1 to thread 2's eax |

store the result in a | store the result in a |

Both threads ran the code to increment a. But even though a started at 3, and was incremented by both threads, the

final value of a if 4. Not 5. That's because both threads read the value of 3, added 1 to that, and both

stored back the value 4.

To practically demonstrate the result of such data races, we can look at the following c program:

#include <stdio.h>

#include <threads.h>

int v = 0;

void count(int * delta) {

// add delta to v 100 000 times

for (int i = 0; i < 100000; i++) {

v += *delta;

}

}

int main() {

thrd_t t1, t2;

int d1 = 1, d2 = -1;

// run the count function with delta=1

thrd_create(&t1, count, &d1);

// run the count function with delta=-1 at the same time

thrd_create(&t2, count, &d2);

thrd_join(t1, NULL);

thrd_join(t2, NULL);

printf("%d\n", v);

}

Since we increment and decrement v 100 000 times, you'd expect the result to be 0. However, that's not the case. You

can run this program yourself to see (just compile it with a compiler that supports C11), but I've run it a couple of

times and these were the results of my 8 runs:

| run 1 | run 2 | run 3 | run 4 | run 5 | run 6 | run 7 | run 8 |

|---|---|---|---|---|---|---|---|

-89346 | 28786 | 23767 | -83430 | -63039 | -15282 | -82377 | -65402 |

You see, the result is always different, and never 0. That's because some of the additions and subtractions are lost

due to data races. We will now look at why this happens.

Global variables and Data Race prevention in Rust

It turns out, sharing memory between threads is often an unsafe thing to do. Generally, there are two ways to share memory between threads. One option we've seen, where multiple threads access a global variable. The other possibility, is that two thread get a pointer or reference to a memory location that is for example on the heap, or on the stack of another thread.

Notice that for example, in the previous c program, the threads could have also modified the delta through the int *

passed in. The deltas for the two threads are stored on the stack of the main thread. But, because the c program never

updates the delta, it only reads the value, sharing the delta is something that's totally safe to do.

Thus, sharing memory between threads can be safe. As long as at most one thread can mutate the memory, all is fine!

In Rust, there's a rule that these mechanics may remind you of. If not, take a look at the lecture notes from Software Fundamentals. A piece of memory can, at any point in time, only be borrowed by a single mutable reference or any number of immutable references - references that cannot change what's stored at the location they reference. These rules make it fundamentally impossible to create a data race involving references to either the heap or another thread's stack.

But what about global variables? Can those still cause data races? Let's try to recreate the C program from above in Rust:

use std::thread; static mut v: i32 = 0; fn count(delta: i32) { for _ in 0..100_000 { v += delta; } } fn main() { let t1 = thread::spawn(|| count(1)); let t2 = thread::spawn(|| count(-1)); t1.join().unwrap(); t2.join().unwrap(); println!("{}", v); }

In Rust, you make a global variable by declaring it static, and we make it mutable, so we can modify it in

count(). thread::spawn creates threads, and .join() waits for the thread to finish. But, if you try compiling

this you will encounter an error:

error[E0133]: use of mutable static is unsafe and requires unsafe function or block

--> src/main.rs:31:9

|

31 | v += delta;

| ^^^^^^^^^^ use of mutable static

|

= note: mutable statics can be mutated by multiple threads:

aliasing violations or data races will cause undefined behavior

The error even helpfully mentions that this can cause data races.

Why then, can you even make a static variable mutable? If any access causes the program not to compile? Well, this

compilation error is actually one we can circumvent. We could put the modification of the global variable in

an unsafe block. We will talk about the exact behaviour of unsafe blocks in lecture 3

That means, we can make this program compile by modifying it like so:

use std::thread; static mut v: i32 = 0; fn count(delta: i32) { for _ in 0..100_000 { // add an unsafe block unsafe { v += delta; } } } fn main() { let t1 = thread::spawn(|| count(1)); let t2 = thread::spawn(|| count(-1)); t1.join().unwrap(); t2.join().unwrap(); // add an unsafe block unsafe { println!("{}", v); } }

Even though the program now compiles, we did introduce the same data race problem as we previously saw in C. This program will rarely give 0 as its result. Is there a way to solve this?

Mutexes

Let's first look how we can solve the original data race problem in C. What you can do to make sure data races do not occur, is to add a critical section. A critical section is a part of the program, in which you make sure that no other threads execute that part at the same time. One way to create a critical section is to use a mutex. A mutex's state can be seen as a boolean. It's either locked, or unlocked. A mutex can safely be shared between threads, and if one thread tries to lock the shared mutex, one of two things can occur:

- The mutex is unlocked. If this is the case, the mutex is immediately locked.

- The mutex is currently locked by another thread. The thread that tries to lock it has to wait.

If a thread has to wait to lock the thread, generally the thread is suspended such that it doesn't consume any CPU resources while it waits. The OS may schedule another thread that is not waiting on a lock.

However, what's important is that this locking operation is atomic. In other words, a mutex really can only be locked by one thread at a time without fear of data races. Let's go back to C and see how we would use a mutex there:

int v = 0;

mtx_t m;

void count(int * delta) {

for (int i = 0; i < 100000; i++) {

// start the critical section by locking m

mtx_lock(&m);

v += *delta;

// end the section by unlocking m. This is very important

mtx_unlock(&m);

}

}

int main() {

thrd_t t1, t2; int d1 = 1, d2 = -1;

// initialize the mutex.

mtx_init(&m, mtx_plain);

thrd_create(&t1, count, &d1);

thrd_create(&t2, count, &d2);

thrd_join(t1, NULL);

thrd_join(t2, NULL);

printf("%d\n", v);

}

The outcome of this program, in contrast to the original program, is always zero. That's because any time v is updated,

the mutex is locked first. If another thread starts executing the same code, it has to wait until the other thread unlocks it,

therefore the two threads can't update v at the same time.

But still, a lot of things can go wrong in this c program. For example, we need to make sure that any time we use v, we lock the mutex. If we forget it once, our program is unsafe. And if we forget to unlock the lock once? Well, then the program may get stuck forever.

Mutexes in Rust

In Rust, the process of using a mutex is slightly different. To start, a mutex and the variable it protects are not

separated. Intead of making a v and an m like in the C program above, we combine the two:

#![allow(unused)] fn main() { let a: Mutex<i32> = Mutex::new(0); }

Here, a is a mutex, and inside the mutex, an integer is stored. However, the storage location of our value within the

mutex is private. That means, you cannot access it from the outside. The only way to read or write the value inside a

mutex is to lock it. This conveniently makes a safety concern we'd have in C impossible: you can never update the

value without locking the mutex.

In Rust, the lock function returns a so-called mutex guard. Let's look at that:

use std::sync::Mutex; fn main() { let a: Mutex<i32> = Mutex::new(0); // locking returns a guard. we'll talk later at what the unwrap is for. let mut guard = a.lock().unwrap(); // the guard is a mutable pointer to the integer inside our mutex // that means we can use it to increment the value, from what was initially 0 *guard += 1; // the scope of the main function ends here. This drops the `guard` // variable, and automatically unlocks the mutex }

The guard both acts as a way to access the value inside the mutex, and as an indicator of how long to lock the mutex. As soon as the guard is dropped (i.e. goes out of scope), it automatically unlocks the mutex that it was associated with.

What may surprise you, is that we never declared a to be mutable. The reason mutability is important in Rust, is to

prevent data races and pointer aliasing. Both of those involve accessing the same piece of memory mutably at the same

time. But a mutex already prevents that: we can only lock a mutex once. That means that since using a mutex already

prevents both mutable aliasing and data races, so we don't really need the mutability rules for mutexes. This is all possible,

because the .lock() function does not need a mutable reference to the mutex. And that allows us to share references to this mutex safely between threads since Rust's rules would prevent us from sharing a mutable reference between threads.

With that knowledge, let's look at the an improved Rust version of the counter program:

use std::thread; use std::sync::Mutex; static v: Mutex<i32> = Mutex::new(0); fn count(delta: i32) { for _ in 0..100_000 { // add an unsafe block *v.lock().unwrap() += delta; } } fn main() { let t1 = thread::spawn(|| count(1)); let t2 = thread::spawn(|| count(-1)); t1.join().unwrap(); t2.join().unwrap(); // add an unsafe block println!("{}", v.lock().unwrap()); }

Lock Poisoning

You may notice that we need to use unwrap every time we lock. That's because lock returns

a Result. Which

means, it can fail. To learn why, we need to consider the case in which a program crashes while it holds a lock.

When a thread crashes (or panics, in Rust language), it doesn't crash other threads, nor will it exit the program. That means, that if another thread was waiting to acquire the lock, it may be stuck forever when the thread that previously had the lock crashed before unlocking it.

To prevent threads getting stuck like that, the lock is "poisoned" when a thread crashes while holding it. From that

moment onward, all other threads that try to lock the same lock, will fail to lock, and instead see the Err() variant of the

Result. And what does unwrap do? It crashes the thread when it sees an Err() variant. In effect, this makes sure that

if one thread crashes while holding the lock, all threads crash when acquiring the same lock. And even though crashing

may not be desired behaviour, it's a lot better than being stuck forever.

If you really want to make sure your threads don't crash when the lock is poisoned, you could handle the error by using matching. But, it's actually not considered bad practice to unwrap lock results.

Sharing heap-allocated values between threads

Sometimes, it's not desirable to use global variables to share information between threads. Instead, you may want to pass a reference to a local variable to a thread. For example, with the counter example we may want multiple pairs of threads to be counting up and down at the same time. But that's not possible when we use a global, since that's shared between all threads.

Let's look at how we may try to implement this:

use std::thread; use std::sync::Mutex; fn count(delta: i32, v: &Mutex<i32>) { for _ in 0..100_000 { *v.lock().unwrap() += delta } } fn main() { // v is a local variable let v = Mutex::new(0); // we pass an immutable reference to it to count // note: this is possible since locking doesn't require // a mutable reference let t1 = thread::spawn(|| count(1, &v)); let t2 = thread::spawn(|| count(-1, &v)); t1.join().unwrap(); t2.join().unwrap(); println!("{}", v.lock().unwrap()); }

But you'll find that this doesn't work. That's because it's possible for sub-threads to run for longer than the main

thread. It could be that main() has already returned and deallocated v before the counting has finished.

As it turns out, this isn't actually possible because we join the threads before that happens. But this is something Rust cannot track. Scoped threads could solve this, but let's first look into how to solve this without using scoped threads.

What we could do is allocate v on the heap. The heap will be around for the entire duration of the program, even

when main exits.

In Software Fundamentals

we have learned that we can do this by putting our value inside a Box.

fn main() { let v = Box::new(Mutex::new(0)); // rest }

But Box doesn't allow us to share its content between threads (or even two functions) because that would make it

impossible to figure out when to deallocate the value. If you think about it, if we share the value between the two

threads, which thread should free the value? The main thread potentially can't do it if it exited before the threads

did.

Rc and Arc

As an alternative to Box, there is the Rc type. It also allocates its contents on the heap, but it allows us to

reference those contents multiple times. So how does it know when to deallocate?

Rc stands for "reference counted". Reference counting means that every time we create a new reference to the Rc,

internally a counter goes up. On the flip side, every time we drop a reference, the counter goes down. When the counter reaches

zero, nothing references the Rc any more and the contents can safely be deallocated.

Let's look at an example:

use std::rc::Rc; fn create_vectors() -> (Rc<Vec<i32>>, Rc<Vec<i32>>) { // here we create a reference counted value with a vector in it // The reference count starts at 1, since a references the Vec let a = Rc::new(vec![1, 2, 3]); // here we clone a. Cloning an Rc doesn't clone the contents. // Instead, a new reference to the same Vec is created. Because // we create 2 more references here, at the end the reference // count is `3` let ref_1 = a.clone(); // doesn't clone the vec! only the reference! let ref_2 = a.clone(); // doesn't clone the vec! only the reference! // so here, the reference count is 3 // but only ref_1 and ref_2 are returned. Not a. // Instead, a is dropped at the end of the function. // But dropping a won't deallocate the Vector since the // reference count is still 2. (ref_1, ref_2) } fn main() { // here we put ref_1 and ref_2 in a and b. // However, both a and b refer to the same vector, // with a reference count of 2. let (a, b) = create_vectors(); // Both are the same vector println!("{:?}", a); println!("{:?}", b); // here, finally both a and b are dropped. This makes the // reference count first go down to 1, (when a is dropped) // and then to 0. When b is dropped, it notices the reference // count reaching zero and it frees the vector since it is now. // sure nothing else references the vector anymore }

To avoid data races, an Rc does not allow you to mutate its internals. If you want to anyway, we could use a Mutex again

since a Mutex allows us to modify an immutable value by locking.

Note that an

Rcis slightly less efficient than using normal references orBoxes. That's because every time you clone anRcor drop anRc, the reference count needs to be updated.

Send and Sync

Let's try to write the original counter example with a reference counted local variable:

use std::rc::Rc; use std::sync::Mutex; use std::thread; fn count(delta: i32, v: Rc<Mutex<i32>>) { for i in 0..100_000 { *v.lock().unwrap() += delta } } fn main() { // make an Rc let v = Rc::new(Mutex::new(0)); // clone it twice for our two threads let (v1, v2) = (v.clone(), v.clone()); // start the counting as we have done before let t1 = thread::spawn(|| count(1, v1)); let t2 = thread::spawn(|| count(-1, v2)); t1.join().unwrap(); t2.join().unwrap(); println!("{}", v.lock().unwrap()); }

You will find that this still does not compile! As it turns out, it is not safe for us to send an Rc from one thread

to another or to use one concurrently from multiple threads. That's because every time an Rc is cloned or dropped, the

reference counter has to be updated. If we make mistakes with that, we might free the contained value too early, or not

at all. But if we update the reference count from multiple threads, we could create a data race again. We can solve this

by substituting our Rc for an Arc.

Just like an Rc is slightly less efficient than a Box, an Arc is slightly less efficient than an Rc. That's because

when you clone an Arc, the reference count is atomically updated. In other words, it uses a critical section to prevent

data races (though it doesn't actually use a mutex, it's a bit smarter than that).

use std::sync::{Arc, Mutex}; use std::thread; fn count(delta: i32, v: Arc<Mutex<i32>>) { for i in 0..100_000 { *v.lock().unwrap() += delta } } fn main() { // make an Rc let v = Arc::new(Mutex::new(0)); // clone it twice for our two threads let (v1, v2) = (v.clone(), v.clone()); // start the counting as we have done before let t1 = thread::spawn(|| count(1, v1)); let t2 = thread::spawn(|| count(-1, v2)); t1.join().unwrap(); t2.join().unwrap(); println!("{}", v.lock().unwrap()); }

And finally, our program works!

But there is another lesson to be learned here: Apparently, some datatypes can only work safely when they are

contained within a single thread. An Rc is an example of this, but there's also Cells and RefCells, and

for example, you also can't really move GPU rendering contexts between threads.

In Rust, there are two traits (properties of types): Send and Sync that govern this.

- A type has the

Sendproperty, if it's safe for it to be sent to another thread - A type has the

Syncproperty, if it's safe for it to live in one thread, and be referenced and read from in another thread.

An Rc is neither Send nor Sync, while an Arc is both as long as the value in the Arc is also Send and Sync.

Send and Sync are automatically implemented for almost any type. A type only doesn't automatically get

these properties if the type explicitly opts out of being Send or Sync, or if one of the members of the type

isn't Send or Sync.

For example, an integer is both Send and Sync, and so is a struct only containing integers.

But an Rc explicitly opts out of being Send and Sync:

#![allow(unused)] fn main() { // from the rust standard library impl<T: ?Sized> !Send for Rc<T> {} impl<T: ?Sized> !Sync for Rc<T> {} }

And a struct with in it an Rc:

#![allow(unused)] fn main() { use std::rc::Rc; struct Example { some_field: Rc<i64>, } }

is also not Send and not Sync.

It is possible for a type to be only Send or only Sync if that's required.

Scoped threads

In Rust 1.63, a new feature was introduced named scoped threads. This allows you to tell the compiler that threads must terminate before the end of a function. And that means, it becomes safe to share local variables with threads.

Let us, for the last time, look at the counter example, but implement it without having to allocate a reference counted type on the heap:

use std::sync::Mutex; use std::thread; fn count(delta: i32, v: &Mutex<i32>) { for i in 0..100_000 { *v.lock().unwrap() += delta } } fn main() { let v = Mutex::new(0); // create a scope thread::scope(|s| { // within the scope, spawn two threads s.spawn(|| count(1, &v)); s.spawn(|| count(-1, &v)); // at the end of the scope, the scope automatically // waits for the two threads to exit }); // here the threads have *definitely* exited. // and `v` is still alive here. println!("{}", v.lock().unwrap()); }

Because the scope guarantees the threads to exit before the main thread does, it's safe to borrow variables from the local scope of the main function. This means that allocating on the heap becomes unnecessary.

Read-Write locks

In some cases, a Mutex is not the most efficient locking primitive to use. Usually that's when a lock is read way more

often than it's written to. Any time the code wants to read from a Mutex, it has to lock it, disallowing any other

threads to either read or write to it. But when a thread just want to read from a

Mutex, it's perfectly safe for other threads to also read from it at the same time. This is the problem that

an std::sync::RwLock solves. It's like a Mutex, but has two different lock functions. read (which returns an

immutable reference to its internals) and write, which works like lock on mutexes. A RwLock allows multiple

threads to call read at the same time. You can find more documentation at

them here.

-

Technically there are also thread-local variables which are global, but also not shared between threads. Instead, every thread will get its own copy of the variable. ↩

Lecture 1.2

Contents

Programs, processes and threads

Programs

Before we continue talking about threads, it's useful to talk about some definitions. Namely, what exactly is the difference between a program, a process and a thread.

Let's start with programs. Programs are sequences of instructions, often combined with static data. Programs usually live in files, sometimes uncompiled (source code), sometimes compiled. Usually, when a language directly executes source code, we call this an interpreted language. Interpreted language usually rely on some kind of runtime that can execute the source code. This runtime is usually a compiled program.

When a program is stored after it is compiled, we usually call that a "binary". The format of a binary may vary. When there's an operating system around, it usually wants binaries in a specific format. For example, on Linux, binaries are usually stored in the ELF format. However, especially if you are running code on a system without an operating system, the compiled program is sometimes literally a byte-for-byte copy of the representation of that program in the memory of the system that will run the program. From now on, when we talk about a program running under an operating system, you can assume that that operating system is Linux. The explanation is roughly equivalent for other operating systems, but details may vary.

Processes

When you ask Linux to run a program, it will create a process. A process is an instance of a program. This instantiation is called loading a program, and the operating system will initialize things like the stack (pointer) and program counter such that the program can start running. Each process has its own registers, own stack, and own heap.

Each process can also assume that it owns the entirety of memory. This is of course not true, there are other processes also running, also using parts of memory. A system called virtual memory makes this possible. Every time a process tries to access a memory location, the location (known as the virtual address) is translated to a physical address. Two processes can access the same virtual address, and yet this translation makes sure that they actually access two different physical addresses. Note that generally, an operating system is required to do this translation.

A process can fork. When it does this, the entire process is cloned. All values on the stack and heap are duplicated1, and we end up with two processes. After a fork, one of the two resulting processes can start doing different things. This is how all2 processes are created after the first process (often called the init process). The operating starts the first process, and it then forks (many times) to create all the processes on the system.

Because each process has its own memory, if one forked process writes to a global variable, the other process will not see this update. It has its own version of the variable.

Threads

A thread is like a process within a process. When a new thread is created, the new thread gets its own stack and own registers. But, unlike with forking, the memory space stays shared. That means that global variables stay shared, references from one thread are valid in another thread, and data can easily be exchanged by threads. Last lecture we have of course seen that this is not always desirable, for example, when this causes data races.

Even though threads semantically are part of a process, Linux actually treats processes and threads pretty much as equals. The scheduler, about which you'll hear about more after this, schedules both processes and threads.

Scheduler

Due to forking, or spawning threads, the operating system may need to manage tens, or hundreds of processes at once. Though modern computers can have quite large numbers of cores, usually not hundreds. To create the illusion of parallelism, the operating system uses a trick: it quickly alternates the execution of processes.

After a process runs for a while (on Linux this time is variable), the register state of the process are saved in memory, allowing a different process to run. If at some point the original process is chosen again to run, the registers are reverted to the same values they were when it was scheduled out. The process won't really notice that it didn't run for a while.

Even though the scheduler tries to be fair in the way it chooses processes to run, processes can communicate with the scheduler in several ways to modify how it schedules the process. For example:

- When a process sleeps for a while (

see

std::thread::sleep), the scheduler can immediately start running another process. The scheduler could even try to run the process again as close as possible to the sleep time requested. - A process can ask the scheduler to stop executing it, and instead run another thread or process. This can be useful

when a process has to wait in a loop for another thread to finish. Then it can give the other thread more time run.

See

std::thread::yield_now - Processes can have different priorities, in Linux this is called the niceness of a process.

- Sometimes, using a synchronization primitive may communicate with the scheduler to be more efficient. For example, when a thread is waiting for a lock, and the lock is released, the scheduler could schedule that thread next.

- When a process has to wait for an IO operation to run, it can ask the operating to system to be run again when the IO operation has completed. Rust can do this by using tokio for example.

Concurrency vs Parallelism

Sometimes, the words concurrency and parallelism are used interchangeably. However, their meanings are not the same. The difference is as follows: concurrency can exist on a system with a single core. It's simply the illusion of processes running at the same time. However, it may indeed be just an illusion. By alternating the processes, they don't run at the same time at all.

In contrast, parallelism is when two processes are indeed running at the same time. This is therefore only possible on a system with more than one core. However, even though not all system have parallelism, concurrency can still cause data races.

For example, let's look at the example from last lecture:

static int a = 3;

int main() {

a += 1;

}

What could happen on a single core machine where threads are run concurrently is the following:

- Thread 1 reads

aintoaregister - Thread 1 increments the register

- Thread 1 is scheduled out, and thread 2 is allowed to run

- Thread 2 read

ainto a register - Thread 2 increments the register

- Thread 2 writes the new value (of 4) back to memory

- Thread 2 is scheduled out, and thread 1 is allowed to run

- Thread 1 writes the new value (also 4) back to memory

Both threads have tried to increment a here, and yet the value is only 1 higher.

Channels

Up to now, we've looked at how we can share memory between threads. We often want this so that threads can coordinate or communicate in some way. You could call this communication by sharing memory. However, a different strategy sometimes makes sense. This started with C. A. R. Hoare's communicating sequential processes, but the Go programming language, a language in which concurrency plays a central role, likes to call this "sharing memory by communicating".

What it boils down to, is the following: sometimes it's very natural, or more logical, not to update one shared memory location, but instead to pass messages around. Let's look at an example to illustrate this:

fn some_expensive_operation() -> &'static str { "🦀" } use std::sync::mpsc::channel; use std::thread; fn main() { let (sender, receiver) = channel(); // Spawn off an expensive computation thread::spawn(move || { let result = some_expensive_operation(); sender.send(result).unwrap(); }); // Do some useful work for awhile // Let's see what that answer was println!("{}", receiver.recv().unwrap()); }

This example comes from the rust mpsc documentation.

Here we want to do some expensive operation, which may take a long time, and do it in the background. We spawn a thread to do it, and then want to do something else before it has finished. However, we don't know exactly how long the expensive operation will take. It may take shorter than doing some useful work, or longer.

So we use a channel. A channel is a way to pass messages between threads. In this case, the message is the result of the

expensive operation. When the operation has finished, the result is sent over the channel, at which point the useful work

may or may not be done already. If it wasn't done yet, the message is simply sent and the thread can exit. When finally

the receiver calls .recv() it will get the result from the channel, since it was already done. However, if the useful

work is done first, it will call .recv() early. However, there's no message yet. If this is the case, the receiving

end will wait until there is a message.

A channel works like a queue. Values are inserted on one end, and removed at the other end. But, with a channel, the ends are in different threads. The threads can use this to update each other about calculations that are performed. This turns out to be quite efficient, sometimes locking isn't even required to send messages. You can imagine that for example when there are many items in the queue, and one thread pops from one end but another push on the other, the threads don't even interfere with each other since they write and read data far away from each other. In reality, it's a bit more subtle, but this may give you some intuition on why this can be quite efficient.

Multi consumer and multi producer

You may have noticed that in Rust, channels are part of the std::sync::mpsc module. So what does mpsc mean?

It stands for multi-producer-single-consumer. Which is a statement about the type of channel it provides. When

you create a channel, you get a sender and a receiver, and these have different properties.

First, both senders are Send but not Sync. That means, they can be sent or moved to other threads, but they cannot be used at the same time from different threads. That means that if a thread wants to use a sender or receiver, it will need ownership of it.

Importantly, you cannot clone receivers. That effectively means that only one thread can have the receiver. On the other hand, you can clone senders. If a sender is cloned, it still sends data to the same receiver. Thus, it's possible that multiple threads send messages to a single consumer. That's what the name comes from: multi-producer single-consumer.

But that's not the only style of channel there is. For example, the crossbeam library (has all kinds of concurrent data structures) provides an mpmc channel. That means, there can be multiple consumers as well. However, every message is only sent to one of the consumers. Instead, multiqueue provides an mpmc channel where all messages are sent to all receivers. This is sometimes called a broadcast queue.

Bounded channels

In rust, by default, channels are unbounded. That means, senders can send as many messages as they like before a receiver needs to take messages out of the queue. Sometimes, this is not desired. You don't always want producers to work ahead far as this may fill up memory quickly, or maybe by working ahead the producers are causing the scheduler not to schedule the consumer(s) as often.

This is where bounded channels are useful. A bounded channel has a maximum size. If a sender tries to insert more items than this size, they have to wait for a receiver to take out an item first. This will block the sending thread, giving a receiving thread time to take out items. In effect, the size bound on the channel represents a maximum on how much senders can work ahead compared to the receiver.

A special case of this is the zero-size bounded channel. Here, a sender can never put any message in the channel's buffer. However, this is not always undesired. It simply means that the sender will wait until a sender can receive the message, and then it will pass the message directly to the receiver without going into a buffer. The channel now works like a barrier, forcing threads to synchronize. Both threads have to arrive to their respective send/recv points before a message can be exchanged. This causes a lock-step type behaviour where the producer produces one item, then waits for the consumer to consume one item.

Bounded channels are also sometimes called synchronous channels.

Atomics

Atomic variables are special variables on which we can perform operations atomically. That means that we can for example increment a value safely in multiple threads, and not lose counts like we saw in the previous lecture. In fact, because operations on atomics are atomic, we don't even need to make them mutable to modify them. This almost sounds too good to be true, and it is. Not all types can be atomic, because atomics require hardware support. Additionally, operating on them can be significantly slower than operating on normal variables.

I ran some benchmarks on my computer. None of them actually used multithreading, so you wouldn't actually need a mutex or an atomic here. However, it does show what the raw performance penalty of using atomics and mutexes is compared to not having one. When there is contention over the variable, speeds may differ a lot, but of course, not using either an atomic or mutex then isn't an option anymore. Also, each reported time is actually the time to do 10 000 increment operations, and in the actual benchmarked code I made sure that nothing was optimized out by the compiler artificially making code faster.

All tests were performed using criterion, and the times are averaged over many runs. Atomics seem roughly 5x slower than not using them, but about 2x faster than using a mutex.

#![allow(unused)] fn main() { // Collecting 100 samples in estimated 5.0037 s (1530150 iterations) // Benchmarking non-atomic: Analyzing // non-atomic time: [3.2609 µs 3.2639 µs 3.2675 µs] // Found 5 outliers among 100 measurements (5.00%) // 3 (3.00%) high mild // 2 (2.00%) high severe // slope [3.2609 µs 3.2675 µs] R^2 [0.9948771 0.9948239] // mean [3.2696 µs 3.2819 µs] std. dev. [20.367 ns 42.759 ns] // median [3.2608 µs 3.2705 µs] med. abs. dev. [10.777 ns 21.822 ns] fn increment(n: &mut usize) { *n += 1; } // Collecting 100 samples in estimated 5.0739 s (308050 iterations) // Benchmarking atomic: Analyzing // atomic time: [16.387 µs 16.408 µs 16.430 µs] // Found 4 outliers among 100 measurements (4.00%) // 4 (4.00%) high mild // slope [16.387 µs 16.430 µs] R^2 [0.9969321 0.9969237] // mean [16.385 µs 16.415 µs] std. dev. [63.884 ns 89.716 ns] // median [16.367 µs 16.389 µs] med. abs. dev. [33.934 ns 68.379 ns] fn increment_atomic(n: &AtomicUsize) { n.fetch_add(1, Ordering::Relaxed); } // Collecting 100 samples in estimated 5.1363 s (141400 iterations) // Benchmarking mutex: Analyzing // mutex time: [36.148 µs 36.186 µs 36.231 µs] // Found 32 outliers among 100 measurements (32.00%) // 16 (16.00%) low severe // 4 (4.00%) low mild // 6 (6.00%) high mild // 6 (6.00%) high severe // slope [36.148 µs 36.231 µs] R^2 [0.9970411 0.9969918] // mean [36.233 µs 36.289 µs] std. dev. [116.84 ns 166.02 ns] // median [36.267 µs 36.288 µs] med. abs. dev. [32.770 ns 75.978 ns] fn increment_mutex(n: &Mutex<usize>) { *n.lock() += 1; } }For atomics, I used the relaxed memory ordering here since the actual ordering really doesn't matter, as long as all operations occur. I did test with different orderings, and it made no difference here.

Atomics usually work using special instructions or instruction modifiers, which instruct the hardware to temporarily lock a certain memory address while an operation, like an addition, takes place. Often, this locking actually happens on the level of the memory caches. Temporarily, no other cores can update the values in the cache. Do note that this is an oversimplification, atomics are quite complex.

Operations on Atomics

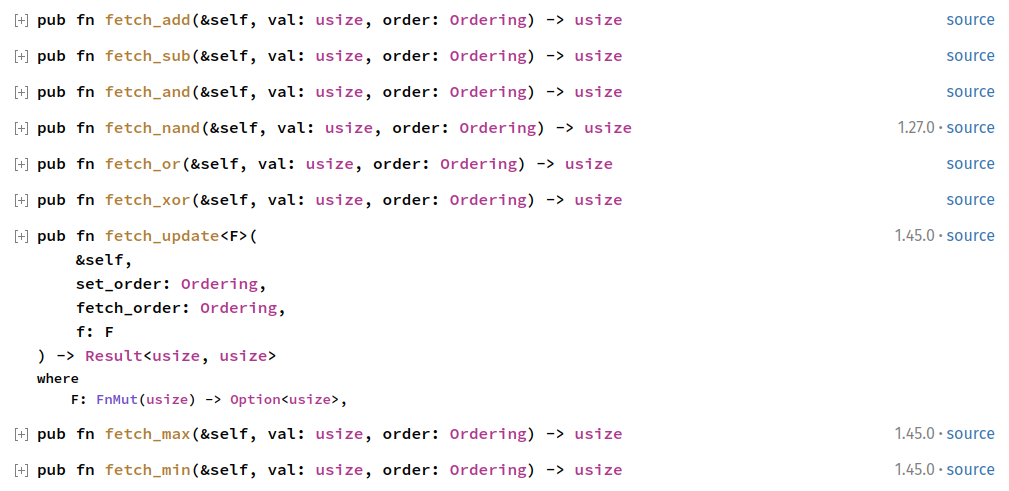

To work with atomics variables, you often make use of some special operations defined on them. First, there are the "fetch" operations.

They perform an atomic fetch-modify-writeback sequence. For example, the .fetch_add(n) function on atomic integers, adds n to an atomic

integer by first fetching the value, then adding n to it, and then writing back (all atomically). There is a large list of such fetch operations.

For example, atomic integers define the following:

The other common operation on atomics is the compare-exchange operation. Let's look at a little real-world example. Let's implement a so-called spinlock (like a custom mutex, that simply waits in an infinite loop until the lock is available). It's quite a bad design, and inefficient, but it works nonetheless:

#![allow(unused)] fn main() { struct SpinLock<T> { locked: AtomicBool, // UnsafeCells can magically make immutable things mutable. // This is unsafe to do and requires us to use "unsafe" code. // But if we ensure we lock correctly, we can make it safe. value: UnsafeCell<T> } impl<T> SpinLock<T> { pub fn lock(&self) { while self.locked.compare_exchange(false, true).is_err() { std::thread::yield_now(); } // now it's safe to access `value` as mutable, since // we know the mutex is locked and we locked it self.locked.store(false); } } }

So what's happening here? locked is an atomic boolean. That means, that we can perform atomic operations on it.

When we want to lock the mutex (to access the value as mutable), we want to look if the lock is free. We use a compare exchange operation for this.

Compare exchange looks if the current value of an atomic is equal to the first argument, and if it is, it sets it equal to the second argument. So what's

happening here is that the compare_exchange looks if the value of locked is false (unlocked), and if so, set it to locked. If the value of locked was

indeed false, we just locked it and can continue. We know for sure we are the only thread accessing the value.

However, if we failed to do the exchange, another thread must have locked the lock already. In that case (when the compare_exchange returned an error), we execute

the loop body and retry again and again, until at some point another thread unlocks the mutex and the compare_exchange does succeed. The yield_now function makes

this all a bit more efficient, it tells the scheduler to give another thread some time. That increases the chance that in the next loop cycle the lock is unlocked.

Orderings

Because atomics can have side effects (in other threads for example), without needing to be mutable, the compiler cannot make certain assumptions about them automatically. Let's study the following example:

#![allow(unused)] fn main() { let mut x = 1; let y = 3; x = 2; }

The compiler may notice that the order of running x and y does not matter, and may reorder it to this:

#![allow(unused)] fn main() { let mut x = 1; x = 2; let y = 3; }

Or even this:

#![allow(unused)] fn main() { let mut x = 3; let y = 3; }

let y = 3 does not touch x, so the value of x is not actually important at that moment. However, with atomics, ordering

can be very important. When certain operations are reordered, compared to other operations, your logic may break. Let's say you

have a program like the following (this is pseudocode, not rust. All variables are atomic and can influence other threads):

#![allow(unused)] fn main() { // for another thread to work properly, v1 and v2 may // *never* be true at the same time v1 = false; v2 = true; // somewhere in the code v2 = false; v1 = true; }

Since between v2 = false and v1 = true, nothing uses either v1 or v2, the compiler may assume that setting

v1 and v2 can be reordered to what's shown below. Maybe that's more efficient.

#![allow(unused)] fn main() { // for another thread to work properly, v1 and v2 may // *never* be true at the same time v1 = false; v2 = true; // somewhere in the code v1 = true; // here, both v1 and v2 are true!!!!! v2 = false; }

However, since atomics may be shared between threads, another thread may briefly observe both v1 and v2 to be true

at the same time!

Therefore, when you update an atomic in Rust (and in other languages too, this system is pretty much copied from c++), you also need to specify what the ordering of that operation is. And not just to prevent the compiler reordering, some CPUs can actually also reorder the execution of instructions, and the compiler may need to insert special instructions to prevent this. Let's look on how to do that:

First let's look at the Relaxed ordering:

use std::sync::atomic::{Ordering, AtomicUsize}; fn main() { let a = AtomicUsize::new(0); let b = AtomicUsize::new(1); // stores the value 1 with the relaxed ordering a.store(1, Ordering::Relaxed); // adds 2 to the value in a with the relaxed ordering b.fetch_add(2, Ordering::Relaxed); }

Relaxed sets no requirements on the ordering of the store and the add. That means, that other threads may actually

see b change to 2 before they see a change to 1.

Memory ordering on a single variable (a single memory address) is always sequentially consistent within one thread. That means that with

#![allow(unused)] fn main() { let a = AtomicUsize::new(0); a.store(1, Ordering::Relaxed); a.fetch_add(2, Ordering::Relaxed); }Here the value of

awill first be 0, then 1 and then 3. But if the store and add were reordered, you may expect to see first 0, then 2 and then 3, suddenly the value 2 appears. However, this is not the case. Because both variables operate on the same variable, the operations will be sequentially consistent, and the variableawill never have the value 2.Orderings are only important to specify how the ordering between updates two different variables should appear to other threads, or how updates to the same variable in two different threads should appear to third threads.

This ordering is pretty important for the example above with the spinlock. Both the store and the compare_exchange take an ordering. In fact,

compare exchange actually takes two orderings (but the second ordering parameter can almost always be Relaxed.)

#![allow(unused)] fn main() { use std::sync::atomic::{AtomicBool, Ordering::*}; use std::cell::UnsafeCell; struct SpinLock<T> { locked: AtomicBool, // UnsafeCells can magically make immutable things mutable. // This is unsafe to do and requires us to use "unsafe" code. // But if we ensure we lock correctly, we can make it safe. value: UnsafeCell<T> } impl<T> SpinLock<T> { pub fn lock(&self) { while self.locked.compare_exchange(false, true, Relaxed, Relaxed).is_err() { std::thread::yield_now(); } // now it's safe to access `value` as mutable, since // we know the mutex is locked and we locked it self.locked.store(false, Relaxed); } } }

This code does not what we want it to do! If there are two threads (a and b) that both try to get this lock, and a third thread (c) currently holds the lock. It's possible that thread c unlocks the lock, and then both a and b at the same time see this, and both lock the lock.

To change this, we can use the SeqCst ordering. SeqCst makes sure that an operation appears sequentially consistent to other operations in other threads.

So when an operation X is SeqCst, all previous operations before X will not appear to other threads as happening after X, and all future operations

won't appear to other threads as happening before X. This is true, even if some of those other operations are not SeqCst. So for the spinlock, c will unlock

the lock, then, for example a could be first with trying to lock the lock. It will stop b from accessing the variable until it has completed the update.

And when a has updated the variable, the lock is locked and b will have to wait until the lock is unlocked again.

In general,

SeqCstshould be your default memory ordering. BecauseSeqcCstgives stronger guarantees to the ordering operations, it may make your program slightly slower than when you useRelaxed. However, even using sequentially consistent atomics will be a lot faster than using mutexes. AndSeqCst` will never accidentally produce the wrong result. The worst kind of bug is one where due to a wrong ordering, your program makes a mistake once in a million executions. That's almost impossible to debug.

For an actual spinlock, you'll actually not see SeqCst used often. Instead, there are two different orderings (Acquire and

Release, often used in pairs) which provide fever guarantees than SeqCst, but enough to implement these kinds of locks. Their

names actually come from what you use to implement a lock. To acquire a lock, you use Acquire ordering, and to release (unlock) a lock,

you use Release ordering. Acquire and Release operations form pairs, specifically ordering those correctly.

This can make code slightly faster.

Actually using different orderings can be quite complicated. If you want to know more, there have been loads of scientific papers about this. This one for example. And the Rust and C++ documentation about it aren't bad at all either.

The final spinlock implementation is as follows. Note again, that making a lock like this is often not such a good choice, and you should almost always use the one from the standard library.

#![allow(unused)] fn main() { use std::sync::atomic::{AtomicBool, Ordering::*}; use std::cell::UnsafeCell; struct SpinLock<T> { locked: AtomicBool, // UnsafeCells can magically make immutable things mutable. // This is unsafe to do and requires us to use "unsafe" code. // But if we ensure we lock correctly, we can make it safe. value: UnsafeCell<T> } impl<T> SpinLock<T> { pub fn lock(&self) { while self.locked.compare_exchange(false, true, Acquire, Relaxed).is_err() { std::thread::yield_now(); } // now it's safe to access `value` as mutable, since // we know the mutex is locked and we locked it self.locked.store(false, Release); } } }

After you have read this lecture, you are ready to work on assignment 1

Resources

-

On linux, values aren't actually cloned immediately. Only when one process modifies a value in a page, only that page will be cloned. Unmodified pages will stay shared to save memory. ↩

-

That's not entirely true. Operating systems have evolved, and new system calls exist now that optimize for example the common case in which a

forksystem call is immediately followed by anexecsystem call. ↩

Lecture 2

This lecture can be seen as a summary of the Rust performance book. Although this lecture is independently created from that book, the Rust performance book is a good resource, and you will see many of the concepts described here come back in-depth there. These lecture notes strictly follow the live lectures, so we may skip one or two things that may interest you in the Rust performance book. We highly recommend you to use it when doing Assignment 2

Contents

- Benchmarking

- Profiling

- What does performance mean?

- Making code faster by being clever

- Calling it less

- Making existing programs faster

- Resources

Benchmarking

Rust is often regarded as quite a performant programming language. Just like C and C++, Rust code is compiled into native machine code, which your processor can directly execute. That gives Rust a leg up over languages like Java or Python, which require a costly runtime and virtual machine in which they execute. But having a fast language certainly doesn't say everything.

The reason is that the fastest infinite loop in the world is still slower than any program that terminates. That's a bit hyperbolic of course, but simply the fact that Rust is considered a fast language, doesn not mean that any program you write in it is immediately as fast as it can be. In these notes, we will talk about some techniques to improve your Rust code and how to find what is slowing it down.

Initially, it is important to learn about how to identify slow code. We can use benchmarks for this. A very useful tool to

make benchmarks for rust code is called criterion.

Criterion itself has lots of documentation on how to use it. Let's look at a little example from the documentation.

Below, we benchmark a simple function that calculates Fibonacci numbers:

use criterion::{black_box, criterion_group, criterion_main, Criterion};

fn fibonacci(n: u64) -> u64 {

match n {

0 => 1,

1 => 1,

n => fibonacci(n-1) + fibonacci(n-2),

}

}

pub fn fibonacci_benchmark(c: &mut Criterion) {

c.bench_function("benchmarking fibonacci", |b| {

// if you have setup code that shouldn't be benchmarked itself

// (like constructing a large array, or initializing data),

// you can put that here

// this code, inside `iter` is actually measured

b.iter(|| {

// a black box disables rust's optimization

fibonacci(black_box(20))

})

});

}

criterion_group!(benches, fibonacci_benchmark);

criterion_main!(benches);With cargo bench you can run all benchmarks in your project. It will store some data in your target folder to record

what the history of benchmarks is that you have ran. This means that if you change your implementation (of the Fibonacci

function in this case), criterion will tell you whether your code has become faster or slower.

It's important to briefly discuss the black_box. Rust is quite smart, and sometimes optimizes away the code that you

wanted to run!

For example, maybe Rust notices that the fibonacci function is quite simple and that fibonacci(20) is simply 28657.

And because the Fibonacci function has no side effects (we call such a function "pure"), it may just insert at compile time the

number 28657 everywhere you call fibonacci(20).

That would mean that this benchmark will always tell you that your code runs in pretty much 0 seconds. That'd not be ideal.

The black box simply returns the value you pass in. However, it makes sure the rust compiler cannot follow what is going

on inside. That means, the Rust compiler won't know in advance that you call the fibonacci function with the value 20,

and also won't optimize away the entire function call. Using black boxes is pretty much free, so you can sprinkle them

around without changing your performance measurements significantly, if at all.

Criterion tells me that the example above takes roughly 15µs on my computer.

It is important to benchmark your code after making a change to ensure you are actually improving the performance of your program.

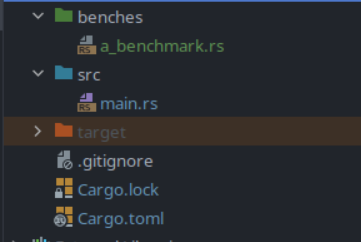

You usually put benchmarks in a folder next to

srccalledbenches, just like how you would put integration tests in a folder calledtest. Cargo understands these folders, and when you put rust files in those special folders, you can import your main library from there, so you can benchmark functions from them.

Profiling

Profiling your code is, together with benchmarking, is one of the most important tools

for improving the performance of your code. In general, profiling will show you where in your

program you spend the most amount of time. However, there are also more specific purpose profiling tools

like cachegrind which can tell you about cache hits amongst other things, some of these are contained with the valgrind program.

You can find a plethora of profilers in the rust perf book.

Profiling is usually a two-step process where you first collect data and analyse it afterwards.

Because of the data collection it is beneficial to capture program debug information,

however this is, by default, disabled for Rust's release profile. To enable this and to thus capture

more useful profiling data add the following to your Cargo.toml:

[profile.release]

debug = true

Afterwards you can either use the command line perf tool or your IDE's integration,

specifically CLion has great support for profiling built-in1.

perf install guide

We would like to provide an install guide, but for every linux distro this will be different

It's probably faster to Google "How to install perf for (your distro)" than to ask the TA's

If using the command line you can run the following commands, to profile your application:

cargo build --release

perf record -F99 --call-graph dwarf -- ./target/release/binary

Afterwards you can view the generated report in a TUI (Text-based User Interface) using (press '?' for keybinds):

perf report --hierarchy

Using this data you can determine where your program spends most of its time, with that you can look at those specific parts in your code and see what you can improve there.

You can also try to play around with this on your Grep implementation of assignment one,

to get a feel of how it works (e.g. try adding a sleep somewhere).

Before running it on the bigger projects like that of assignment 2.

Important for users of WSL1: perf does not properly work on WSL1, you can check if you run WSL1 by executing

wsl --list --verbose, if this command fails you are for sure running WSL 1, otherwise check the version column, if it says1for the distro you are using, you can upgrade it usingwsl --set-version (distro name) 2.

For some people it might be easier to use cargo flamegraph, you can install this using cargo install flamegraph.

This will create an SVG file in the root folder which contains a nice timing graph of your executable.

What does performance mean?

So far, we've only talked about measuring the time performance of a program. This is of course not the only measure of performance. For example, you could also measure the performance of a program in memory usage. In these lecture notes we will mostly talk about performance related to execution speed, but this is always a tradeoff. Some techniques which increase execution speed also increase program size. Actually, decreasing th memory usage of a program can sometimes yield speed increases. We will briefly talk about this throughout these notes, for example in the section about optimization levels we will also mention optimizing for code size.

Making code faster by being clever

The first way to improve performance of code, which is also often the best way, is to be smarter (or rely on the smarts of others). For example, if you "simply" invent a new algorithm that scales linearly instead of exponentially this will be a massive performance boon. In most cases, this is the best way to improve the speed of programs, especially when the program has to scale to very large input sizes. Some people have been clever and have invented all kinds of ways to reduce the complexity of certain pieces of code. However, we will not talk too much about this here. Algorithmics is a very interesting subject, and we many dedicated courses on it here at the TU Delft.

In these notes, we will instead talk about more general techniques you can apply to improve the performance of programs. Techniques where you don't necessarily need to know all the fine details of the program. So, let us say we have a function that is very slow. What can we do to make it faster? We will talk about two broad categories of techniques. Either:

- You make the function itself faster

- You try to call the function (or nested functions) less often. If the program does less work, it will often also take less time.

Calling it less

Let's start with the second category, because that list is slightly shorter.

Memoization

Let's look at the Fibonacci example again. You may remember it from the exercises from Software Fundamentals. If we want

to find the 100th Fibonacci number, it will probably take longer than your life to terminate. That's because to

calculate

fibonacci(20), first, we will calculate fibonacci(19) and fibonacci(18). But actually, calculating fibonacci(19)

will

again calculate fibonacci(18). Already, we're duplicating work. Our previous fibonacci(20) example will

call fibonacci(0)

a total of 4181 times.

Of course, we could rewrite the algorithm with a loop. But some people really like the recursive definition of the Fibonacci function because it's quite concise. In that case, memoization can help. Memoization is basically just a cache. Every time a function is called with a certain parameter, an entry is made in a hashmap. The key of the entry is the parameters of the function, and the value is the result of the function call. If the function is then again called with the same parameters, instead of running the function again, the memoized result is simply returned. For the fibonacci function that looks something like this:

use std::collections::HashMap; fn fibonacci(n: u64, cache: &mut HashMap<u64, u128>) -> u128 { // let's see. Did we already calculate this value? if let Some(&result) = cache.get(&n) { // if so, return it return result; } // otherwise, let's calculate it now let result = match n { 0 => 0, 1 => 1, n => fibonacci(n-1, cache) + fibonacci(n-2, cache), }; // and put it in the cache for later use cache.insert(n, result); result } fn main() { let mut cache = HashMap::new(); println!("{}", fibonacci(100, &mut cache)); }

(run it! You'll see it calculates fibonacci(100) in no-time. Note that I did change the result to a u128,

because fibonacci(100) is larger than 64 bits.)

Actually, this pattern is so common, that there are standard tools to do it. For example,

the memoize crate allows you to simply annotate a function. The code to do the

caching is then automatically generated.

use memoize::memoize;

#[memoize]

fn fibonacci(n: u64) -> u128 {

match n {

0 => 1,

1 => 1,

n => fibonacci(n-1) + fibonacci(n-2),

}

}

fn main() {

println!("{}", fibonacci(100));

}One thing you may have observed, is that this cache should maybe have a limit. Otherwise, if you call the function often enough, the program may start to use absurd amounts of memory. To avoid this, you may choose to use an eviction strategy.

The memoize crate allows you to specify this by default, by specifying a maximum capacity or a time to live (for example, an entry is deleted after 2 seconds in the cache). Look at their documentation, it's very useful.

Lazy evaluation and iterators

Iterators in Rust are lazily evaluated. This means they don't perform calculations unless absolutely necessary. For example if we take the following code:

fn main() { let result = || { vec![1, 2, 3, 4].into_iter().map(|i: i32| i.pow(2)).nth(2) }; println!("{}", result().unwrap()); }

Rust will only do the expensive pow operation on the third value, as that is the only

value we end up caring about (with the .nth(_) call). If you combine many iterator calls after each other

they can also be automatically combined for better performance. You can find the available methods on iterators

in the Rust documentation.

I/O buffering

Programs communicate with the kernel through system calls. It uses them to read and write from files, print to the screen and to send and receive messages from a network. However, each system call has considerable overhead. An important technique that can help reduce this overhead is called I/O buffering.

Actually, when you are printing, you are already using I/O buffering. When you print a string in Rust or C, the characters are not individually written to the screen. Instead, nothing is shown at all when you print. Only when you print a newline character, will your program actually output what you printed previously, all at once.

When you use files, you can make this explicit. For example, let's say we want to process a file 5 bytes at a time. We could write:

use std::fs::File; use std::io::Read; fn main() { let mut file = File::open("very_large_file.txt").expect("the file to open correctly"); let mut buf = [0; 5]; while file.read_exact(&mut buf).is_ok() { // process the buffer } }

However, every time we run read_exact, we make (one or more) system calls. But with a very simple change, we can reduce that

a lot:

use std::fs::File; use std::io::{BufReader, Read}; fn main() { let file = File::open("very_large_file.txt").expect("the file to open correctly"); let mut reader = BufReader::new(file); let mut buf = [0; 5]; while reader.read_exact(&mut buf).is_ok() { // process the buffer } }

Only one line was added, and yet this code makes a lot fewer system calls. Every time read_exact is run,

either the buffer is empty, and a system call is made to read as much as possible. Then, every next time

read_exact is used, it will use up the buffer instead, until the buffer is empty.

Lock contention

When lots of different threads are sharing a Mutex and locking it a lot, then most threads to spend most of their time waiting on the mutex lock, rather than actually performing useful operations. This is called Lock contention.

There are various ways to prevent or reduce lock contention.

The obvious, but still important, way is to really check if you need a Mutex.

If you don't need it, and can get away by e.g. using just an Arc<T> that immediately

improves performance.

Another way to reduce lock contention is to ensure you hold on to a lock

for the minimum amount of time required. Important to consider for this

is that Rust unlocks the Mutex as soon as it gets dropped, which is usually at the end of scope

where the guard is created. If you want to make really sure the Mutex gets unlocked, you can manually

drop it using drop(guard).

Consider the following example:

use std::thread::scope; use std::sync::{Arc, Mutex}; use std::time::{Instant, Duration}; fn expensive_operation(v: i32) -> i32 { std::thread::sleep(Duration::from_secs(1)); v+1 } fn main() { let now = Instant::now(); let result = Arc::new(Mutex::new(0)); scope(|s| { for i in 0..3 { let result = result.clone(); s.spawn(move || { let mut guard = result.lock().unwrap(); *guard += expensive_operation(i); }); } }); println!("Elapsed: {:.2?}", now.elapsed()); #}

Here we lock the guard for the result before we run the expensive_operation.

Meaning that all other threads are waiting for the operation to finish rather than

running their own operations. If we instead change the code to something like:

use std::thread::scope; use std::sync::{Arc, Mutex}; use std::time::{Instant, Duration}; fn expensive_operation(v: i32) -> i32 { std::thread::sleep(Duration::from_secs(1)); v+1 } fn main() { let now = Instant::now(); let result = Arc::new(Mutex::new(0)); scope(|s| { for i in 0..3 { let result = result.clone(); s.spawn(move || { let temp_result = expensive_operation(i); *result.lock().unwrap() += temp_result; }); } }); println!("Elapsed: {:.2?}", now.elapsed()); #}

The lock will only be held for the minimum time required ensuring faster execution.

Moving operations outside a loop

It should be quite obvious, that code inside a loop is run more often than code outside a loop. However, that means that any operation you don't do inside a loop, and instead move outside it, saves you time. Let's look at an example:

#![allow(unused)] fn main() { fn example(a: usize, b: u64) { for _ in 0..a { some_other_function(b + 3) } } }

The parameter to the other function is the same every single repetition. Thus, we could do the following to make our code faster:

#![allow(unused)] fn main() { fn example(a: usize, b: u64) { let c = b + 3; for _ in 0..a { some_other_function(c) } } }

Actually, in a lot of cases, even non-trivial ones, the compiler is more than smart enough to do this for you. The assembly generated for the first example shows this:

example::main:

push rax

test rdi, rdi

je .LBB0_3

add rsi, 3 ; here we add 3

mov rax, rsp

.LBB0_2: ; this is where the loop starts

mov qword ptr [rsp], rsi

dec rdi

jne .LBB0_2

.LBB0_3: ; this is where the loop ends

pop rax

ret

However, in very complex cases, the compiler cannot always figure this out.