Course Overview

This is the homepage for the embedded systems lab course. You can find most course information here.

This course is part of the CESE master's programme and continues the curriculum of Software Fundamentals and Software Systems.

For students who did not follow Software Fundamentals or Software Systems

Software Fundamentals and Software Systems are prerequisites for this course. We will apply much of what you learned in those courses, and we will be working with Rust, the language taught in both of them.

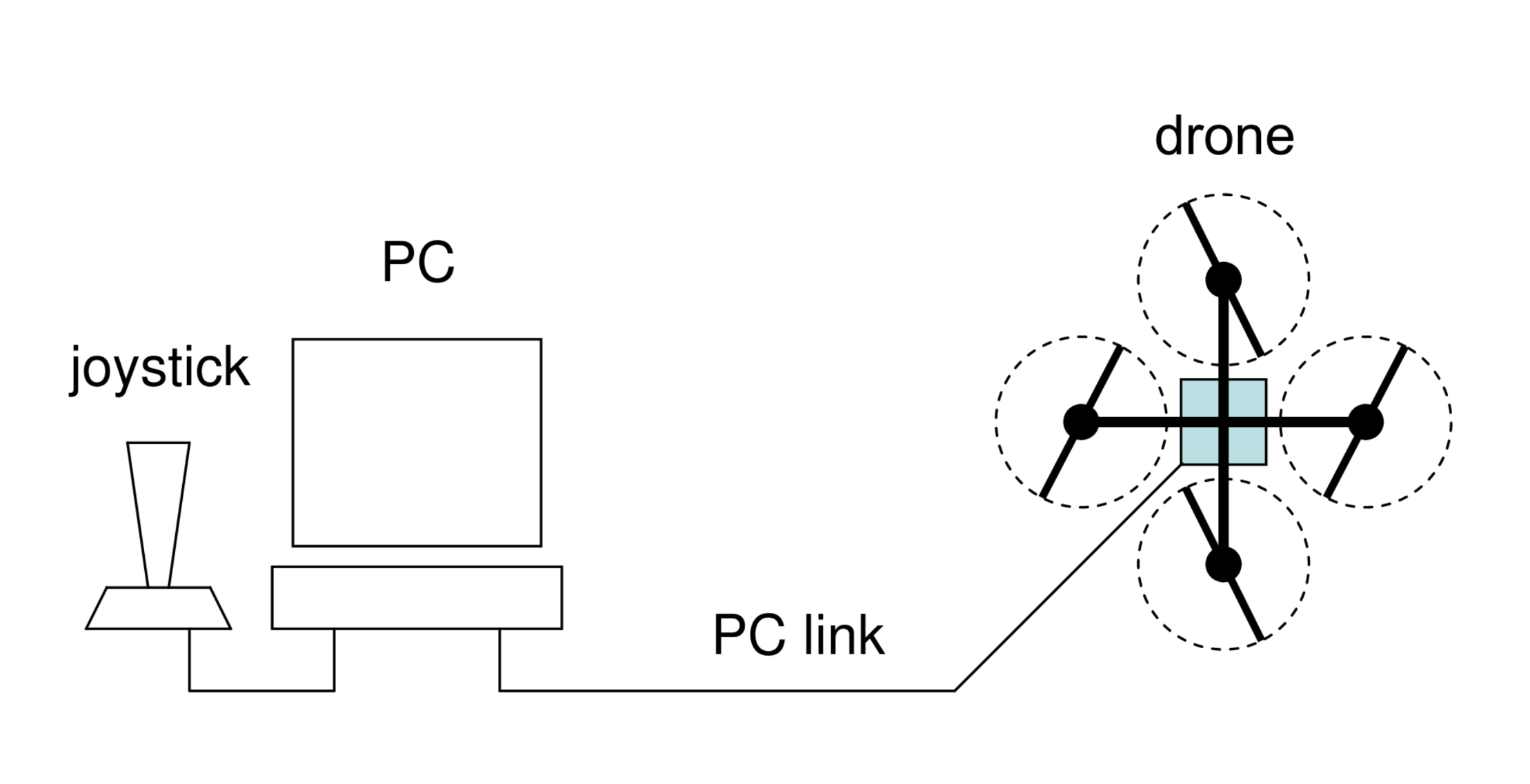

This highly multi-disciplinary course comes with a lab project where teams of 4 students each will have to develop an embedded control unit for a tethered electrical model quad rotor aerial vehicle (the Quadrupel drone), in order to provide stabilization such that it can hover and (ideally!) fly, with only limited user control (one joystick). The control algorithm (which is given) must be mapped onto a home-brew PCB holding a modern nRF SoC interfacing a sensor module and the motor controllers.

The students will be exposed to simple physics, signal processing, sensors (gyros, accelerometers), actuators (motors, servos), basic control principles, and, of course, embedded software, which must be written in Rust.

The course was developed by Arjan van Gemund, a former member of the Embedded and Networked Systems group.

Signup procedure

To take part in this course, you must sign up here: https://forms.office.com/e/ZagW3zxP0t NOTE: Sign-up closes at 13:00 on 11 February.

Course Format

The course is offered during the 3rd quarter of the academic year (February to April) and is centered around a lab. Students are split into teams of approximately 3-4 students. Each team is responsible for the entire design and implementation trajectory leading up to a working flight demonstrator. The course is compulsory for CESE, and a few seats are available for CS, EE, and other students. Teams are formed based on skills, type of master's programme, and organizational issues (black-out dates). The course grade is determined by the quality of the end result (flight demonstrator plus written report) and by each student's personal involvement and contribution.

This course is compulsory for CESE students. Students of other MSc programmes may join subject to availability; the number of seats is limited by the small number of quadcopters we have.

Lab facilities are available under the supervision of two Teaching Assistants during the 4-hour labs throughout the course period. Each team is scheduled for one of three weekly lab slots, giving it 8 labs (i.e., 32 hours) over the entire course period.

Much of the team work, such as meetings, background study, and programming, does not require lab equipment (and hence supervision) and must be performed outside lab hours. As the project work will definitely exceed the 32 supervised lab hours (it is more like 120 hours!), this unsupervised team work has proven to be a crucial success factor for the project.

Next to the lab, there are two lectures per week, covering quad-rotor (QR) mechanics, actuators, servos, sensors, elementary control theory, and embedded programming. The lectures provide the information necessary to successfully complete your lab project.

Staff

The lectures of this course will be given by Koen Langendoen et al.

During the labs you will mostly interact with the TA team:

- Vivian Dsouza

- Vivian Roest

- Jana Dönszelmann

- Hao Liu

- Shashwath Suresh

- George Hellouin de Menibus

- Felipe Perez

Contact

If you need to contact us, you can find us at the course email address: emb-syst-lab-ewi@tudelft.nl.

Deliverables

To pass the course, each team must successfully design, demonstrate, and document a QR control system, as described in the assignment. The lecture slides and an extensive set of relevant information (documentation, links, software) collected on the Project Resources page are available for reference.

Project Deliverables and Grading

The project deliverables are:

- Demonstrator: Your project will be assessed in the final lab session (week 3.9). During this session your code, as is, has to be demonstrated on the Quadrupel drone in order to obtain your demonstrator grade (D), which makes up 75% of the total course grade G. NOTE: no demo, no grade.

- Technical Report: The technical report must be submitted in PDF format (max 10 pages, including figures, tables, and appendices; see the assignment document) to your git repository by SUNDAY THE 13th of April. The report must contain everything you deem relevant; the point is that a team must be able to communicate all information relevant to the project within 10 pages. The report makes up 25% of your grade (R). Reports submitted after the deadline will not be accepted (i.e., R = 0, which implies you fail the course!).

- Source Code: Each team must submit all their source files, as used at the time of the actual demonstration, to their git repository BY THE 13th OF APRIL (the last commit before the deadline counts). The purpose of this is to check for fraud and to evaluate the nature of each student's personal code contribution to the team result.

The course grade is $G = 0.75\,D + 0.25\,R$ if and only if $D \ge 5$ and $R \ge 5$; otherwise the course is failed, and no grade is submitted to the administration.
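As a quick check of the grading rule, the computation can be written down directly. This is only an illustration: the function name is hypothetical, and any rounding applied by the administration is not modelled.

```rust
/// Course grade G = 0.75 D + 0.25 R, awarded only if both D and R are at least 5.
/// Returns `None` when the course is failed and no grade is submitted.
fn course_grade(d: f32, r: f32) -> Option<f32> {
    if d >= 5.0 && r >= 5.0 {
        Some(0.75 * d + 0.25 * r)
    } else {
        None
    }
}
```

For example, D = 8 and R = 6 give G = 7.5, while a late report (R = 0) fails the course regardless of D.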

Also look at Handing In.

NOTE: any other material submitted with the source code, such as figures, tables, or log files, will be ignored; the repository cannot be used as a backdoor to complement the report with data that did not fit within the hard 10-page limit.

Grading of the demonstrator

Your demonstrator grade (the D part) consists of several parts. Together, safety features, manual mode, and full control can get you to a 6.0. On top of that, raw mode can earn you up to 2 points, height control up to 1, wireless up to 1, and your GUI and any extra features together another point. The total is, of course, capped at 10.
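The maximum points above add up to 11 before the cap, so a team can reach a 10 without every optional feature. The sketch below only makes that arithmetic explicit; the course does not specify how points are awarded within a part, so the per-part scores are plain inputs, and `DemoScore` and `demonstrator_grade` are hypothetical names.

```rust
/// Points earned per part of the demonstrator (hypothetical type).
struct DemoScore {
    base: f32,           // safety features + manual mode + full control, up to 6.0
    raw_mode: f32,       // up to 2
    height_control: f32, // up to 1
    wireless: f32,       // up to 1
    gui_and_extras: f32, // up to 1
}

/// Clamps each part to its maximum, sums them, and caps the total at 10.
fn demonstrator_grade(s: &DemoScore) -> f32 {
    let total = s.base.min(6.0)
        + s.raw_mode.min(2.0)
        + s.height_control.min(1.0)
        + s.wireless.min(1.0)
        + s.gui_and_extras.min(1.0);
    total.min(10.0) // the maxima sum to 11, but D never exceeds 10
}
```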

Course Schedule

Lectures start on Monday, February 10th, 10:45 (kick-off); see mytimetable for the actual schedule. Labs take place in building 36 (low-rise), rooms H, J, K, L, and M, on Tuesday 08:45-12:30, Wednesday 08:45-12:30, or Thursday 08:45-12:30 (each team is assigned exactly one of these slots).

Week schedule:

| Week | Contents |
|---|---|
| 3.1, Monday | Course introduction and lab instructions (slides), System Architecture, QR Mechanics and Control (slides) |
| 3.1, Thursday | Embedded Programming |
| 3.2, Monday | Control Theory (slides) |
| 3.2, Tuesday-Wednesday-Thursday | First lab session |
| 3.3, Monday | Signal Processing (slides) |
| 3.4, Monday | QR Control (slides) |
| 3.6, Lab session | Interim exam, yaw control |
| 3.9, Lab session | D(emonstration) day! |

Note: The final demonstration lab sessions in Week 3.9 are on Monday 7th April, Tuesday 8th April, Wednesday 9th April and Thursday 10th April. The demonstrations take place in the same room as the labs. The deadline for handing in the report and code is Sunday 13th April.

Lab Timeline

This plan need not be followed to the letter; it is intended as a general guideline and lists the most important lab milestones.

Note that the lab time should be used to perform the tests for which you NEED the quadcopter.

Preparation (BEFORE 1st lab):

- Familiarize yourself with the HW

- Set up your toolchain

- Compile and upload the provided code

- Protocol design and implementation on the flight controller board

1st lab:

- Familiarize yourself with the HW

- Connect the joystick and try to get data from it

- Connect the control board and try to run the template

- Test protocol

- Implement manual mode

- Work on safety features (see Safety Checklist)

Preparation (BEFORE 2nd lab):

- Implement data logging

- Test data logging

- If you have not done so yet, implement the safety features (see Safety Checklist)

2nd lab:

- Demonstrate protocol

- Test manual mode

Preparation (BEFORE 3rd lab):

- Implement calibration

- Implement yaw control (DMP)

3rd lab:

- Demonstrate manual mode

- Test calibration

- Test yaw control (DMP)

Preparation (BEFORE 4th lab):

- Implement roll and pitch control (DMP)

- Start on filters (Butterworth 1st order, Kalman)

- Profile your code!

4th lab:

- Test and demonstrate yaw mode

- Test roll and pitch control (DMP)

Preparation (BEFORE 5th lab):

- Continue work on filters (Butterworth 1st order, Kalman)

- Implement height control

- Implement wireless

5th lab:

- Test and demonstrate full control using DMP

- Test Kalman filter

- Test wireless

- Test height control

Preparation (BEFORE 6th lab):

- Profile your code some more

- Finalize full control using Kalman

6th lab:

- Test and demonstrate full control using Kalman

- Preliminary demonstration + free advice

- Fine tuning

7th lab:

- Fine tuning

8th lab (week 3.9):

- Formal demonstration and grading

Lecture slides

Introduction

The CESE4030 Embedded Systems Laboratory aims to provide MSc students in ES, CS, CE, EE, and related fields with a basic understanding of what it takes to design embedded systems (ES). In contrast to other ES courses, especially those originating from the EE domain (traditionally the domain where ES originate), this course treats embedded systems design as selecting standard, programmable hardware components, such as microcontrollers, and then connecting and programming them, instead of developing, e.g., new ASICs. The rationale is that for many applications, the argument in favor of ASICs (large volumes) simply doesn’t outweigh the advantages of using programmable hardware.

Consequently, the course focuses on embedded software. The goal of this 5 ECTS course is not to master the multidisciplinary skills of embedded systems engineering, but rather for students to understand the basic principles and problems, develop a systems view, and become reasonably comfortable with the complementary disciplines.

Project

The project chosen for this course is to design embedded software that controls and stabilizes an unmanned aerial vehicle (UAV), commonly known as a drone. In particular, the course centers around a quad-rotor drone, dubbed the Quadrupel, developed (brewed!) in-house by the Embedded Software group. This application has been chosen for a number of reasons:

- The application is typical for many embedded systems, i.e., it integrates aspects from many different disciplines (mechanics, control theory, sensor and actuator electronics, signal processing, and last but not least, computer architecture and software engineering).

- The application is contemporary. Today’s low-cost drones can be flown with ease because of the embedded software that stabilizes the drone by simultaneously controlling roll, pitch, and yaw¹. Without such control software, even experienced pilots find it difficult to fly a drone in (windy) outdoor conditions without crashing a few seconds after lift-off.

- The application is typical for many air, land, and naval vehicles that require extensive embedded control software to achieve stability where humans are no longer able to perform this complicated, real-time task. Professional aerial vehicles such as helicopters, most airliners, and fighter jets rely entirely on ES for stability. This also applies to submarines, surface ships, missiles, satellites, and spaceships. Today’s push towards self-driving cars is another domain that critically depends on much of the same technology.

- Last, but certainly not least, quad-rotor drones are great fun, as shown by the rapidly increasing commercial interest in these toys (and in this course).

Although designing embedded software that would enable a drone to autonomously hover at a given location or move to a specified location (a so-called autopilot) would far exceed the scope of a 5 EC course, the course project is indeed inspired by this very ambition! In fact, the project might be viewed as a prototype study aimed at ultimately designing such a control system, in which a pilot would remotely control the drone through a single joystick, up to the point where the UAV is entirely flown by software.

¹ Roll, pitch, and yaw are the three angles that constitute a drone’s attitude in 3D. See Theory of Operation.

System Setup

The ultimate embedded system would ideally be implemented as a single chip (a SoC, system on chip) that easily fits within a drone’s limited payload budget. Instead, our prototype ES is implemented on a custom flight controller board (PCB) holding various chips (processor, sensors, etc.), which provides a versatile experimentation platform for embedded system development. The system setup is shown in Figure 1. The ES receives its commands via a so-called PC link from a ground station, which comprises a PC (laptop) and a joystick. The PC link can be operated in (1) tethered and (2) wireless mode. Although wireless mode is the ultimate project goal, tethered flight will be used most extensively. In this mode the drone is connected to the ground station through a USB cable providing low-latency, high-bandwidth communication. The motors are powered by a LiPo battery carried by the drone, whose charge state must be checked at all times, as operating these batteries at too low a voltage ruins them instantly. Charging a battery takes about an hour and provides 15 minutes or more of continuous flight. More information on the Quadrupel drone can be found elsewhere on this website.

Rather than resorting to expensive sensors, electronics, FPGAs, etc., we explicitly choose a low-cost approach, which opens up a real possibility for students inspired by this course to continue working with drones and/or ES on a relatively low budget; the price tag for a Quadrupel drone is around €250. Hobby model drones are relatively low-cost due to their simplicity. As the rotors do not feature a swash plate there is no rotor pitch control. Consequently, rotor thrust must be controlled through varying rotor RPM (revolutions per minute).

Together, the four rotors control vertical lift, roll, pitch, and yaw by varying their individual rotor RPM (see Theory of Operation). The accelerometer and gyro sensors required to derive drone attitude (the minimal information needed for a simple autopilot application) are also low-cost. By using a standard microcontroller (with integrated Bluetooth support), the Quadrupel drone can be programmed in Rust with an open-source compiler (rustc, invoked through cargo), avoiding the need for an expensive toolchain.
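The exact relation between rotor speeds and lift, roll, pitch, and yaw is given in Theory of Operation. As a sketch only, the code below assumes the commonly used quad-rotor model in which each quantity depends on the squared rotor speeds w1..w4: lift on their sum, roll on w4 - w2, pitch on w1 - w3, and yaw on w1 - w2 + w3 - w4, with all constants normalized to 1. The rotor numbering and signs are assumptions, so check them against Theory of Operation before reusing anything. `Setpoint`, `mix`, and `isqrt` are hypothetical names, and only integer arithmetic is used because the Cortex M0 lacks floating-point instructions.

```rust
/// Floor of the square root of `n`, using Newton's method on integers only.
fn isqrt(n: u32) -> u32 {
    if n < 2 {
        return n;
    }
    let mut x = n;
    let mut y = x / 2 + x % 2; // ceil(n / 2), computed without overflow
    while y < x {
        x = y;
        y = (x + n / x) / 2;
    }
    x
}

/// Desired lift and moments in pre-scaled integer units (hypothetical type).
struct Setpoint {
    lift: i32,
    roll: i32,
    pitch: i32,
    yaw: i32,
}

/// Solves the assumed model for the squared rotor speeds:
///   lift  = w1 + w2 + w3 + w4
///   roll  = w4 - w2
///   pitch = w1 - w3
///   yaw   = w1 - w2 + w3 - w4
/// and returns the rotor speeds ae1..ae4 as their square roots.
fn mix(s: &Setpoint) -> [u16; 4] {
    let pair_13 = (s.lift + s.yaw) / 2; // w1 + w3
    let pair_24 = (s.lift - s.yaw) / 2; // w2 + w4
    let w = [
        pair_13 / 2 + s.pitch / 2, // w1
        pair_24 / 2 - s.roll / 2,  // w2
        pair_13 / 2 - s.pitch / 2, // w3
        pair_24 / 2 + s.roll / 2,  // w4
    ];
    // A rotor cannot produce negative thrust: clamp negative squared speeds to zero.
    w.map(|wi| isqrt(wi.max(0) as u32) as u16)
}
```

With pure lift the four rotors spin equally fast; adding a pitch command speeds up rotor 1 and slows down rotor 3 while leaving rotors 2 and 4 alone, which is the kind of behavior to verify against the real conventions.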

The PC acts as a development and upload platform for the drone. The PC also serves as the user interface / ground station: it reads joystick and keyboard commands, transmits commands to the ES, and receives telemetry data from the ES, while visualizing and/or storing the data in a file for offline inspection. The PC and the drone communicate with each other by means of an RS232 interface (both in tethered and wireless mode).

Of course, the low-cost approach is not without consequences. Using low-cost sensors introduces larger measurement errors (drift, disturbance from frame vibrations), which degrade the accuracy of the computed attitude and the control performance. Using a low-cost microcontroller limits the performance of the design; the selected ARM Cortex M0 CPU, for example, runs at 14 MIPS and lacks floating-point arithmetic. Nevertheless, experiments have shown that the low-cost setup is more than capable of performing drone control, and it provides an excellent opportunity for students to get fully acquainted with embedded systems programming in a real and challenging application context, where dealing with limited-performance components is a fact of life.

Software setup

To run the template project provided on GitLab, you need to install the following:

We expect you to use Linux for this course. It is not inherently impossible to run the software on Windows or OSX, but it has not been tested, and we will provide no support. If you're new to Linux, we highly recommend Fedora Linux to get started.

You might find that many online resources recommend Ubuntu to get started, but especially for Rust development this may prove to be a pain. If you install Rust through the official installer, it will work, but if you installed Rust through `apt`, it might not. Fedora is just as easy an introduction to Linux, and will likely provide a better experience.

The Rust nightly compiler

- We recommend you run `rustup update` to get the newest version of the Rust compiler, even if you already had a version of the Rust compiler installed.

- If you don't have Rust installed, INSTALL RUSTUP FIRST. Follow these instructions: https://www.rust-lang.org/tools/install

- If you use either Ubuntu or Debian, the version of Rust that came with your operating system might be very old (check with `rustc --version`). Download a new version of Rust using rustup, following the installation instructions on https://www.rust-lang.org/tools/install

- Specifically, install the nightly version of Rust by running `rustup toolchain add nightly`

The cross-compiler for ARM architectures

- This is actually very simple to install: run `rustup target add thumbv6m-none-eabi`.

- If you followed Software Systems before, you should have done something similar for the Embedded Systems assignment, but with `thumbv7m-none-eabi` as a target instead.

- You can check whether you have this target installed with `rustup target list` (or `rustup target list --installed` to show only installed targets).

Cargo-binutils

- You can install this with `cargo install cargo-binutils`.

- If you have issues with this tool, you can try running `rustup update` and then `rustup component add llvm-tools-preview`.

- Make sure that the binaries of cargo-binutils are in your path. If you're not sure, check whether your PATH (run `echo $PATH`) contains `/home/yourname/.cargo/bin`.

Using the template

You can upload code to your drone controller board simply by running `cargo run` in the root folder of your project. The runner will automatically find the correct serial port to use. We do recommend that you carefully read the README.md of your template and the comments in the template's source code, especially the comments in the main.rs file of the runner folder.

We recommend that you use the template provided to you, as well as the `tudelft-quadrupel` library. However, we are almost certain it won't suit everyone's needs. Although you are required to write your code in Rust, you are allowed to either ignore the library code we provide and create your own, or modify it to suit your needs. You can find the template source code and library source code on GitLab, as well as our upload script.

If you'd like to modify the library, it might be good to know how you can easily use a modified version of the library in your project.

In your `Cargo.toml` you can specify dependencies like this:

```toml
tudelft-quadrupel = "1.0"
```

But you can replace this with a path dependency:

```toml
# note that this doesn't work very nicely in group projects,
# since everyone needs the library at this path for it to work
tudelft-quadrupel = { path = "../some/other/path/on/your/system" }
```

You can also specify a version together with the path. Note that Cargo still builds from the path (and checks that the version found there matches); the version is only used instead of the path when a crate is published to a registry, so it does not help group members who lack the library at that path:

```toml
tudelft-quadrupel = { version = "1.0.0", path = "../some/other/path/on/your/system" }
```

Lastly, you can specify a git dependency, which might be preferable:

```toml
tudelft-quadrupel = { git = "https://gitlab.ewi.tudelft.nl/cese/embedded-systems-lab/tudelft-quadrupel.git", branch = "some-branch" }
```

The repository can of course be your own, as long as it's publicly available.

Uploading to the board

To upload to the board, you might need to be in the `dialout` (or `uucp`, depending on your system) group on Linux to have permission to use the serial port. The `/dev/ttyUSB0` file is owned by a group that you need to be in to access it (check with `ls -la /dev/ttyUSB0`). You can check whether you have the required group by running the `groups` command. It is possible that the owning group of the file does not actually exist; in that case you need to create it. To do so, refer to this ArchWiki page.

It is also possible to set up udev rules for this.

To upload code to the board, you can just run `cargo run --release`. However, the chip should be reset before every upload.

Usually, this works by pressing the small button on the board (which temporarily cuts power to it) or by flipping the switch off and on. You can also unplug the board from your PC and plug it back in (in a few rare cases this might even be necessary, and trying a different USB port might help in that case as well; however, this shouldn't happen often). After that, run `cargo run --release` and the upload should work.

In general: press the button on the board before every upload!

Assignment

A note on the meaning of "panic"

The following might prove to be a little confusing. In this lab manual, we will refer to something called "Panic mode". This is a mode the drone can be in that carefully brings the drone into a safe state, and then automatically switches to "Safe mode" once that safe state has been reached. To bring the drone to a safe state in panic mode you will, for example, have to spin down all the motors.

This is entirely separate from the Rust concept of code panicking. When Rust code panics, there is generally not much you can do to keep running and keep your drone in the air. You might want to gracefully shut down your motors in your panic handler as a safety measure, but it's totally separate from "Panic mode".

So to reiterate one more time:

- "Panic mode" refers to one of the multiple modes the drone can be in

- Rust code panicking means that some kind of unrecoverable error occurred in your code, like an out-of-bounds index or an integer overflow (when overflow checks are enabled, as they are by default in debug builds)

The team assignment is to develop (1) a working demonstrator and (2) associated documentation (a report) of a control system, based on the quad-rotor drone available. In an uncontrolled situation, the drone, inherently unstable, will almost immediately engage in an accelerated, angular body rotation in an arbitrary direction, which typically results in a crash within seconds as an unskilled human will not be able to detect the onset of the rotation quickly enough to provide compensatory measures with the joystick. The embedded controller’s purpose is to control 3D angular body attitude (φ, θ, ψ) and rotation (p, q, r), as defined in the Theory of operation and Signal definitions. This implies that the body rotation will not just uncontrollably accelerate, but will be controlled by the embedded system according to the setpoints received from the joystick (specifying roll, pitch, and yaw). In effect, the embedded system acts as a stabilizer allowing a (slow) human to manually control drone attitude using the joystick.

The lab hardware available to each team is:

- a twist handle joystick,

- 2 test boards, including the microcontroller and sensors

- a drone

You are expected to have a computer running Linux to program the board and to fly the drone. It might be possible to use other operating systems or VMs for this course, but this is not well tested, so be prepared for some trouble setting that up.

The drone shall be controllable through the joystick, while a number of parameters shall be controlled from the PC user interface. The status of the system shall be visualized on the PC screen, as well as on the drone (using the 4 LEDs on the flight controller board). Whereas the hardware is provided, in principle all software must be designed by the team. Code on the Linux PC and the drone shall be written in Rust; the PC-side GUI may be programmed in any programming language. A description of the required system setup, together with the signal conventions, appears in the Signal definitions.

Safety Requirements

Although the project intentionally has some degrees of design freedom, the following requirements must be fulfilled. The first set of requirements relates to safe Quadrupel operation, such that this valuable piece of equipment is not damaged:

- Safety: The ES must have a safe state (for drone and operator) that can be reached at all times. In this state, the four motors are de-energized (zero RPM). This shall be the initial state at application startup, as well as the final state (at either normal exit or abort). Consequently, joystick states other than zero throttle and neutral stick position are illegal at startup, in which case the system shall issue a warning and refrain from further action. Note that the safe state is not to be implemented by merely zeroing all setpoints at the PC side, as this does not guarantee that the ES (when crashed) will be able to reach this state.

- Emergency Stop (Panic mode): It shall be possible to abort system operation at all times, upon which the system briefly enters a panic state, after which it goes to the safe state. In the panic state, rotor RPM is set low enough to avoid propeller damage, yet high enough to keep the drone from falling to the ground like a brick and damaging its frame. Panic mode should last for about a second, after which the drone must go into the safe state. Aborting the system must be possible by pressing joystick button(s) and keyboard key(s) (see the Interface requirements).

- Battery Health: The LiPo batteries powering the drone need to be handled with care, as draining them will inflict fatal damage. In particular, the battery voltage should never drop below 10.5 V. As soon as the battery voltage (read out via the internal ADC) crosses this threshold, the drone should enter panic mode. Preferably, the pilot should be warned ahead of time, so they can land safely without the risk of having the flight aborted at an inconvenient moment.

- Robust to noise: As sensor data is typically noisy and may contain spurious outliers, feeding raw sensor data to the controllers may result in large variations of the four rotor control signals ae1, ae2, ae3, and ae4, possibly causing motor stalling. The on-board sensing module (InvenSense MPU-6050) therefore includes a Digital Motion Processor (DMP) that filters and fuses the raw accelerometer and gyro readings into a smoothed stream of attitude readings. Note, however, that in the final stage of the project the filtering must be done “by hand” on the microcontroller itself, and care must be taken to remove obvious outliers and filter vibration noise such that the sensor data is sufficiently stable and reliable to be used in feedback control¹.

- Reliable communication: The PC link must be reliable, as any error in the actuator setpoints may have disastrous consequences, especially once the drone has lifted off. A wrong rotor RPM setpoint may immediately tilt the drone, causing a crash. Note that dependability is a key performance indicator in the project: a major incident caused by improper software development (i.e., improper coding and testing) may lead to immediate disqualification. Checking the communication status at both peers may involve sending and receiving specific status messages to ascertain that both peers are operating and receiving proper data.

- Dependability: The same dependability standards apply to the embedded system itself. If, for some reason, PC link behaviour is erratic (broken, disconnected), the code should immediately signal the error and go to panic mode.

- Robustness: Last but not least, the embedded control code must be as reliable as possible. An important aspect is the proper use of fixed-point arithmetic for representing and handling sensor values, filter values, controller values, various sorts of intermediate values, parameter values, and actuator values (the Cortex M0 processor does not provide floating-point arithmetic instructions). On the one hand, the values must not be too small, which would cause loss of precision (resolution). On the other hand, the values must not become too large, as this may cause integer overflow, with possibly disastrous consequences.
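Several of the requirements above (the safe state at startup, panic mode lasting about a second, the 10.5 V battery limit, and going to panic mode when the PC link breaks) can be enforced by a small supervisor running on the ES itself. The sketch below is one possible shape, not the template's design: `Supervisor`, the reduced `Mode` enum, the 10.8 V warning level, and the 200 ms link timeout are assumptions, and driving the motors at moderate RPM during panic mode is left to the motor code. Times are milliseconds from a free-running counter, compared with `wrapping_sub` so that counter wrap-around does not trigger a false timeout.

```rust
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
enum Mode {
    Safe,
    Panic,
    Manual, // stands in for every mode in which the motors may spin
}

// 10.5 V comes from the battery requirement; the other values are assumptions.
const BATTERY_PANIC_MV: u32 = 10_500;
const BATTERY_WARN_MV: u32 = 10_800;
const LINK_TIMEOUT_MS: u32 = 200;
const PANIC_DURATION_MS: u32 = 1_000; // "about a second"

struct Supervisor {
    mode: Mode,
    panic_since_ms: u32,
    last_message_ms: u32,
}

impl Supervisor {
    /// The system always starts in safe mode.
    fn new(now_ms: u32) -> Self {
        Supervisor { mode: Mode::Safe, panic_since_ms: now_ms, last_message_ms: now_ms }
    }

    /// Call for every valid message received over the PC link.
    fn on_message(&mut self, now_ms: u32) {
        self.last_message_ms = now_ms;
    }

    /// Abort: a flying mode goes to panic; safe and panic mode are left unchanged.
    fn enter_panic(&mut self, now_ms: u32) {
        if self.mode != Mode::Safe && self.mode != Mode::Panic {
            self.mode = Mode::Panic;
            self.panic_since_ms = now_ms;
        }
    }

    /// Leaving safe mode is refused unless the joystick is neutral and the battery is healthy.
    fn try_leave_safe(&mut self, target: Mode, joystick_neutral: bool, battery_mv: u32) -> bool {
        let allowed = self.mode == Mode::Safe
            && target != Mode::Safe
            && target != Mode::Panic
            && joystick_neutral
            && battery_mv >= BATTERY_PANIC_MV;
        if allowed {
            self.mode = target;
        }
        allowed
    }

    /// Periodic check from the control loop; returns true if the pilot should be warned
    /// that the battery is getting low.
    fn tick(&mut self, now_ms: u32, battery_mv: u32) -> bool {
        let link_lost = now_ms.wrapping_sub(self.last_message_ms) > LINK_TIMEOUT_MS;
        if battery_mv < BATTERY_PANIC_MV || link_lost {
            self.enter_panic(now_ms);
        }
        if self.mode == Mode::Panic
            && now_ms.wrapping_sub(self.panic_since_ms) >= PANIC_DURATION_MS
        {
            self.mode = Mode::Safe;
        }
        battery_mv < BATTERY_WARN_MV
    }
}
```

Because `tick` runs on the ES at a fixed rate, the drone reaches the safe state on its own even if the PC stops sending, which is exactly what zeroing setpoints on the PC side cannot guarantee.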
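One common way to meet the reliable-communication requirement is to wrap every message in a frame with a start marker, a length, and a checksum, and to drop any frame that fails the check. The sketch below assumes a frame layout of `START, len, payload, crc`, with a CRC-8 (polynomial 0x07) computed over the length and payload bytes; the layout, the `0xAA` marker, and the 16-byte payload limit are illustrative choices, not a protocol prescribed by the course. A real link additionally needs to resynchronize on the next start byte after a bad frame and to detect silence, for example with periodic status messages.

```rust
/// CRC-8 with polynomial 0x07, initial value 0, no reflection (bitwise version).
fn crc8(data: &[u8]) -> u8 {
    let mut crc: u8 = 0;
    for &byte in data {
        crc ^= byte;
        for _ in 0..8 {
            crc = if crc & 0x80 != 0 { (crc << 1) ^ 0x07 } else { crc << 1 };
        }
    }
    crc
}

const START: u8 = 0xAA; // hypothetical start-of-frame marker
const MAX_PAYLOAD: usize = 16;

/// Writes START, len, payload, crc into `out` and returns the frame length,
/// or `None` if the payload is too long or `out` is too small.
fn encode(payload: &[u8], out: &mut [u8]) -> Option<usize> {
    let n = payload.len();
    if n > MAX_PAYLOAD || out.len() < n + 3 {
        return None;
    }
    out[0] = START;
    out[1] = n as u8;
    out[2..2 + n].copy_from_slice(payload);
    out[2 + n] = crc8(&out[1..2 + n]);
    Some(n + 3)
}

/// Returns the payload only if the marker, length, and CRC all check out.
fn decode(frame: &[u8]) -> Option<&[u8]> {
    if frame.len() < 3 || frame[0] != START {
        return None;
    }
    let n = frame[1] as usize;
    if n > MAX_PAYLOAD || frame.len() != n + 3 {
        return None;
    }
    if crc8(&frame[1..2 + n]) != frame[2 + n] {
        return None;
    }
    Some(&frame[2..2 + n])
}
```

A CRC catches all single-bit errors and most burst errors, which a simple byte sum does not; on the ES, a rejected frame should never be applied as a setpoint.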
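To make the fixed-point requirement concrete, the sketch below uses a Q16.16 representation (16 integer bits, 16 fractional bits) stored in an `i32`. The format, the type name `Fix`, and its helper functions are illustrative assumptions, not part of the template or the `tudelft-quadrupel` library. Multiplication widens to `i64` before shifting back, so the intermediate product cannot overflow, and results saturate rather than wrap. Note that the Cortex M0 has no 64-bit multiply instruction, so the widening multiply is done in software and costs extra cycles.

```rust
use core::ops::{Add, Mul};

/// Q16.16 fixed-point number: 16 integer bits and 16 fractional bits in an i32.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
struct Fix(i32);

impl Fix {
    const FRAC_BITS: u32 = 16;

    /// Converts an integer, saturating if it does not fit in 16 integer bits.
    fn from_int(n: i32) -> Fix {
        Fix(n.saturating_mul(1 << Self::FRAC_BITS))
    }

    /// Builds num/den, e.g. a controller gain of 3/4; `den` must be non-zero.
    fn from_ratio(num: i32, den: i32) -> Fix {
        Fix::saturate(((num as i64) << Self::FRAC_BITS) / den as i64)
    }

    /// Clamps a wide intermediate result into the i32 range instead of wrapping.
    fn saturate(v: i64) -> Fix {
        Fix(v.clamp(i32::MIN as i64, i32::MAX as i64) as i32)
    }

    /// Integer part, rounded toward negative infinity (arithmetic shift).
    fn to_int(self) -> i32 {
        self.0 >> Self::FRAC_BITS
    }
}

impl Add for Fix {
    type Output = Fix;
    fn add(self, rhs: Fix) -> Fix {
        Fix(self.0.saturating_add(rhs.0))
    }
}

impl Mul for Fix {
    type Output = Fix;
    /// Multiplies in 64 bits, then shifts back to Q16.16 and saturates.
    fn mul(self, rhs: Fix) -> Fix {
        Fix::saturate((self.0 as i64 * rhs.0 as i64) >> Self::FRAC_BITS)
    }
}
```

For example, `Fix::from_int(100) * Fix::from_ratio(3, 4)` yields 75, while `Fix::from_int(20_000) * Fix::from_int(20_000)` saturates at the largest representable value instead of wrapping to a negative number. The right split between integer and fractional bits depends on the range of each signal, which is exactly the precision-versus-overflow trade-off described above.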

Functional Requirements

The next requirements relate to the proper functionality of the ES. As mentioned earlier, the ES is intended to act as a controller that provides a degree of stabilization of the drone's attitude and rotation, such that the drone can be adequately controlled by a human. The requirements are as follows:

- PC: The PC shall act as the user’s drone control interface. After verifying that the joystick is in neutral, it shall upload the ES program image to the drone (which at that point runs a simple bootloader that waits for a valid program to execute), read inputs from the keyboard and the joystick, send commands to the ES, read telemetry data from the ES, and visualize and store the ES data. At mission completion (or abort), the PC shall put the ES in the safe state and have the ES program terminate, which returns control to the bootloader that will wait for the next program to run.

- Drone: The ES shall be operated in at least six control modes: safe, panic, manual, calibration, yaw control, and full control. The raw mode, height control, and wireless mode are optional, but required for a good grade. In all modes except safe and panic, the joystick and keyboard produce the control signals lift, roll, pitch, and yaw (see the Interface requirements for the joystick and keyboard mappings). The demonstrator shall start in safe mode. A flight experimentation sequence always starts with selecting the proper mode, prior to ramping up motor RPM.

- Safe mode: In safe mode, the ES should ignore any command from the PC link other than the command to either move to another mode or to exit altogether. Effectively, the ES should keep the motors of the drone shut down at all times.

- Panic mode: In panic mode the ES commands the motors to moderate RPM for a few seconds, such that the drone (assumed uncontrollable) will still make a somewhat controlled landing to avoid structural damage. In the panic state, the ES should ignore any command from the PC link, and after a few seconds it should autonomously enter safe mode.

- Manual mode: In manual mode the ES simply passes on `lift`, `roll`, `pitch`, and `yaw` commands from the joystick and/or keyboard to the drone without taking sensor feedback into account. Note that the keyboard must also serve as a control input device (for instance, pressing ’a’ increments `lift` and pressing ’z’ decrements `lift`), such that the actual commands issued to the ES are the sum of the keyboard and joystick settings. This enables the keyboard to be used as a static trimming device producing a static offset, while the joystick produces the dynamic, relative control component. Also see the Interface requirements, which prescribe the key map that must be used.

- Calibration mode: In calibration mode the ES interprets the sensor data as corresponding to level attitude and zero movement. As the sensor readings contain a non-zero DC offset even when the drone is not moving, the calibration readings thus acquired are stored during the subsequent mode transition to a controlled mode, and used as reference during sensor data processing in that mode.

- Yaw-controlled mode: In yaw-controlled mode the ES controls drone yaw rotation in accordance with the yaw commands received from the twist handle. In this mode, the joystick control mapping is the same as in manual mode, except for the twist handle (`yaw`), which now provides a setpoint to the yaw controller. The yaw setpoint is a yaw rate (cf. r). Neutral twist (setpoint 0) must result in zero drone yaw rate (r = 0). Unlike in manual mode, drone yaw should now be as independent of rotor speed variations as possible, i.e., when the drone starts yawing, the ES should automatically adjust the appropriate rotor thrusts `ae1` to `ae4` to counteract the disturbance. As the yaw sensor may drift with time, an additional trim function (through keyboard keys, see the Interface requirements) must be supplied in order to maintain the relation between neutral twist and zero yaw rate. In this mode, the relevant controller parameter (P) should also be controllable from the keyboard (see the Interface requirements).

Full-control mode In full-control mode the ES also controls roll and pitch angle (φ, θ), next to yaw rate (r), now using all three controllers instead of just one. The same principles apply: neutral stick position should correspond with zero drone roll and pitch angle. As the drone has a natural tendency for rapidly accelerated roll and pitch rotation (it’s an inherently unstable system), proper roll and pitch control is absolutely required in order to allow the drone to be safely controlled by a human operator. Similar to the yaw control case, proper roll and pitch trimming prior to lift-off is crucial to safely perform roll and pitch control during lift-off as the vehicle has a natural tendency to either pitch or roll due to rotor and weight imbalance. For the keyboard map of the roll and pitch trimming keys see the Interface requirements.
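A cascaded P controller for one axis can be sketched as follows: the outer loop turns the angle error into a desired angular rate, and the inner loop turns the rate error into a roll (or pitch) command. One instance can serve roll (with φ and p) and pitch (with θ and q), since both use identical parameters. Mapping the two keyboard-tuned parameters onto the hypothetical fields `p_angle` and `p_rate`, and the 1/16 gain scaling, are assumptions of this sketch.

```rust
/// Cascaded proportional controller for one attitude axis (hypothetical sketch).
/// Gains are integers in units of 1/16.
#[derive(Debug, Clone, Copy)]
pub struct CascadedP {
    pub p_angle: i32, // outer loop: angle error -> rate setpoint
    pub p_rate: i32,  // inner loop: rate error -> moment command
}

impl CascadedP {
    const SHIFT: u32 = 4;

    /// `angle_setpoint`: stick position plus trim.
    /// `angle`: phi (roll) or theta (pitch); `rate`: p (roll) or q (pitch).
    pub fn update(&self, angle_setpoint: i32, angle: i32, rate: i32) -> i32 {
        let rate_setpoint = (self.p_angle * (angle_setpoint - angle)) >> Self::SHIFT;
        (self.p_rate * (rate_setpoint - rate)) >> Self::SHIFT
    }
}
```

Because the inner loop acts on the angular rate, which is the derivative of the angle, the inner gain plays a damping role much like a D term.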


Raw Sensors Full-control mode In this mode, the expected behavior is exactly the same as in Full-control mode. However, instead of using processed values from the on-board DMP (Digital Motion Processor), it relies on raw sensor data. This requires implementing filtering and sensor fusion as taught in class. Refer to the lecture slides for details: Signal Processing and Integration. Teams may also implement any other filtering technique of their choice, at their own risk (i.e., help from TAs is limited). All team members must be able to explain the theory of the filtering technique used.
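The sketch below is not the Kalman filter from the lecture; it is a simpler complementary filter with gyro-bias estimation, shown only to illustrate the structure of gyro/accelerometer fusion for one axis in integer arithmetic. It assumes the gyro input has already been scaled to an angle increment per sample (rate times sample period) and that an angle estimate derived from the accelerometer is available in the same units. The constants `C1` and `C2` are hypothetical and would need tuning.

```rust
/// Complementary filter with gyro-bias estimation for one axis (sketch).
/// Internal state carries FRAC extra fractional bits to limit rounding loss.
pub struct AxisFilter {
    angle: i32, // angle estimate, scaled by 2^FRAC
    bias: i32,  // gyro bias per sample, scaled by 2^FRAC
}

impl AxisFilter {
    const FRAC: u32 = 8;
    const C1: i32 = 64; // accelerometer correction: larger = trust the gyro longer
    const C2: i32 = 4096; // bias adaptation: larger = slower bias updates

    pub fn new() -> Self {
        Self { angle: 0, bias: 0 }
    }

    /// `gyro_delta`: calibrated gyro rate times the sample period, in angle units.
    /// `acc_angle`: angle derived from the accelerometer, in the same angle units.
    /// Returns the filtered angle in angle units.
    pub fn update(&mut self, gyro_delta: i32, acc_angle: i32) -> i32 {
        // 1. Predict: integrate the bias-corrected gyro increment.
        self.angle += (gyro_delta << Self::FRAC) - self.bias;
        // 2. Correct: move the estimate a fraction of the way towards the accelerometer.
        let error = self.angle - (acc_angle << Self::FRAC);
        self.angle -= error / Self::C1;
        // 3. A persistent error is attributed to gyro bias.
        self.bias += error / Self::C2;
        self.angle >> Self::FRAC
    }
}
```

Logging raw and filtered angles side by side, as required for the raw sensor step, is the natural way to tune `C1` and `C2`.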


Height-control mode In height-control mode the drone should maintain the altitude (height) it is currently at. The pressure reading from the barometer can be used to estimate height. As the barometer is known to be unstable, it may also be combined with other sensors to improve stability. Entering height-control mode should only be possible from either Full-control mode or Raw mode. The drone should maintain the altitude it had when height-control mode was activated. The intention is that the pilot can continue flying the drone around (including roll, pitch and yaw) while it maintains a stable altitude. When the pilot touches the throttle on the joystick, height control should be turned off and control should be returned to the previous mode (Full control or Raw control). As with all other modes, panic mode should always be reachable from height-control mode. For the keyboard map of the height control key see the Interface requirements.
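A height hold can be sketched as a P controller around the barometer reading captured when the mode is entered, plus an exit condition on the throttle. All names, the throttle deadband and the gain scaling below are hypothetical. The sketch also assumes a pressure value that grows with air pressure and has already been low-pass filtered, since the barometer is not stable on its own.

```rust
/// Result of one height-control step.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum HeightOutcome {
    /// New lift command to use this cycle.
    Lift(i32),
    /// The pilot moved the throttle: return to the previous mode.
    Exit,
}

/// Hypothetical height-hold state, created when height-control mode is entered.
pub struct HeightHold {
    ref_pressure: i32, // filtered barometer reading at entry
    ref_throttle: i16, // joystick throttle at entry
    base_lift: i32,    // lift command at entry
    p_gain: i32,       // gain in units of 1/16
}

impl HeightHold {
    const SHIFT: u32 = 4;
    const THROTTLE_DEADBAND: i32 = 8; // ignore tiny joystick jitter

    pub fn enter(pressure: i32, throttle: i16, lift: i32, p_gain: i32) -> Self {
        Self { ref_pressure: pressure, ref_throttle: throttle, base_lift: lift, p_gain }
    }

    pub fn update(&self, pressure: i32, throttle: i16) -> HeightOutcome {
        let moved = (i32::from(throttle) - i32::from(self.ref_throttle)).abs();
        if moved > Self::THROTTLE_DEADBAND {
            return HeightOutcome::Exit;
        }
        // Air pressure rises as the drone descends, so a positive error needs more lift.
        let error = pressure - self.ref_pressure;
        HeightOutcome::Lift(self.base_lift + ((self.p_gain * error) >> Self::SHIFT))
    }
}
```

On `HeightOutcome::Exit` the caller would switch back to whichever mode (Full control or Raw control) was active before.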


Wireless mode In wireless mode, the wired link to the PC is replaced with a wireless radio link. The expected behavior of the ES is the same as above. The on-board nRF51822 system on chip supports 2.4 GHz wireless radio communication with protocols such as BLE. Nordic also offers a proprietary radio protocol for its 2.4 GHz radio, which you can read about here (see Chapter 16, 2.4 GHz RADIO). We recommend implementing this protocol from the specification, as it has been tested before and is known to work. You can also use a second flight controller board as a transmitter/receiver dongle for your laptop. As this mode can be complicated, any effort to establish wireless communication will also be evaluated for partial points. For the keyboard map of the wireless control key see the Interface requirements.


Note that the microcontroller only provides integer-arithmetic instructions. Consequently, the filters must be implemented in software using fixed-point arithmetic.
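A minimal sketch of fixed-point helpers in Rust, assuming a Q16.16 format (16 integer and 16 fractional bits in an `i32`). The format is an arbitrary choice. Products are widened to `i64` so intermediate results cannot overflow; on a Cortex-M0 such 64-bit operations are done by software routines rather than single instructions, and division in particular is slow, so it is best kept out of the inner control loop.

```rust
/// Number of fractional bits in the assumed Q16.16 format.
pub const FRAC_BITS: u32 = 16;
/// The value 1.0 in Q16.16.
pub const ONE: i32 = 1 << FRAC_BITS;

/// Convert an integer to Q16.16.
pub fn from_int(x: i32) -> i32 {
    x << FRAC_BITS
}

/// Convert Q16.16 back to an integer, rounding towards minus infinity.
pub fn to_int(x: i32) -> i32 {
    x >> FRAC_BITS
}

/// Multiply two Q16.16 values, widening to i64 for the intermediate product.
pub fn mul(a: i32, b: i32) -> i32 {
    ((i64::from(a) * i64::from(b)) >> FRAC_BITS) as i32
}

/// Divide two Q16.16 values; `b` must be non-zero.
pub fn div(a: i32, b: i32) -> i32 {
    ((i64::from(a) << FRAC_BITS) / i64::from(b)) as i32
}
```

For example, `mul(from_int(3), ONE / 2)` represents 3 × 0.5 = 1.5.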

System Architecture

As mentioned earlier, the main challenge of the project is to write embedded software, preferably from scratch. However, as the project has to be completed in limited time (7 weeks), a sample skeleton program will be provided that implements the basic ES functionality of communicating commands and sensor readings over the PC link. This program effectively serves as a guide to the library functions operating the various hardware components of the Quadrupel drone. Note that the skeleton program consists of two parts, one for the PC side (e.g., for reading the joystick, and sending/receiving characters over the RS232 link), and one for the drone side (e.g., for reading the sensor values and writing the motor values). The sample program and additional information regarding hardware components and driver protocols are available on the project site. Note that the sample program is purely provided for reference, and a team is free to adopt as much or as little of it as it deems necessary: the system architecture, component definitions, interface definitions, etc. are specified, implemented, tested, and documented by the team itself.

System Development and Testing

An important issue in the project is the order of system development and testing. Typically, the system is developed from joystick to drone (the actuator path), and back (the sensor path), after which the yaw, roll, and pitch controllers are sequentially implemented. All but the last stages are performed in tethered mode. Each phase represents a project milestone, which directly translates into team credit. Note: each phase must start with an architectural design that presents an overview of the approach that is taken. The design must first be approved by the TA before the team is cleared to start the actual implementation.

Actuator Path (Safe, Panic, and Manual mode)

First, the joystick software is developed (using non-blocking event mode) and tested by visually inspecting the effect of the various stick axes. The next steps are the development of the RS232 software on the PC, and a basic controller that maps the RS232 commands to the drone hardware interfaces (sensors and actuators). At this time, the safe mode functionality is also tested. Once the design is fully tested, the system can be demonstrated using the actual drone.
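The RS232 link carries a plain byte stream, so commands need some framing that lets the drone find the start of a message and check that it arrived intact. The frame layout below (start byte `0xAA`, mode, four little-endian `i16` setpoints, XOR checksum) is a hypothetical example, not a prescribed protocol.

```rust
/// Hypothetical frame marker and total frame length in bytes.
pub const START: u8 = 0xAA;
pub const FRAME_LEN: usize = 11;

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct Frame {
    pub mode: u8,
    pub lift: i16,
    pub roll: i16,
    pub pitch: i16,
    pub yaw: i16,
}

/// XOR of all payload bytes.
fn checksum(payload: &[u8]) -> u8 {
    payload.iter().fold(0, |acc, b| acc ^ b)
}

/// Layout: [START, mode, lift(2), roll(2), pitch(2), yaw(2), checksum].
pub fn encode(f: &Frame) -> [u8; FRAME_LEN] {
    let mut buf = [0u8; FRAME_LEN];
    buf[0] = START;
    buf[1] = f.mode;
    buf[2..4].copy_from_slice(&f.lift.to_le_bytes());
    buf[4..6].copy_from_slice(&f.roll.to_le_bytes());
    buf[6..8].copy_from_slice(&f.pitch.to_le_bytes());
    buf[8..10].copy_from_slice(&f.yaw.to_le_bytes());
    let sum = checksum(&buf[1..10]);
    buf[10] = sum;
    buf
}

/// Returns `None` on a wrong start byte or checksum, so corrupted frames are dropped.
pub fn decode(buf: &[u8; FRAME_LEN]) -> Option<Frame> {
    if buf[0] != START || checksum(&buf[1..10]) != buf[10] {
        return None;
    }
    let field = |k: usize| i16::from_le_bytes([buf[k], buf[k + 1]]);
    Some(Frame { mode: buf[1], lift: field(2), roll: field(4), pitch: field(6), yaw: field(8) })
}
```

A receiver that rejects a frame would typically discard bytes until the next `0xAA`. An XOR checksum catches every single-bit error but not all multi-byte errors, so a CRC is a common upgrade.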

Sensor Path (Calibration mode)

The next step is to implement the sensor path, for now, using the on-board motion processor that provides angular information. Calibration is an important step, and (zeroed) data should be recorded (logged) and presented at the PC under simulated flying conditions (i.e., applying RPM to the motors, and gently rotating the drone in 3D).

Yaw Control

The next step is to introduce yaw feedback control, where the controller is modified such that the yaw signal is interpreted as a setpoint, which is compared to the yaw rate measurement r returned by the sensor path, causing the controller to adjust ae1 to ae4 if a difference between yaw and r is found (using a P algorithm). Testing includes verifying that the controller parameter (P) can be controlled from the keyboard, after which the drone is “connected” to the ES. By gently yawing the drone and inspecting yaw, r, and the controller-generated signals ae1 to ae4 on the PC screen, but without actually sending these values to the drone’s motors, the correct controller operation is verified. Only when this test has completed successfully are the signals ae1 to ae4 connected to the drone motors, and the system is cleared for the yaw control test on the running drone.

Full Control

The next step is to introduce roll and pitch feedback control, where the controller is extended in conformance with full-control mode, using cascaded P controllers for both angles. Again, the first test is conducted with ae1 to ae4 disconnected in order to protect the system. When the system is cleared for the final demonstration, the test is repeated with ae1 to ae4 connected.

Raw sensor readings

This step involves doing the sensor fusion on the main controller, instead of relying on the sensing module’s motion processor. Experience tells us that the Kalman filter presented in class looks deceptively simple, yet the details of implementing it with hand-written fixed-point arithmetic are far from trivial. Therefore the effectiveness of the digital filters must be extensively tested. Log files of raw and filtered sensor data need to be demonstrated to the TAs, preferably as time plots (matplotlib in Python or gnuplot). Before flying may be attempted, the TAs will thoroughly exercise the combined filter and control of your drone, to safeguard against major incidents resulting from subtle bugs.

Height Control

The implementation of this feature requires coding yet-another control loop to stabilize the drone’s height automatically, freeing the pilot from this tedious task. It may only be attempted once full control has been successfully demonstrated to the TAs. The flight control board does include a barometer whose pressure reading can be used to estimate height. However, experience tells us that it is not a very stable sensor. What other sensors could be used to stabilize height?

Wireless Control

Similarly to height control, wireless control can only be attempted once full control has been successfully demonstrated. In wireless mode the PC link is replaced by a wireless radio link. The particular challenge of this version is the low bandwidth of the link compared to the tethered version, which only permits very limited setpoint communication to the ES. This implies that the ES must stabilize the drone well on its own, as pilot intervention capabilities are very limited.

Methodology

The approach towards development and testing must be professional rather than student-like. Instead of an iterative try-compile-test loop, each component must be developed and tested in isolation, preferably outside of lab hours, so as to maximize the probability that the system works during lab integration (where time is extremely limited). This especially applies to tests that involve the drone, as drone availability is limited to lab hours. Having only limited access to the embedding environment is akin to reality, where the vast majority of development activities have to be performed under simulation conditions, without access to the actual system (e.g., wafer scanner, vehicle), because it is either not yet available, or there are only a few, and/or testing time is simply too expensive.

Safety Checklist

These are the checks the TAs will perform before testing any new mode on your drone. Make sure these always work when you work on new modes.

- In safe mode, your motors should always have an RPM of 0.

- You cannot enter other modes from safe mode if your joystick input is non-zero (e.g., throttle, steering).

- The following buttons should make your drone go to panic mode:
  - Joystick fire button
  - Escape (keyboard)
  - Space bar
  - Keyboard 1 (not numpad 1)

- The following scenarios should make your drone enter panic mode:
  - Disconnecting the joystick
  - Disconnecting the cable from the drone

- Finally, make sure that you use the LED on the board to indicate panic mode.

- In panic mode, your motors should equalize and gradually ramp down to zero.
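Two of these checks can be sketched in a few lines: the panic-mode ramp-down and a link watchdog for detecting a disconnected cable. Both are hypothetical sketches; the step size, the tick unit, and detecting a lost link by the absence of valid frames are assumptions rather than requirements of this checklist.

```rust
/// One panic-mode step, called once per control cycle: all four motors are set
/// to their common average minus `step`, so they equalize and then ramp down.
/// Returns `true` once the motors have reached zero.
pub fn panic_step(ae: &mut [u16; 4], step: u16) -> bool {
    let avg = (ae.iter().map(|&m| u32::from(m)).sum::<u32>() / 4) as u16;
    let next = avg.saturating_sub(step);
    *ae = [next; 4];
    next == 0
}

/// Link watchdog: `true` if no valid frame has arrived for more than
/// `timeout_ticks`. Wrapping subtraction stays correct when the tick counter overflows.
pub fn link_lost(now_ticks: u32, last_frame_ticks: u32, timeout_ticks: u32) -> bool {
    now_ticks.wrapping_sub(last_frame_ticks) > timeout_ticks
}
```

The ramp duration is set by `step`; when `panic_step` returns `true`, the drone can switch to safe mode.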

Safety Guidelines

A quadcopter is a complicated piece of equipment integrating engineering materials, electronics, mechanics, aerodynamics, high-frequency radio and embedded software. Correct installation and operation are a must in order to prevent accidents. Therefore you should follow these safety rules:

- Keep the quadcopters away from people and obstacles. The quad rotors are quite agile and can move at considerable speed. Therefore, it is best to keep them as far away from other people as possible. Also, keep away from obstacles that are either expensive or accident-prone (such as high-tension lines).

- For initial testing, hold the quadcopter in one hand and use the other hand for operating the joystick; never have another student pilot the quadcopter.

- Make sure the quadcopter is held whenever your code is running on it to avoid it taking off on its own by accident.

- Keep the quadcopters away from humidity, as this will affect the electronics and result in unpredictable behavior or crashes.

- Always fly under direct supervision of a TA. Flying a quadcopter can be difficult, especially when your software isn't yet fully functional.

- Do not operate a quad rotor if you are tired or in any other way less alert than usual. Loss of attention can result in crashes and consequential damage. When you fly a drone, keep a line of sight to it.

- Keep the quadcopters and the batteries away from heat sources.

- Make sure you test your code carefully before you fly it on a drone, to prevent crashes. Do not fly a drone directly after large or untested updates to your software.

- Always check the quadcopter before flight: during flight, parts vibrate and may loosen, such as little screws, the battery connection, or the propeller-holding nuts. Verify before the flight that there are no loose parts, in order to prevent erratic behavior.

- Keep the rotating blades away from other people and do not try to touch them as they can cause deep cuts.

- Wear safety goggles any time your code is running on the drone, to prevent damage to your eyes.

Battery Safety Guidelines

- The LiPo batteries: if used wrongly they can ignite and even explode, for instance when overcharged. Do not use them outside the range of -20 °C to 60 °C. The voltage should never go below 3 V per battery cell, nor should it go above 4.2 V per cell during charging. Avoid serious impact on the batteries and do not use sharp objects on them. Do not short-circuit the battery’s wires by, e.g., cutting them with scissors. Store the batteries at room temperature (19 °C to 25 °C).

- Charge and discharge the LiPo battery with caution, adhering to the guidelines for 3-cell LiPo operation.

Reporting

The team report, preferably typeset in LaTeX, should contain the following:

- Title, team ID, team members, student numbers and date

- Abstract (10 lines, specific approach and results)

- Introduction (including problem statement)

- Architecture (all software components and interfaces)

- Implementation (how you did it, and who did what)

- Experimental results (list capabilities of your demonstrator)

- Conclusion (evaluate design, team results, individual performance, learning experience)

- Appendices (all component interfaces, component code for those components you wish to feature)

Specific items that must be included are a functional block diagram of the overall system architecture, the size of the Rust code, the control speed of the system (control frequency, the latencies of the various blocks within the control loop), and the individual contributions of each team member (no specification = no contribution). The report should be complete but should also be as minimal as possible. In any case, the report must not exceed 10 pages (A4, 11 point), including figures and appendices. Reports that exceed this limit are NOT taken into consideration (i.e., desk-rejected).

Grading

Each team executes the same assignment and is therefore involved in a competition to achieve the best result. The course grade is directly dependent on the project result in terms of absolute demonstrator and report quality as well as relative team ranking. Next to team grading, each team member is individually graded depending on the member’s involvement and contribution to the team result. Consequently, a team member grade can differ from the team grade.

As this course is about embedded systems, it is mandatory for each team member to have written some embedded code that runs on the drone.

A better result earns more credits. A result is positively influenced by a good design and good documentation (which presents the design and experimental results). A good design achieves good stability, uses good programming techniques, has bug-free software, and demonstrates a modular approach where each module is tested before system-level integration, thereby adhering to the “first-time-right” principle (with respect to connecting to the real drone).

On the debit side, a design that requires excessive assistance from the Teaching Assistants (consultancy minutes) to make progress will cost credits.

This is a group project. In case group collaboration is insufficient, caused by one or multiple members, the work of the members in question will not be evaluated at all. You cannot pass this course without sufficient teamwork.

For more information about how grades are calculated see the Deliverables page.

Note: The final demonstration lab sessions in Week 3.9 are on Monday 7th April, Tuesday 8th April, Wednesday 9th April and Thursday 10th April. The demonstrations take place in the same room as the labs. The deadline for handing in the report and code is Sunday 13th April.

Handing in

When we grade your project, we simply take the last commit before the deadline (Sunday 13 April) from the GitLab repository we provide you. We will only look at the main branch and, in general, will not accept late submissions.

Your report should also be in your repository, preferably called report.pdf. For more information, see also Grading and Reporting, as well as Deliverables.

Lab Order

In the absence of the course instructor, the TAs have authority over all lab proceedings. This implies that each team member is required to follow their instructions. Students who fail to do so will be expelled from the course (i.e., receive no grade).

Safety (systems dependability) is an important aspect of the code development process, not only to protect the drone equipment, but also to protect the students against unanticipated reactions of the drone. The spinning rotors can cause injury, e.g., when broken rotor parts fly around after a malfunction or crash. Therefore, students must stay out of range of the drone, and those in its immediate vicinity must wear goggles.

As mentioned on the course web page, lab attendance by all team members is mandatory, both because of the importance of the lab in the course context and because of team solidarity. Hence, the TAs will at least perform a presence scan at the start and at the end of each lab session. Any team member whose aggregate AWOL (absent without leave) time exceeds 30 minutes will be automatically expelled from the course.

Although it is logical that team members will specialize to some extent as a result of the team task partitioning, it is crucial that each member fully understands all general concepts of the entire mission. Consequently, each team member is expected to be able to explain to the TAs what each of his/her team members is doing, how it is done, and why it is done. The TAs will regularly monitor each team member’s performance. Team members who display an obvious lack of interest in or understanding of team operations risk having their individual grades lowered or even being expelled from the course.

Fraud (as well as aiding fraud) is a serious offense and will always lead to (1) being expelled from the course and (2) being reported to the EEMCS Examination Board. As part of an active anti-fraud policy, all code must be submitted as part of the demonstrator, and will be subject to extensive cross-referencing in order to hunt down fraud cases. It is NOT allowed to reuse ANY computer code or report text from anyone else, nor to make code or text available to any other student attending CESE4030 (aiding fraud). An obvious exception is the code that was given to you by the course.

An excuse that one “didn’t fully understand the rules and regulations on student fraud and plagiarism at the Delft Faculty of EEMCS” will be interpreted as an insult to the intelligence of the course instructor and is NOT acceptable.

In case of doubt one is advised to first send a query to the course instructor before acting.

Finally, note that the lab sessions only provide a forum where teams can test their designs using the actual drone hardware. However, these sessions are not enough to successfully perform the assignment. An important part of the team work has to be performed outside lab hours, involving tasks such as having team meetings, designing the software, preparing the lab experiments, and preparing (sections of) the report. In order to enable (limited) experimentation outside lab hours, one flight controller board per team can be made available outside lab hours, once a €50 deposit has been made. The deposit is reimbursed once the board has been returned, and it is established that the board is fully functioning.

UAV Theory Of Operation

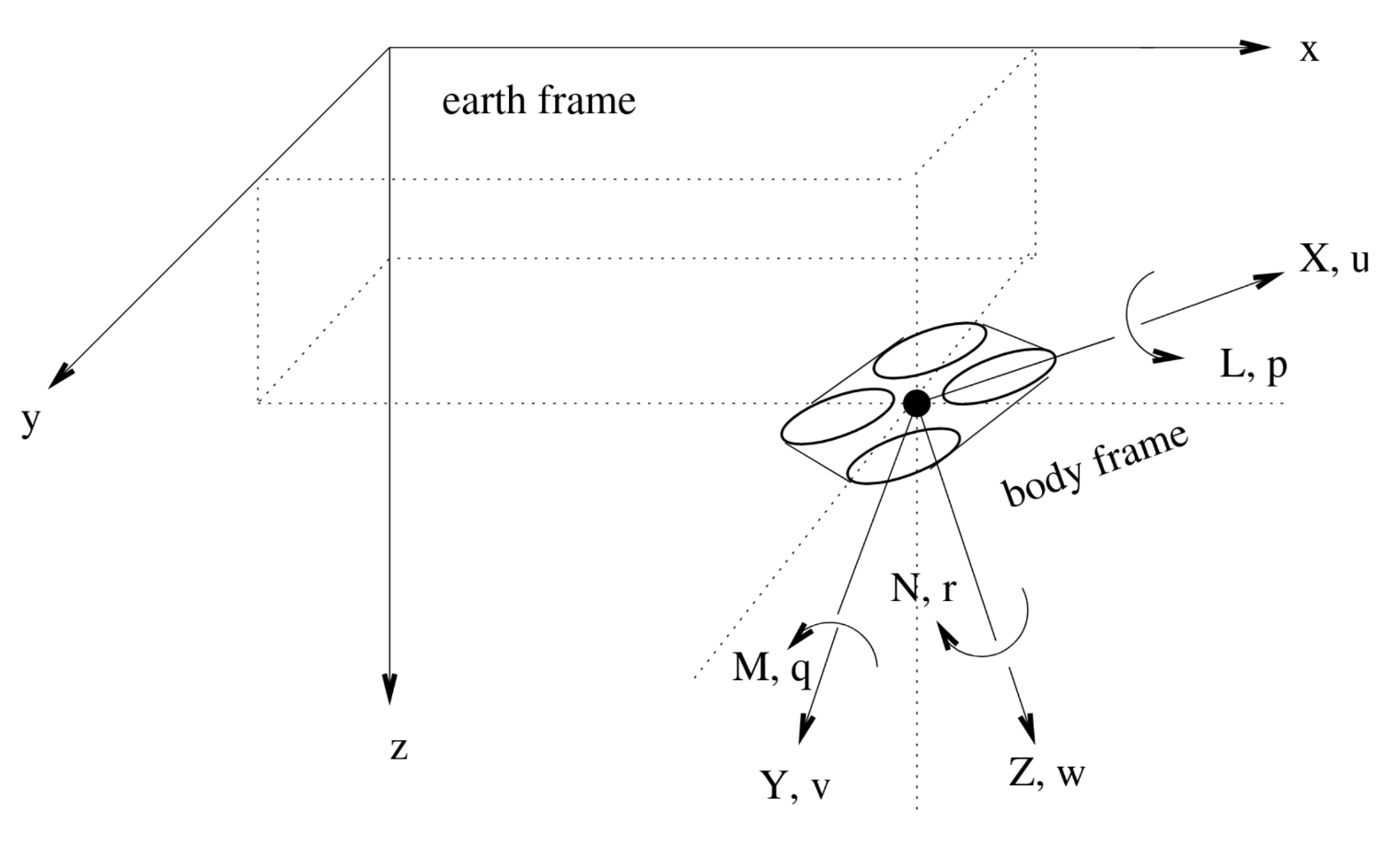

In the figure below, we describe the variables that are standard terminology for describing an aerial vehicle’s location and attitude (NASA airplane standard).

We use two coordinate frames, the earth frame and the (drone) body frame. When the drone (body frame) is fully 3D aligned with the earth frame, both x axes point in the drone’s forward flight direction. The y axes point to the right, while the z axes point downward. The variables are defined as follows:

- x, y, z: coordinates of the drone CG (center of gravity) relative to the earth frame [m]
- φ, θ, ψ: drone attitude (body frame attitude) relative to the earth frame (Euler angles) [rad]
- X, Y, Z: forces on the drone relative to the body frame [N]
- L, M, N: moments on the drone relative to the body frame [N m]
- u, v, w: drone velocities relative to the body frame [m s−1]
- p, q, r: drone angular velocities relative to the body frame [rad s−1]

The forces X, Y, Z result in (3D) acceleration of the vehicle, while the moments L, M, N result in rotation (change in roll, pitch, yaw). In hover, Z determines altitude (to be controlled by lift), while L, M, N need to be controlled by roll, pitch, and yaw, as explained later on.

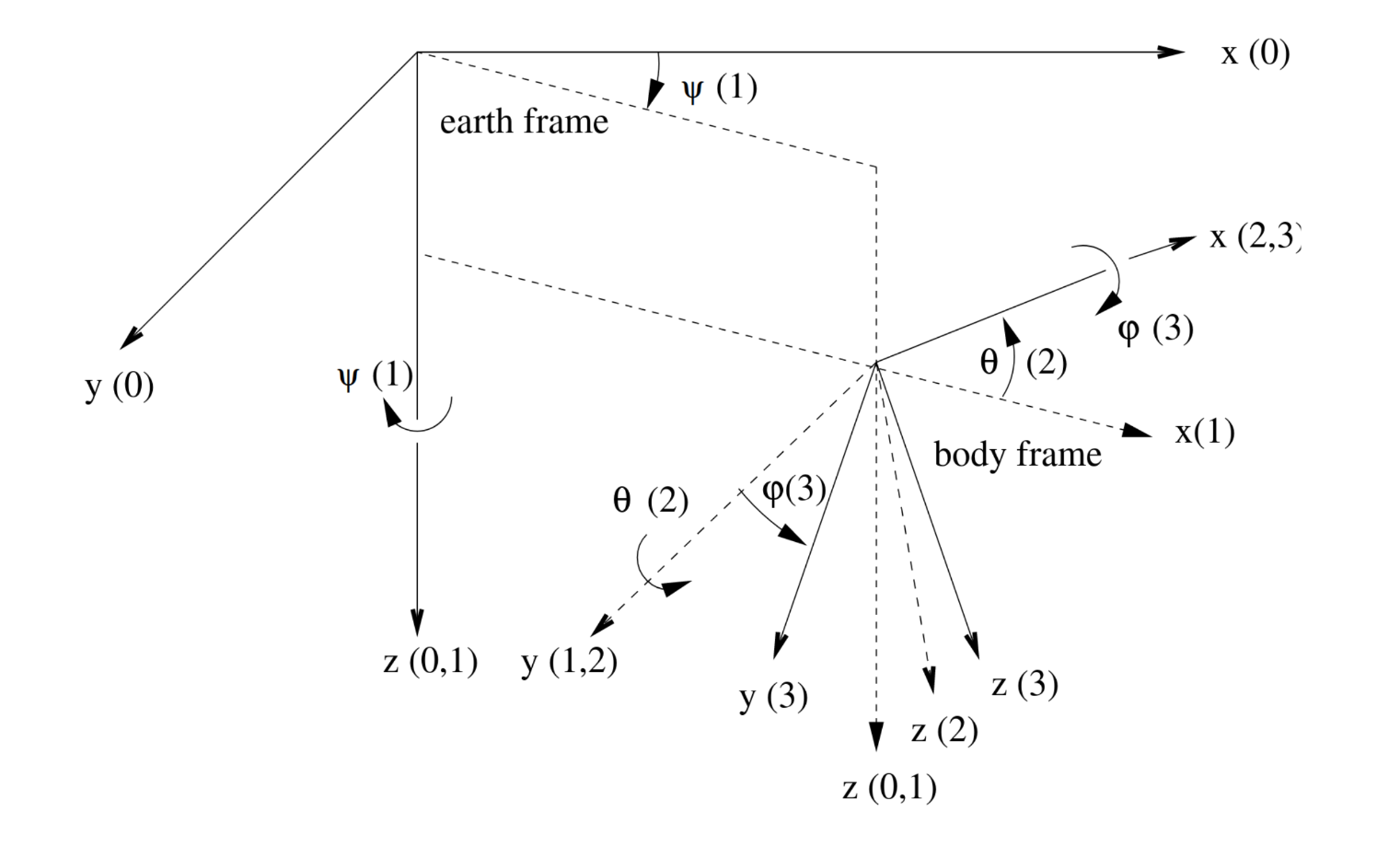

The Euler angles φ, θ, and ψ are not included in the above figure, as their graphical definition is less trivial. They describe the attitude of the body frame relative to the earth frame, and are defined in terms of three successive rotations of the body frame by the angles ψ, θ, φ, respectively¹. Before the rotations are applied, the body frame is fully aligned with the earth frame. First, the body frame is rotated around the z axis (body frame, earth frame) by an angle ψ. Next, the body frame is rotated around the y axis (body frame) by an angle θ. Finally, the body frame is rotated around the x axis (body frame) by an angle φ.

Note that the order of rotations (ψ, θ, φ) is significant, as different rotation orders produce a different final body frame attitude. The Euler angle definition is shown in the image below, where the index (i) denotes the various body frames in the course of the rotation (0: initial body frame, 3: final body frame).

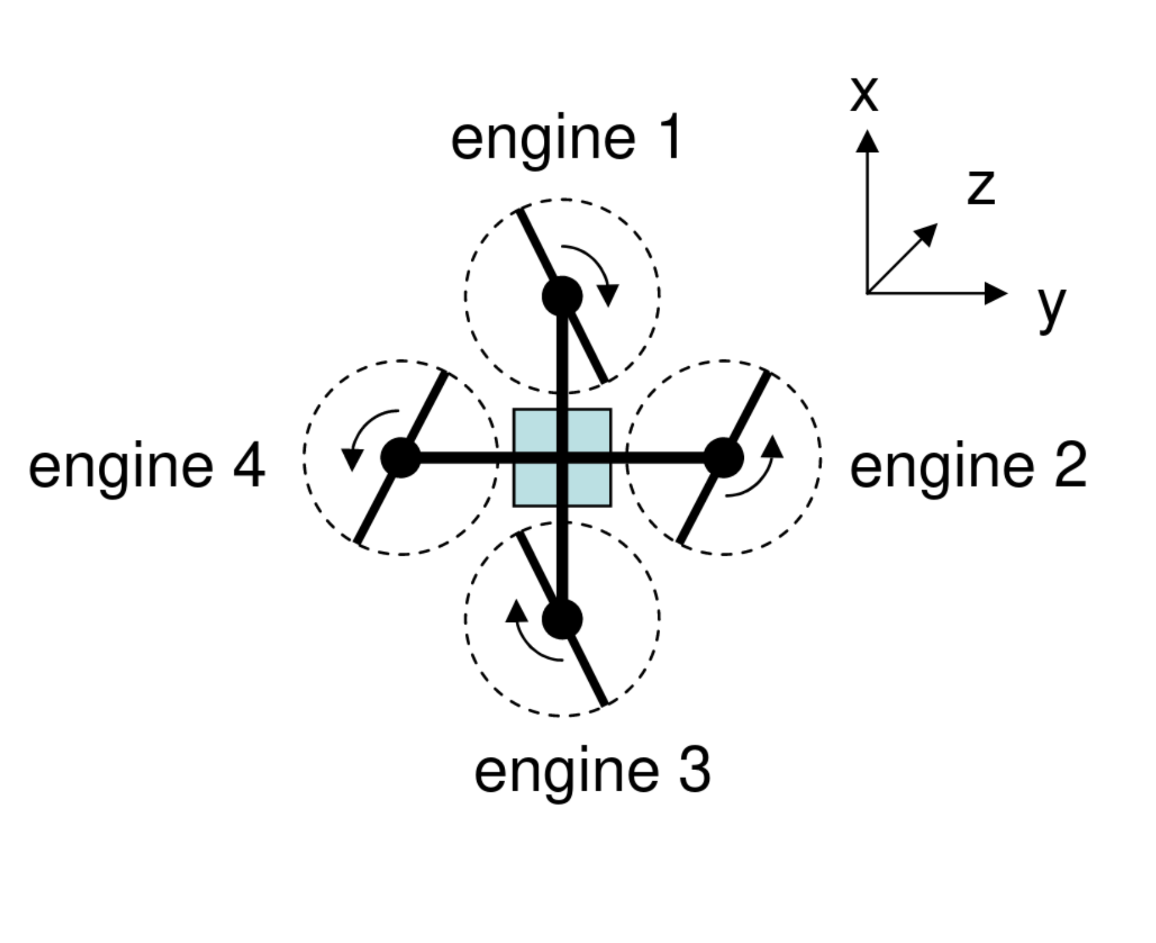
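The rotation sequence can also be written as a product of elementary frame-rotation matrices; this is the standard result for a z-y-x (yaw-pitch-roll) sequence, not something specified by the course. The matrix C (a name introduced here for illustration) converts the earth-frame components v_e of a vector into body-frame components v_b. The rightmost factor, the ψ rotation, is applied first, and since matrix products do not commute, reordering the factors generally changes C.

```latex
\mathbf{v}_b = C\,\mathbf{v}_e, \qquad C = R_x(\phi)\,R_y(\theta)\,R_z(\psi)

R_z(\psi) = \begin{bmatrix} \cos\psi & \sin\psi & 0 \\ -\sin\psi & \cos\psi & 0 \\ 0 & 0 & 1 \end{bmatrix}, \quad
R_y(\theta) = \begin{bmatrix} \cos\theta & 0 & -\sin\theta \\ 0 & 1 & 0 \\ \sin\theta & 0 & \cos\theta \end{bmatrix}, \quad
R_x(\phi) = \begin{bmatrix} 1 & 0 & 0 \\ 0 & \cos\phi & \sin\phi \\ 0 & -\sin\phi & \cos\phi \end{bmatrix}
```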

The forces X, Y, Z and moments L, M, N are applied externally through gravity and the four rotors. These forces and moments are given by

X = 0

Y = 0

Z =

L =

M =

N =

where ω1 to ω4 denote rotor RPM, and b and d are drone-specific constants. The above equations directly follow from the rotational direction of the rotors as shown in the image below:

The rotor RPM is approximately proportional to the voltage applied to the engines, which, in turn, is proportional to the duty cycle of the PWM signals generated by the drone interface electronics, which are controlled by ae_i (actuator engine i).

Note that ae_i reflects incorrect terminology, as e stands for engine, while the drone’s rotors are driven by motors. Switching terminology would be a major undertaking, as all reference code and documentation developed so far use these labels, so the e should be interpreted as electrical motor.

As a result, the above equations can also be expressed directly in terms of the actuator signals ae1 to ae4 according to

X = 0

Y = 0

Z =

L =

M =

N =

where b′ and d′ are drone-specific constants. Note that in order to compute the ae_i that produce a desired lift (through Z), roll (through L), pitch (through M), and/or yaw (through N), the above system of equations must, of course, be inverted.
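A sketch of this inversion in integer arithmetic. It assumes the model has the form lift = ae1² + ae2² + ae3² + ae4² (with lift = −Z/b′), roll = ae4² − ae2² (= L/b′), pitch = ae1² − ae3² (= M/b′) and yaw = −ae1² + ae2² − ae3² + ae4² (= N/d′). This rotor numbering and these signs are assumptions; check them against the equations and the rotor figure for your own drone before relying on the sketch.

```rust
/// Floor of the square root of `n` (0 for n <= 0), by integer Newton iteration.
fn isqrt(n: i64) -> i64 {
    if n <= 0 {
        return 0;
    }
    let mut x = n;
    let mut y = (x + 1) / 2;
    while y < x {
        x = y;
        y = (x + n / x) / 2;
    }
    x
}

/// Hypothetical motor mixing under the ASSUMED model (a_i = ae_i^2):
///   lift  = a1 + a2 + a3 + a4,   roll = a4 - a2,
///   pitch = a1 - a3,             yaw  = -a1 + a2 - a3 + a4.
/// Returns ae1..ae4 in the same arbitrary units.
pub fn mix(lift: i32, roll: i32, pitch: i32, yaw: i32) -> [i32; 4] {
    let (lift, roll, pitch, yaw) =
        (i64::from(lift), i64::from(roll), i64::from(pitch), i64::from(yaw));
    // Solving the four linear equations above for the squared rotor speeds:
    let a = [
        (lift + 2 * pitch - yaw) / 4,
        (lift - 2 * roll + yaw) / 4,
        (lift - 2 * pitch - yaw) / 4,
        (lift + 2 * roll + yaw) / 4,
    ];
    // A rotor cannot produce negative thrust: negative squares become 0.
    a.map(|ai| isqrt(ai) as i32)
}
```

Scaling the results to the actual motor command range, and keeping a minimum idle speed while airborne, are left out of this sketch.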

The other variables u, v, w and p, q, r are governed by the standard dynamic and kinematic equations describing the movement and rotation of a body in space [3].


¹ In drone and helicopter terminology, the three rotations are known as yaw, pitch, and roll, respectively, and are referred to as heading, attitude, and bank in airplane terminology.

Signal Definitions

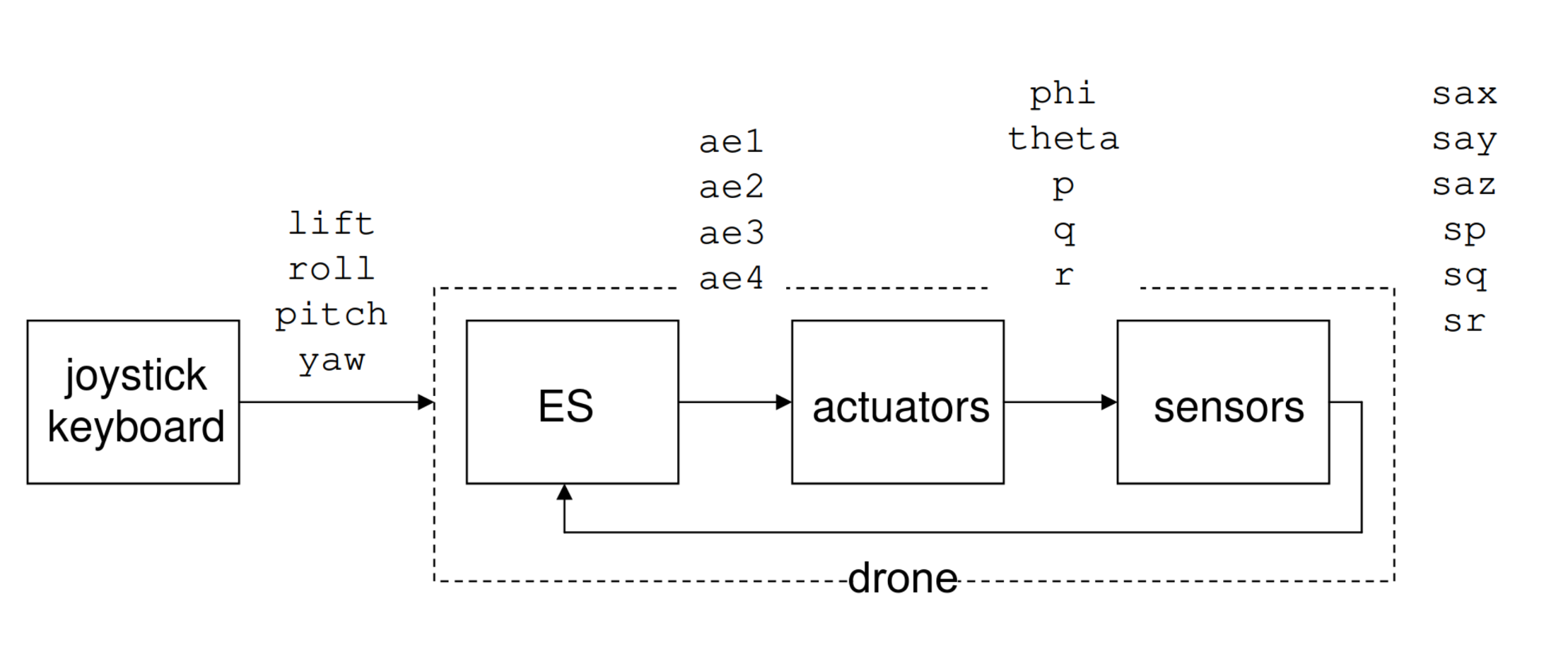

In the interest of clarity and standardization, in the following we define the most important signal names to be used in the project. We refer to the generic system circuit shown in Figure 1:

The four most important signals originating from joystick or keyboard are called:

- lift (engine RPM; keyboard: a/z; joystick: throttle)
- roll (roll; keyboard: left/right arrows; joystick: handle left/right (x))
- pitch (pitch; keyboard: up/down arrows; joystick: handle forward/backward (y))
- yaw (yaw; keyboard: q/w; joystick: twist handle)

The actuator (servo) signals to be sent to the drone electronics are called:

- ae1 (rotor 1 RPM)
- ae2 (rotor 2 RPM)
- ae3 (rotor 3 RPM)
- ae4 (rotor 4 RPM)

The sensor signals received from the drone electronics are called:

- sp (p angular rate gyro sensor output)
- sq (q angular rate gyro sensor output)
- sr (r angular rate gyro sensor output)
- sax (ax x-axis accelerometer sensor output)
- say (ay y-axis accelerometer sensor output)
- saz (az z-axis accelerometer sensor output)

Note that the sensor signals sax, sp, etc. do not equal the actual drone angle and angular velocity θ, p, etc. as defined earlier. Just like other drone state variables such as x, y, z, u, v, w, etc., these cannot be measured directly (unfortunately), which is why the sensors are there in the first place. Rather, the sensor signals are an (approximate!) indication of the real drone state, and proper determination (recovery) of this state is an important part of the embedded system. For simulation purposes [4], where the true drone state variables are obviously available (i.e., simulated), the drone state variables are called

- x, y, z
- phi, theta, psi (φ, θ, ψ)
- X, Y, Z
- L, M, N
- u, v, w
- p, q, r

which implies that these names must be used when referring to these signals in the Rust code.
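A Rust sketch of how these names might appear in code. The integer widths of the sensor and actuator signals are assumptions that depend on the drivers. The simulated state uses `f64`, which is acceptable on the PC side but not on the drone, where only integer arithmetic is available. Uppercase field names such as `X` and `L` need `#[allow(non_snake_case)]` to silence Rust’s naming lint.

```rust
/// Sensor signals received from the drone electronics (widths assumed).
#[derive(Debug, Clone, Copy, Default, PartialEq, Eq)]
pub struct SensorSignals {
    pub sp: i16,
    pub sq: i16,
    pub sr: i16,
    pub sax: i16,
    pub say: i16,
    pub saz: i16,
}

/// Actuator signals sent to the four rotors (width assumed).
#[derive(Debug, Clone, Copy, Default, PartialEq, Eq)]
pub struct Actuators {
    pub ae1: u16,
    pub ae2: u16,
    pub ae3: u16,
    pub ae4: u16,
}

/// True drone state, available only in simulation.
#[allow(non_snake_case)]
#[derive(Debug, Clone, Copy, Default, PartialEq)]
pub struct DroneState {
    pub x: f64, pub y: f64, pub z: f64,
    pub phi: f64, pub theta: f64, pub psi: f64,
    pub X: f64, pub Y: f64, pub Z: f64,
    pub L: f64, pub M: f64, pub N: f64,
    pub u: f64, pub v: f64, pub w: f64,
    pub p: f64, pub q: f64, pub r: f64,
}
```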

Interface Requirements

The following prescribes the minimum interface requirements. Apart from these requirements, appropriate on-screen feedback on drone and embedded systems performance earns credits.

Joystick map

| joystick input | result |
|---|---|
| joystick throttle up/down | lift up/down |
| joystick left/right | roll up/down |
| joystick forward/backward | pitch down/up |
| joystick twist clockwise/counter-clockwise | yaw up/down |
| joystick fire button | abort/exit |

The other buttons can be used at one’s own discretion.

Keyboard map

| key | result |
|---|---|
| ESC, SPACE BAR | go to safe mode, through panic mode |
| 0 | Safe mode |
| 1 | Panic mode |
| 2 | Manual mode |
| 3 | Calibration mode |
| 4 | Yaw-control mode |
| 5 | Full-control mode |
| 6 | Raw Sensors Full-control mode |
| 7 | Height control mode |
| 8 | Wireless mode |
| a/z | trim throttle up/down |
| left/right arrow | trim roll up/down |
| up/down arrow | trim pitch down/up (cf. stick) |
| q/w | trim yaw down/up |
| u/j | trim yaw control P up/down |
| i/k | roll/pitch control P up/down |
| o/l | roll/pitch control D up/down |

The keyboard controls for lift, roll, pitch and yaw should act as a fixed offset to the joystick input. This is useful to correct any small deviation your drone may have. If your joystick throttle is all the way down, the motors should be turned off, even if your throttle trim is non-zero. The full-control P and D parameters simultaneously apply to both the pitch and roll cascaded P controllers, which are identical. The other keys can be used at one’s own discretion.
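The throttle rule above can be sketched as a small function. `THROTTLE_MIN` is a hypothetical raw value for the lowest stick position; the actual value depends on the joystick and on how the PC scales its axes. The result is clamped at zero so that a negative trim cannot produce a negative lift command.

```rust
/// Hypothetical raw value reported when the throttle is all the way down.
pub const THROTTLE_MIN: i16 = 0;

/// Lift actually used: throttle plus keyboard trim, except that a throttle at its
/// lowest position always stops the motors, whatever the trim is.
pub fn effective_lift(throttle: i16, trim: i16) -> i16 {
    if throttle <= THROTTLE_MIN {
        0
    } else {
        throttle.saturating_add(trim).max(0)
    }
}
```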

Led indicators

Blue LED: blink at a 1s rate to indicate the program is running properly

The remaining LEDs can be used at one’s own discretion (typically used for status/debugging).

In the template, the LEDs are mapped as follows:

- red blinking: you probably have a panic

- blue blinking: your code is probably running fine

- yellow on + red blinking: this happens during initialization. If initialization fails, this is never turned off. If a panic happens and yellow is on, initialization likely failed

- green on + red blinking: an allocation happened causing a panic.

Lithium Polymer (LiPo) Battery Usage

Lithium polymer batteries are now widely used in hobby and UAV applications. They work well because they can store a large amount of energy, deliver high currents, and are lighter than nickel-metal hydride and nickel-cadmium batteries. But this increase in capacity comes with potential hazards.

Use only LiPo Chargers with Error Detection

It is always recommended that you charge your lithium polymer batteries with a charger specifically designed for lithium polymer batteries. As an example, you would charge 3-cell Kokam lipoly batteries (11.1 volts) at 1.5 amps. This type of charger has automatic shutoff capabilities that protect the batteries from overcharging and prevent charging a damaged battery. If you attempt to charge a damaged battery with a non-safe charger, the battery could catch fire or burst.

Limit LiPo Discharge to rate

Another potential hazard arises when lithium polymer batteries are discharged too fast, in which case they could catch fire and burst. LiPo batteries are rated with a continuous and a maximum discharge rating. For example, a 1500 mAh LiPo rated at 10C continuous should not be discharged at more than 15 A (1.5 Ah × 10C = 15 A).

LiPo Maximum Temperature

Lithium polymer batteries should not be operated in temperatures exceeding 60 degrees Celsius / 140 degrees Fahrenheit. In the event of a crash, lithium polymer batteries should be carefully inspected for punctures, puffiness, or short circuits. If any of these are present, do not use the batteries; dispose of them properly.

LiPo Minimum Voltage

A very important consideration is to NOT allow the battery voltage to drop too low. How far the battery voltage may drop depends on your system. If you are using a 3-cell lithium polymer pack, we recommend you land the UAV when the battery voltage drops below 10.5 volts. If the battery voltage drops below 8 volts, the batteries could become permanently damaged. Follow the manufacturer’s recommended guidelines, and make sure that your software monitors the battery voltage.
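A minimal battery-monitoring sketch, assuming the pack voltage is already available in millivolts (converting the ADC reading depends on the board and is not shown). The threshold is the 10.5 V landing voltage recommended above for a 3-cell pack; the moving average with weight 1/8 is an assumption meant to keep short voltage dips under high motor load from triggering a warning immediately.

```rust
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum BatteryState {
    Ok,
    Land,
}

/// Hypothetical battery monitor working on pack voltage in millivolts.
pub struct BatteryMonitor {
    filtered_mv: u32,
}

impl BatteryMonitor {
    /// Recommended landing voltage for a 3-cell pack.
    pub const LAND_MV: u32 = 10_500;

    pub fn new(initial_mv: u32) -> Self {
        Self { filtered_mv: initial_mv }
    }

    /// Exponential moving average: new value = (7 * old + sample) / 8.
    pub fn update(&mut self, sample_mv: u32) -> BatteryState {
        self.filtered_mv = (self.filtered_mv * 7 + sample_mv) / 8;
        if self.filtered_mv < Self::LAND_MV {
            BatteryState::Land
        } else {
            BatteryState::Ok
        }
    }
}
```

What to do on `BatteryState::Land` (an on-screen warning, or a controlled descent) is up to the team.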

Damaged LiPo Symptoms:

- Charger does not allow the battery to charge

- Batteries are puffy to the touch (do not use!)

- Punctures in the battery cell

- Short circuits

Proper LiPo Disposal Procedure:

- Discharge all cells to the recommended cut-off voltage of 3 V per cell

- Place in salt water for several hours

- Apply tape to one of the terminals and dispose of in the trash.

- DO NOT INCINERATE

Parallel Battery Configuration

Use Equally Charged LiPo’s in Parallel Configurations. Some UAV battery configurations require the parallel connection of two or more Lithium Polymer (LiPo) batteries. In this case, LiPo batteries must have the same or very similar output voltage. Parallel connection of two batteries of significantly different output voltage (one is more fully charged than the other) will cause one battery to discharge into the other battery at a high current rate. This may cause damage to either of the batteries and potentially cause fire or the battery to burst. It is strongly recommended that in applications requiring parallel connection of LiPo batteries, only batteries of equivalent technology, capacity, cell count, and output voltage be used. A good assumption is that fully charged LiPo batteries of the same type are safe to connect in parallel.

Avoid Flying in Wet Conditions

UAVs can fly in somewhat adverse conditions. We do not, however, recommend flying in falling precipitation. When flying with snow on the ground, aircraft and components will get wet (thus affecting performance) and must be dried before subsequent flights. Note that in this course we never fly outside, so this is unlikely to be an issue.

Hardware setup

Carbon drones:

- Frame: Turnigy Talon V2.0 (550mm)

- Motors: Sunnysky X2212-13 980kV

- ESC: Flycolor 20A BLHeli 204S Opto

- Sensor module: GY-86 (10 DOF)
  - three-axis gyroscope + triaxial accelerometer: MPU6050
  - compass: HMC5883L
  - barometer: MS5611

- RF SoC: nRF51822
  - wireless: Bluetooth Low Energy
  - microcontroller: ARM Cortex M0 CPU with 256kB flash + 16kB RAM

Aluminium drones:

- Frame: Turnigy Talon V2.0 (550mm)

- Motors: Sunnysky X2212-13 980kV

- ESC: Flycolor 20A BLHeli 204S Opto

- Sensor module: GY-86 (10 DOF)
  - three-axis gyroscope + triaxial accelerometer: MPU6050
  - compass: HMC5883L
  - barometer: MS5611

- RF SoC: nRF51822
  - wireless: Bluetooth Low Energy
  - microcontroller: ARM Cortex M0 CPU with 256kB flash + 16kB RAM

Miscellaneous

A couple of links that might interest you:

- How Microcontrollers Work

- UNIX Tutorial for Beginners

- CESE4000: Software Fundamentals Rust Lectures

- CESE4015: Software Systems Advanced Rust Lectures

- The Rust Book

- Control Theory Book

Acknowledgements

The founding father of this course is Arjan van Gemund, who is now enjoying the fine life of a retired professor. He conceived the lab back in 2006 and guided numerous people in implementing essential building blocks (HW and SW) for several generations of quadcopters. Marc de Hoop, Sijmen Woutersen, Mark Dufour, Tom Janssen, Michel Wilson, Widita Budhysusanto, Tiemen Schreuder, Matias Escudero Martinez, Shekhar Gupta, and Imran Ashraf all contributed in one way or another. Despite their fine work, it was decided to completely overhaul the FPGA-based quad-rotor platform (from Aerovinci), as it was costly to maintain and technology had moved on considerably. The ready availability of cheap, powerful microcontrollers and accurate out-of-the-box sensing allowed for a radically new design. This design was undertaken by Ioannis Protonotarios (HW + low-level software) and Sujay Narayana (software beta tester), and it led to the conception of the Quadrupel drone in 2016.